What's in a Rainbow table?

- What's a password hash?

- A real users database

- Attacking the users database

- Generating hash tables

- What's a rainbow table?

- The easy case

- The less-easy case

- Implementing rainbow tables

- Improving our rainbow tables implementation

- Defeating rainbow tables

Contents

In Veronica Mars and password hashes, from my new Tech As Seen On TV series, we've explored "cracking passwords" using brute-force methods, and then using rainbow tables, which was much, much faster.

But how do rainbow tables actually work? Let's start at the beginning.

What's a password hash?

A very simple design for an authentication system is to store passwords in

clear text, say, in a file named password.txt:

hunter2

Don't do that. Obviously. Not in the 80s, and not in 2020.

No, not even temporarily. Not even in logs. Just don't.

Then, the authentication code is really simple: just compare the password the user entered, against the one you have in your password file.

$ cargo new pass

Created binary (application) `pass` package

// in `src/main.rs`

use std::{error::Error, fs::read_to_string, io::stdin};

fn main() -> Result<(), Box<dyn Error>> {

let expected = read_to_string("password.txt")?;

println!("Please enter your password:");

let mut entered = Default::default();

stdin().read_line(&mut entered)?;

if entered.trim() == expected.trim() {

println!("You're authenticated!");

} else {

println!("*angry beep* You do not have the required credentials.");

}

Ok(())

}

Either it matches, or it doesn't:

$ cargo run -q Please enter your password: hunter2 You're authenticated! $ cargo run -q Please enter your password: rabbit7 *angry beep* You do not have the required credentials.

But that's, uh, not great. If someone breaks into your server and gets

ahold of password.txt, then they get aaaaall the passwords, and they

can break into aaaaaaall the accounts.

Normally the password file would contain usernames as well, and it would be a database.

A slightly less awful way of doing it, would be store not the passwords, but cryptographic hashes of the passwords instead.

What's a hash? It's a function!

And what's a function?

A function is something that maps inputs to outputs.

For example, an oven is a function. If you give dough as an input to the oven, you get bread.

We can also write that in text form, with any input we want:

oven(dough) = bread oven(apples) = baked apples oven(phone) = fire hazard

It's very convenient to think of a function as a mapping from an input space (containing dough, apples, etc.) to an output space.

Every input maps to an output. That's not true for all functions... but for the ones we're interested in today, it is!

Let's look at other functions! How about f(x) = x + 1? If we say that x

can be any positive integer (whole number), then both our input space and our

output space are infinitely large — so I'm only going to show part of them.

You see here that, for this input space, 0 is never produced by our function.

It can get even worse, see f(x) = x * 2.

Here, half the output space does not map back to the input space.

In the case of hash functions though, things are slightly different. Although our input space is infinite (any byte string of any length), our output space is finite.

For example, the output space for the MD5 function is: "all possible 128-bit values", which means there's only 2128 possible outputs – just about 340 undecillion possibilities (that's 3.4e38 for our engineer friends).

This means several things.

First, with an infinite input space and a finite output space, a good hash function should leave no part of the output space unmapped. You can imagine applying the function to more than 340 undecillion inputs - much more, even.

After a while, if it's a good hash function, then the entire output space has been used.

But there's a second thing - our output space is not large enough for every element of the input space to have a unique mapping.

When two inputs map to the same output, we say we have a collision.

MD5 is super broken by now. In December 2010, researchers Tao Xie and Dengguo Feng announced they had found a "single block" (the inputs are only 512 bits) collision for MD5, where the inputs only differ by two bits.

But, we won't let the brokenness of MD5 get in the way of us discussing authentication systems. Let's use it.

First we'll do by hand the "set password" phase, where we need to calculate the MD5 hash of the password:

$ printf "hunter2" | md5sum 2ab96390c7dbe3439de74d0c9b0b1767 -

We're using printf instead of echo because echo adds a newline afterwards,

and that would change the hash:

echo "hunter2" | md5sum 6a0f0731d84afa4082031e3a72354991 -

And that's what we'll store in a hash.txt file.

2ab96390c7dbe3439de74d0c9b0b1767

Then, when we want to verify the user's password, well, we compute the hash of whatever the user entered and check it against the hash we have in our "database".

$ cargo add md5

Adding md5 v0.7.0 to dependencies

use std::{error::Error, fs::read_to_string, io::stdin};

fn main() -> Result<(), Box<dyn Error>> {

let expected = read_to_string("hash.txt")?;

println!("Please enter your password:");

let mut entered = Default::default();

stdin().read_line(&mut entered)?;

let entered = md5::compute(entered.trim().as_bytes());

// we stored our md5 hash in text (hexadecimal) format,

// so we must format our computed hash the same way

// (or parse `expected` into an array of bytes - left

// as an exercise to the reader)

let entered = format!("{:016x}", entered);

if entered.trim() == expected.trim() {

println!("You're authenticated!");

} else {

println!("*angry beep* You do not have the required credentials.");

}

Ok(())

}

And then our authentication system is complete!

$ cargo run -q Please enter your password: hunter2 You're authenticated! $ cargo run -q Please enter your password: gatherer17 *angry beep* You do not have the required credentials.

A real users database

Let's make our program a little more interesting. We'll try to have a real password database.

For simplicity, we'll keep all the functionality in the same program: adding a user, authenticating, and various attack functions.

Let's define what we want our CLI to look like. I want to be able to do something like:

$ pass add-user renny hunter2 User renny added to database $ pass list-users Users: - renny $ pass auth renny hunter2 Authentication successful! $ pass auth renny foobar Bad password. $ pass auth remy foobar No such user.

With argh, we can achieve this in a nice and declarative way:

$ cargo add argh

Adding argh v0.1.3 to dependencies

use argh::FromArgs;

/// Experiment with passwords.

#[derive(FromArgs)]

struct Args {

#[argh(subcommand)]

command: Command,

}

#[derive(FromArgs)]

#[argh(subcommand)]

enum Command {

AddUser(AddUser),

ListUsers(ListUsers),

Auth(Auth),

}

#[derive(FromArgs)]

/// Add a user to the database

#[argh(subcommand, name = "add-user")]

struct AddUser {

#[argh(positional)]

username: String,

#[argh(positional)]

password: String,

}

#[derive(FromArgs)]

/// List users

#[argh(subcommand, name = "list-users")]

struct ListUsers {}

#[derive(FromArgs)]

/// Authenticate as a user

#[argh(subcommand, name = "auth")]

struct Auth {

#[argh(positional)]

username: String,

#[argh(positional)]

password: String,

}

Next up, we need some way to represent our database. I think here it can just

be a HashMap<String, Vec<u8>>, where the String is the username, and the

Vec<u8> is the MD5 hash of the password.

use std::collections::HashMap;

/// Maps username to passwords

struct Database {

records: HashMap<String, Vec<u8>>,

}

We want to save our database to disk and load it back up, so let's draft a few helpful functions:

use std::error::Error;

impl Database {

fn load_or_create() -> Result<Self, Box<dyn Error>> {

todo!("load database")

}

fn save(&self) -> Result<(), Box<dyn Error>> {

todo!("save database")

}

/// Note: this function performs no locking whatsoever.

/// Last closer wins.

fn with<F, T>(f: F) -> Result<T, Box<dyn Error>>

where

F: FnOnce(&mut Self) -> Result<T, Box<dyn Error>>,

{

let mut db = Self::load_or_create()?;

let res = f(&mut db);

db.save()?;

res

}

}

And given those, it's fairly simple to implement what we had in mind:

fn main() -> Result<(), Box<dyn Error>> {

let args: Args = argh::from_env();

match args.command {

Command::AddUser(args) => Database::with(|db| {

db.records

.insert(args.username.clone(), md5::compute(args.password).to_vec());

println!("User {} added to database", args.username);

Ok(())

}),

Command::ListUsers(_) => Database::with(|db| {

println!("Users:");

for k in db.records.keys() {

println!(" - {}", k);

}

Ok(())

}),

Command::Auth(args) => Database::with(|db| {

let entered = md5::compute(args.password);

match db.records.get(&args.username) {

Some(stored) if stored == entered.as_ref() => {

println!("Authentication successful!");

}

Some(_) => {

println!("Bad password.");

}

None => {

println!("No such user.");

}

}

Ok(())

}),

}

}

Let's try it out!

$ cargo run -q

One of the following subcommands must be present:

help

add-user

list-users

auth

$ cargo run -q -- add-user renny hunter2

thread 'main' panicked at 'not yet implemented: load database', src/main.rs:53:9

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

Oh, uh... right!

There you go again, cutting corners!

I resent that! todo!() is a perfectly fine macro to use – it's just

doing its job.

Mhh, if you say so. Chop chop!

Okay, we need to serialize and deserialize something, y'all already know where this is going.

# in Cargo.toml

[dependencies]

serde = { version = "1", features = ["derive"] }

serde_json = "1"

# (already added by cargo-edit: md5, argh)

And then, well... from here on, as they say, it's all gravy.

First we:

use serde::{Deserialize, Serialize};

/// Maps username to passwords

#[derive(Clone, Default, Serialize, Deserialize)]

struct Database {

records: HashMap<String, Vec<u8>>,

}

And then we:

use std::fs::File;

impl Database {

const PATH: &'static str = "users.db";

fn load_or_create() -> Result<Self, Box<dyn Error>> {

Ok(match File::open(Self::PATH) {

Ok(f) => serde_json::from_reader(f)?,

Err(_) => Default::default(),

})

}

fn save(&self) -> Result<(), Box<dyn Error>> {

let f = File::create(Self::PATH)?;

Ok(serde_json::to_writer_pretty(f, self)?)

}

// omitted: `with`

}

And then we're golden!

$ cargo run -q -- add-user renny hunter2 User renny added to database $ cargo run -q -- list-users Users: - renny $ cargo run -q -- auth renny hunter2 Authentication successful! $ cargo run -q -- auth renny foobar Bad password. $ cargo run -q -- auth remy foobar No such user.

Let's look at our users.db file:

{

"records": {

"renny": [

42,

185,

99,

144,

199,

219,

227,

67,

157,

231,

77,

12,

155,

11,

23,

103

]

}

}

Oh that's...

Oh whoa.

Yeah, JSON doesn't really have a "byte slice" or "raw data" type

Yeah I suppose you could plug base64 in there, but...

Yeah. Better switch to something else.

We don't really care what format our "database" uses. Using serde gives

us many different choices.

Let's go with something simple, like bincode.

$ cargo rm serde_json

Removing serde_json from dependencies

$ cargo add bincode

Adding bincode v1.3.1 to dependencies

Let's change just a couple lines:

impl Database {

fn load_or_create() -> Result<Self, Box<dyn Error>> {

Ok(match File::open(Self::PATH) {

// was using serde_json

Ok(f) => bincode::deserialize_from(f)?,

Err(_) => Default::default(),

})

}

fn save(&self) -> Result<(), Box<dyn Error>> {

let f = File::create(Self::PATH)?;

// was using serde_json

Ok(bincode::serialize_into(f, self)?)

}

// omitted: everything else

}

And try adding Renny to the database again:

$ cargo run -q -- add-user renny hunter2 pass(24262,0x10ccd8dc0) malloc: can't allocate region :*** mach_vm_map(size=8872155185559142400, flags: 100) failed (error code=3) pass(24262,0x10ccd8dc0) malloc: *** set a breakpoint in malloc_error_break to debug memory allocation of 8872155185559138927 bytes failed[1] 24262 abort cargo run -q -- add-user renny hunter2

Oh.

Huh. Oh wait - it's trying to load the JSON as bincode!

Yeah. And it's not happy about it.

Let's wipe users.db and try this again:

$ rm users.db $ cargo run -q -- add-user renny hunter2 User renny added to database $ xxd users.db 00000000: 0100 0000 0000 0000 0500 0000 0000 0000 ................ 00000010: 7265 6e6e 7910 0000 0000 0000 002a b963 renny........*.c 00000020: 90c7 dbe3 439d e74d 0c9b 0b17 67 ....C..M....

Eyyy, see 2ab963...? That's our hash! There's still a bunch of zeroes,

but what are you gonna do - that's alignment for you.

We could plug in compression, if we want.

Can we please?

Oh alright.

But we won't use DEFLATE. We'll use something "modern". Something webscale. We're going to try ourselves a little.

What we want is... something that compresses away all those pesky zeroes, but doesn't necessarily try too hard. We probably want to prefer speed over compression ratio.

What we want is... something like Snappy.

And what do you know, there's a crate for that.

$ cargo add snap

Adding snap v1.0.1 to dependencies

impl Database {

fn load_or_create() -> Result<Self, Box<dyn Error>> {

Ok(match File::open(Self::PATH) {

// new: snap usage

Ok(f) => bincode::deserialize_from(snap::read::FrameDecoder::new(f))?,

Err(_) => Default::default(),

})

}

fn save(&self) -> Result<(), Box<dyn Error>> {

let f = File::create(Self::PATH)?;

Ok(bincode::serialize_into(

// new: snap usage

snap::write::FrameEncoder::new(f),

self,

)?)

}

// omitted: everything else

}

But that's it. No more distractions.

Awww.

Let's see how snap behaves when loading something that's not snap-encoded...

$ cargo run -q -- add-user renny hunter2

Error: Io(Custom { kind: Other, error: StreamHeader { byte: 1 } })

Yeah, that figures.

$ rm users.db $ cargo run -q -- add-user renny hunter2 User renny added to database $ xxd users.db 00000000: ff06 0000 734e 6150 7059 0029 0000 5a2d ....sNaPpY.)..Z- 00000010: 6c0b 2d04 0100 0901 0005 0907 1800 7265 l.-...........re 00000020: 6e6e 7910 0d0d 3c2a b963 90c7 dbe3 439d nny...<*.c....C. 00000030: e74d 0c9b 0b17 67 .M....g

Huh, it's actually longer. We're paying for the header here, and... I guess we don't have that many repeated zeros. It would probably pay off more with a bigger database - let's try adding 100 users, and compare sizes.

$ ls -l users.db

-rw-r--r-- 1 amos staff 55 Oct 24 21:58 users.db

$ for i in $(seq 1 100); do ./target/debug/pass add-user user${i} foobar; done

User user1 added to database

User user2 added to database

User user3 added to database

(cut)

User user98 added to database

User user99 added to database

User user100 added to database

$ ls -l users.db

-rw-r--r-- 1 amos staff 610 Oct 24 22:00 users.db

Okay, that's pretty neat - the number of records increased 100x, and the database size only increased 11x.

Yeah, nice benchmark, genius - they all have the same password.

Too late, I'm being published as we speak.

But enough messing around. I recreated users.db with just the renny user,

and our auth subcommand works fine:

$ cargo run -q -- auth renny hunter2 Authentication successful!

Attacking the users database

How do we attack that database?

How do we, as Veronica puts it "recover the plaintext"?

Well, we can go old-school.

Just try all the passwords! One by one!

#[derive(FromArgs)]

#[argh(subcommand)]

enum Command {

AddUser(AddUser),

ListUsers(ListUsers),

Auth(Auth),

// new!

Bruteforce(Bruteforce),

}

#[derive(FromArgs)]

/// Try to brute-force user accounts

#[argh(subcommand, name = "bruteforce")]

struct Bruteforce {}

fn main() -> Result<(), Box<dyn Error>> {

let args: Args = argh::from_env();

match args.command {

// omitted: other variants

Command::Bruteforce(_) => bruteforce(),

}

}

We're going to over-engineer the heck out of this. We'll want a

Charset type, to represent the character set used to crack passwords,

ie. the set of all characters we think might be in the password:

struct Charset(Vec<u8>);

We'll want to be able to construct it from a string, for convenience:

impl<T> From<T> for Charset

where

T: AsRef<str>,

{

fn from(t: T) -> Self {

Self(t.as_ref().as_bytes().to_vec())

}

}

...and treat it as a byte slice:

impl AsRef<[u8]> for Charset {

fn as_ref(&self) -> &[u8] {

&self.0

}

}

...and have a custom Debug implementation, in case we have non-graphic characters in there:

use std::fmt;

impl fmt::Debug for Charset {

fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

for c in &self.0 {

let c = *c as char;

if c.is_ascii_graphic() {

write!(f, "{}", c)?;

} else {

write!(f, "\\x{:02x}", c as u64)?;

}

}

Ok(())

}

}

And then well.. we'd love our Charset type to be able to generate

all possible strings for a given character set and minimum/maximum

length.

So... we'll need an iterator. But we don't want the iterator's items to be

owned types, like Vec<u8> - because then we'd have to allocate for every

string we generate, and that would probably slow us down very quickly.

Have you measured that?

No? But no code is faster than some code?

...okay fine.

So we can't use the standard Iterator trait. But that's okay, we can

just roll with our own type!

Or use the streaming_iterator crate!

Yes, we could! But we spent all our budget on snap, sorry.

struct CharsetIterator<'a> {

/// Characters that can appear in the output

charset: &'a Charset,

/// Minimum and maximum length of the output

len_range: RangeInclusive<usize>,

/// Current length of the output

current_len: usize,

/// Current positions used in `charset` for the output

indices: Vec<usize>,

/// Current output

buf: Vec<u8>,

}

And then, the big one:

impl<'a> CharsetIterator<'a> {

fn next(&mut self) -> Option<&[u8]> {

if !self.len_range.contains(&self.current_len) {

return None;

}

// Generate current item slice

let charset = self.charset.as_ref();

// ...this better get inlined

for (index, slot) in self.indices.iter().copied().zip(self.buf.iter_mut()) {

*slot = charset[index];

}

let slice = &self.buf[..self.current_len];

// Generate next item indices

match (&self.indices[..self.current_len])

.iter()

.position(|&i| i < charset.len() - 1)

{

Some(i) => {

// one of the characters in the string is not the

// *last* character of the charset yet, so reset

// everything before it to the first character...

for j in 0..i {

self.indices[j] = 0;

}

// ...and increment it

self.indices[i] += 1;

}

None => {

// all characters are maxed out, our only option is

// to make the input string longer. We'll return `None`

// the next time `next` gets called if we reached our

// maximum length.

self.current_len += 1;

for slot in self.indices.iter_mut() {

*slot = 0;

}

}

}

Some(slice)

}

}

impl Charset {

fn iter<'a>(&'a self, len_range: RangeInclusive<usize>) -> CharsetIterator<'a> {

CharsetIterator {

charset: self,

len_range,

current_len: 0,

indices: vec![],

buf: Vec::new(),

}

}

}

Now, this is not the fastest way do to this. It's not even the smartest way to do this. But it works.

The rest is really simple: for each password candidate generated

via CharsetIterator, compute the MD5 hash, and check against the

database.

#[derive(Debug)]

struct BruteforceParams {

len_range: RangeInclusive<usize>,

charset: Charset,

}

fn bruteforce() -> Result<(), Box<dyn Error>> {

let params = BruteforceParams {

len_range: 4..=8,

charset: "abcdefghijklmnopqrstuvwxyz0123456789".into(),

};

println!("{:?}", params);

let records = Database::with(|db| Ok(db.records.clone()))?;

let start_time = Instant::now();

let mut candidates = params.charset.iter(params.len_range);

while let Some(pass) = candidates.next() {

let hash = md5::compute(pass);

for (db_user, db_hash) in &records {

if hash.as_ref() == db_hash {

println!(

"[CRACKED in {:?}] user ({}) has password ({})",

start_time.elapsed(),

db_user,

std::str::from_utf8(pass).unwrap_or("<not utf-8>")

);

}

}

}

Ok(())

}

AS IT TURNS OUT, this code is not the fastest. Not even a little.

So I've added some weaker passwords to the database just so we can make sure it works.

Let's try it out!

$ cargo build --release --quiet

$ ./target/release/pass bruteforce

BruteforceParams { len_range: 4..=8, charset: abcdefghijklmnopqrstuvwxyz0123456789 }

[CRACKED in 149.740418ms] user (keith) has password (mars)

[CRACKED in 3.994172005s] user (joe) has password (biden)

[CRACKED in 9.72343814s] user (bernie) has password (bird7)

[CRACKED in 10.487802794s] user (twentytwenty) has password (aaaaaa)

[CRACKED in 171.819211016s] user (dev) has password (foobar)

^C

aaaaand that's how much patience I have right now. Just under three minutes.

So there's several things we could address with our brute force implementation.

We're doing a lot of unnecessary in our CharsetIterator - for every output

we generate, we look up and set len_range.max() characters. Often, we'd

only need to change just a single character.

The fact that we're returning a borrowed slice is cool, but it makes it

awkward to parallelize. What if we wanted to use N threads instead of just

the one? We couldn't just hand out &'a [u8] to our worker threads, because

the CharsetIterator is re-using its internal buffer to write the next one.

Having each thread copy the &[u8] to their own buffer would still leave us

with synchronization problems. Not to mention, that's a copy we should be

able to avoid, since the source of truth here is CharsetIterator::indices.

Speaking of indices - why is it a Vec<usize>? The charset is not really

a character set... it's a byte set! All entries in Charset::0 should be

unique, and there should never be more than 256 entries, because there's only

256 distinct 8-bit values.

But you know - if your password was "biden", we'd still "pwn" you in four seconds. So there's that.

Generating hash tables

If we're cracking a lot of password database that use MD5 as their "cryptographic hash" (ie., if we're stuck between 1992 and 1996), then we probably want to pre-compute some hashes.

And we can do that!

But before we do - let's change up our CharsetIterator a little.

Here's an idea: instead of maintaining an indices vector, how about we use

a single number to refer to an input of a given length and charset?

For length 3 and charset "abc", we'd have:

- 0 => "aaa"

- 1 => "baa"

- 2 => "caa"

- 3 => "aba"

- 4 => "bba"

- 5 => "cba"

...and so on. We could make it an u64, which has 1.9e19 possible values.

For a full charset (all 255 bytes are fair game), there's only 1.8e19

passwords of length 8. For just [A-Za-z0-9], there's only 8.4e10 passwords

of length 10.

And I can guarantee you I don't have the patience to come up with an implementation that is fast enough for passwords longer than 7 characters today.

And if I did, well, we could always switch to an u128, which has 3.4e38

possible values.

So... stick to an u64?

Stick to an u64.

Our strategy is going to be much simpler here.

impl Charset {

fn range(&self, output_len: u32) -> Range<u64> {

0..(self.0.len() as u64).pow(output_len)

}

fn get_into(&self, i: u64, buf: &mut [u8]) {

let n = self.0.len() as u64;

let mut remain = i;

for slot in buf.iter_mut() {

let modulo = remain % n;

*slot = self.0[modulo as usize];

remain = (remain - modulo) / n;

}

}

}

..and then we can forget about CharsetIterator completely.

Here's how we can use it:

fn bruteforce() -> Result<(), Box<dyn Error>> {

let params = BruteforceParams {

len_range: 4..=8,

charset: "abcdefghijklmnopqrstuvwxyz0123456789".into(),

};

println!("{:?}", params);

let records = Database::with(|db| Ok(db.records.clone()))?;

let start_time = Instant::now();

for len in params.len_range {

let mut buf = vec![0u8; len];

for i in params.charset.range(buf.len() as _) {

params.charset.get_into(i, &mut buf);

let hash = md5::compute(&buf);

for (db_user, db_hash) in &records {

if hash.as_ref() == db_hash {

println!(

"[CRACKED in {:?}] user ({}) has password ({})",

start_time.elapsed(),

db_user,

std::str::from_utf8(&buf).unwrap_or("<not utf-8>")

);

}

}

}

}

Ok(())

}

Let's give it a whirl:

$ ./target/release/pass bruteforce

BruteforceParams { len_range: 4..=8, charset: abcdefghijklmnopqrstuvwxyz0123456789 }

[CRACKED in 172.104729ms] user (keith) has password (mars)

[CRACKED in 4.863978521s] user (joe) has password (biden)

[CRACKED in 11.755943184s] user (bernie) has password (bird7)

[CRACKED in 12.761011938s] user (twentytwenty) has password (aaaaaa)

^C

It still works, hurray!

It's... slightly slower though. Integer division doesn't come for free!

But you know what we can do here that's sorta neat? We can parallelize that shit. Hell yeah. Someone get rayon on the phone.

Ring ring motherf*cker. We've got some cores for you.

$ cargo add rayon

Adding rayon v1.5.0 to dependencies

use rayon::prelude::*;

fn bruteforce() -> Result<(), Box<dyn Error>> {

let params = BruteforceParams {

len_range: 4..=8,

charset: "abcdefghijklmnopqrstuvwxyz0123456789".into(),

};

println!("{:?}", params);

let records = Database::with(|db| Ok(db.records.clone()))?;

let start_time = Instant::now();

for len in params.len_range.clone() {

params

.charset

.range(len as _)

.into_par_iter()

.for_each_with(vec![0u8; len], |mut buf, i| {

params.charset.get_into(i, &mut buf);

let hash = md5::compute(&buf);

for (db_user, db_hash) in &records {

if hash.as_ref() == db_hash {

println!(

"[CRACKED in {:?}] user ({}) has password ({})",

start_time.elapsed(),

db_user,

std::str::from_utf8(&buf).unwrap_or("<not utf-8>")

);

}

}

})

}

Ok(())

}

Let it all burn, baby. Make those fans roar.

$ cargo build --release && ./target/release/pass bruteforce

Compiling pass v0.1.0 (/Users/amos/ftl/pass)

Finished release [optimized] target(s) in 1.17s

BruteforceParams { len_range: 4..=8, charset: abcdefghijklmnopqrstuvwxyz0123456789 }

[CRACKED in 8.082686ms] user (keith) has password (mars)

[CRACKED in 751.047863ms] user (joe) has password (biden)

[CRACKED in 1.231836105s] user (bernie) has password (bird7)

[CRACKED in 1.302122549s] user (twentytwenty) has password (aaaaaa)

[CRACKED in 54.672641246s] user (dev) has password (foobar)

^C

There. That's more like it. Why do we care? Well, because we're going to be computing tables! Like... the MD5 hashes of all passwords of length 6.

And well.. that's a lot of hashes.

So we might as well do it in parallel.

And now we have to think: what's the best way to compute and store 2.1 billion hashes?

bincode is fast, sure, but do we really need it? Do we really want to pay

the cost of serialization for every single hash?

If you think about it... 2.1 billion hashes is just 2.1 billion [u8; 16].

It's just one big &[[u8; 16]].

In my early experiments, I've learned one thing: it's that just mapping a 32GB file and letting 16 threads write to it is... not a good time.

What we want here is to split our workload into chunks, and sequentially process each of these chunks... with rayon, so it can split chunks even further and let different CPU cores handle them.

Let's go:

$ cargo add memmap indicatif

Adding memmap v0.7.0 to dependencies

Adding indicatif v0.15.0 to dependencies

#[derive(FromArgs)]

#[argh(subcommand)]

enum Command {

// omitted: other variants

GenHtable(GenHtable),

}

#[derive(FromArgs)]

/// Generate a hash table

#[argh(subcommand, name = "gen-htable")]

struct GenHtable {}

fn main() -> Result<(), Box<dyn Error>> {

let args: Args = argh::from_env();

match args.command {

// omitted: other variants

Command::GenHtable(_) => gen_htable(),

}

}

Just before we store 2.1 billion hashes, we're probably going to write a "table header" - that specifies what length and character set the table is for, which will help when we actually want to, y'know, use it.

For that we can use bincode - we only have the one header:

#[derive(Serialize, Deserialize)]

struct TableHeader {

len: u32,

charset: Vec<u8>,

}

And then... it's time to burn our CPU cores a little more:

const HASH_LENGTH: usize = 16;

fn progress_style() -> ProgressStyle {

ProgressStyle::default_bar()

.template("[{elapsed_precise}] [{bar:40.blue}] ({eta_precise} left)")

.progress_chars("#>-")

}

fn gen_htable() -> Result<(), Box<dyn Error>> {

let item_len = 6;

let charset: Charset = "abcdefghijklmnopqrstuvwxyz0123456789".into();

let total_hashes = charset.range(item_len).end;

println!(

"Generating {} hashes — for all items of length {}, with characters {:?}",

total_hashes, item_len, charset

);

let progress = ProgressBar::new(total_hashes).with_style(progress_style());

progress.enable_steady_tick(250);

// Write the header and pre-size the file

let hashes_offset_in_file = {

let mut file = File::create("table.db")?;

bincode::serialize_into(

&mut file,

&TableHeader {

len: item_len,

charset: charset.0.to_vec(),

},

)?;

let hashes_offset_in_file = file.seek(SeekFrom::Current(0))?;

let hashes_len = total_hashes * HASH_LENGTH as u64;

let file_len = hashes_offset_in_file + hashes_len;

file.set_len(file_len)?;

hashes_offset_in_file

};

let max_bytes_per_chunk = {

let gb: u64 = 1024 * 1024 * 1024;

// Picked to keep memory usage low-enough and flush to disk often-enough

2 * gb

};

let hashes_per_chunk = max_bytes_per_chunk / HASH_LENGTH as u64;

let bytes_per_chunk = hashes_per_chunk * HASH_LENGTH as u64;

let num_chunks = total_hashes / hashes_per_chunk;

// For each chunk, one by one...

for chunk_index in 0..num_chunks {

// Show progress

let hashes_done = chunk_index * hashes_per_chunk;

progress.set_position(hashes_done);

let file = OpenOptions::new().read(true).write(true).open("table.db")?;

let chunk_offset_in_file = hashes_offset_in_file + chunk_index * bytes_per_chunk;

let mut file = unsafe {

MmapOptions::new()

.offset(chunk_offset_in_file)

.len(bytes_per_chunk as _)

.map_mut(&file)

}?;

// Map `hashes_per_chunk` hashes into memory, so we can write to the file

let hashes = unsafe {

std::slice::from_raw_parts_mut(

file.as_mut_ptr() as *mut [u8; HASH_LENGTH],

hashes_per_chunk as _,

)

};

// In the collection of "all outputs of this charset", this is

// where our chunk starts.

let first_item_index = chunk_index * hashes_per_chunk;

// Enumerate gives us the position within the chunk.

hashes.par_iter_mut().enumerate().for_each_with(

vec![0u8; item_len as usize],

|buf, (index_in_chunk, out)| {

let item_index = first_item_index + index_in_chunk as u64;

// Generate the candidate password

charset.get_into(item_index, buf);

// Hash it and store it to the file.

*out = md5::compute(buf).0;

},

);

}

progress.finish();

Ok(())

}

Now look at it go:

$ cargo build --release && ./target/release/pass gen-htable

Finished release [optimized + debuginfo] target(s) in 0.03s

Generating 2176782336 hashes — for all items of length 6, with characters abcdefghijklmnopqrstuvwxyz0123456789

[00:00:14] [#######>--------------------------------] (00:00:53 left)

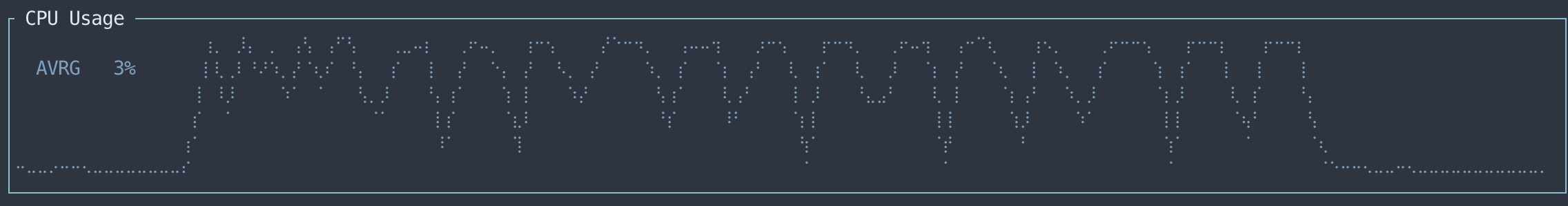

On my machine, it takes just over a minute to complete - that's about 30 million hashes per second.

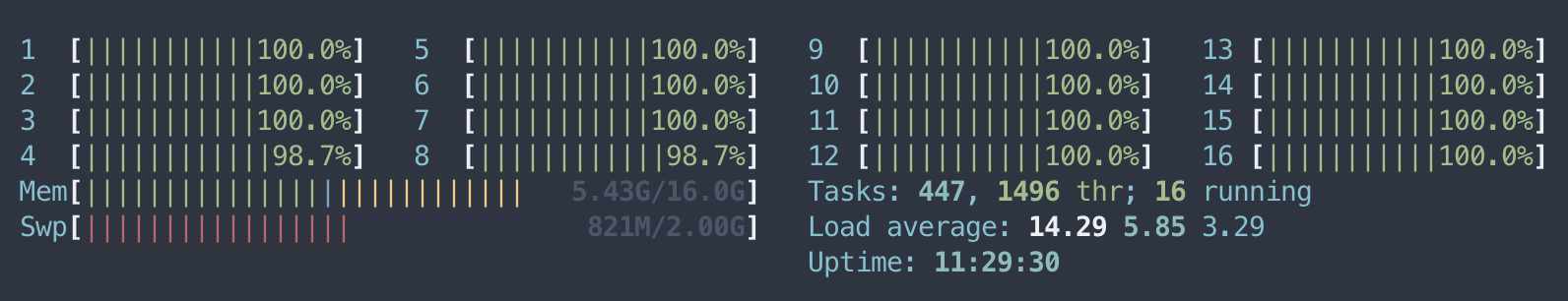

CPU utilization is not optimal: there's dips between each chunk, where all the threads are stopped and started again, and presumably some I/O flushing is happening:

There's definitely some extra performance to be squeezed out of this, but it's going to work for our use case.

The resulting file is 32G, as expected:

$ ls -lhA table.db -rw-r--r-- 1 amos staff 32G Oct 27 13:26 table.db

Now it's time.. to use it!

#[derive(FromArgs)]

#[argh(subcommand)]

enum Command {

// omitted: other variantsj0

UseHtable(UseHtable),

}

#[derive(FromArgs)]

/// Use a hash table

#[argh(subcommand, name = "use-htable")]

struct UseHtable {}

fn main() -> Result<(), Box<dyn Error>> {

let args: Args = argh::from_env();

match args.command {

// omitted: other variants

Command::UseHtable(_) => use_htable(),

}

}

Now for the actual use_htable function:

fn use_htable() -> Result<(), Box<dyn Error>> {

let (header, hashes_offset_in_file) = {

let mut file = File::open("table.db")?;

let header: TableHeader = bincode::deserialize_from(&mut file)?;

let offset = file.seek(SeekFrom::Current(0))?;

(header, offset)

};

let charset = Charset(header.charset);

let num_hashes = charset.range(header.len).end;

let file = File::open("table.db")?;

let file = unsafe { MmapOptions::new().offset(hashes_offset_in_file).map(&file) }?;

let hashes = unsafe {

std::slice::from_raw_parts(

file.as_ptr() as *const [u8; HASH_LENGTH],

num_hashes as usize,

)

};

let records = Database::with(|f| Ok(f.records.clone()))?;

let start_time = Instant::now();

hashes.par_iter().enumerate().for_each_with(

vec![0u8; header.len as usize],

|buf, (item_index, hash)| {

for (db_user, db_hash) in &records {

if db_hash == hash {

charset.get_into(item_index as _, buf);

println!(

"[CRACKED in {:?}] user {} has password {}",

start_time.elapsed(),

db_user,

std::str::from_utf8(buf).unwrap_or("<not utf-8>")

);

}

}

},

);

println!("Spent {:?} going through whole table", start_time.elapsed());

Ok(())

}

$ cargo build --release && ./target/release/pass use-htable

Compiling pass v0.1.0 (/Users/amos/ftl/pass)

Finished release [optimized + debuginfo] target(s) in 3.72s

[CRACKED in 510.335µs] user twentytwenty has password aaaaaa

[CRACKED in 8.918158884s] user alpha has password abcdef

[CRACKED in 23.690443816s] user dev has password foobar

Spent 33.318824641s going through whole table

We were able to crack all three six-character passwords in just 23 seconds!

Compare this with the brute-force method, with which it took us 171 seconds

to find dev's password.

So, it's better. But we stopped at 6-character passwords with only

[a-z0-9], and our hash table is already 32 gigabytes. What if we had

7-character passwords? We can make a back-of-the-envelope calculation.

For 7-character passwords with [a-z0-9] (36 unique characters), we have

36**7 combinations - just shy of 79 billion.

79 billion times 16 bytes is... 1.25 terabytes.

Wooo laddy. That's not gonna fit on your SSD.

No it's not.

Which is what, 46 minutes into this article, brings us to rainbow tables.

What's a rainbow table?

What we've just generated is an exhaustive hash table. First off, all our inputs have an "index". From that index, we can generate the plaintext, and from that plaintext we can generate the hash.

When we use our table, since we know the position at which the hash is stored, we also know which plaintext candidate it corresponds to. That's why we didn't need to store the plaintext - or the index for that matter. The order of the hashes in the database is all we need.

But, as we've seen, exhaustive hash tables get large quickly, because we're storing all possible hashes.

The whole idea behind hash tables is to only store a fraction of all hashes, in exchange for doing a bit more computation.

To start with, we generate a random plaintext. Really, any plaintext at all. And we compute its hash. And those are the first two nodes of our chain:

Then — and here's the tricky bit — we need a family of functions that, given a ciphertext (an MD5 hash, here), generate another plaintext.

We'll name those functions R1, R2, R3, etc. The R stands for "reduction":

we reduce from the "hash space" to the "plaintext space", which is smaller.

What do those functions look like?

We'll come back to it later, but they basically are "using the bits of the ciphertext, pick values from the charset to generate another plaintext".

Now that our chain ends with a plaintext again, we use the hash function again (MD5) to generate the next node:

And then we use the R2 function to generate the next plain text, and so on and so forth, until we reach the desired length for a chain. And then we generate a lot more chains:

And it's called rainbow tables because, conceptually, each chain has its own unique color:

It's important to note that the functions R1, R2, R3 need to all be

distinct from one another, ie. we should have R1(x) != R2(x).

Now, let's say we stored all the nodes of all our chains in a database.

It would be pretty easy to use, right? For each chain, consider nodes two by

two: we get tuples of (plaintext, hash). If the hash is the one we're trying

to crack, boom, we're done.

But then it would be the same as an exhaustive hash table.

Just... more random.

And bigger, since we'd store the plaintexts as well.

So clearly that's not the trick.

No it's not!

Here's the trick: if our rainbow table is "complete enough", the hash we're trying to reverse is somewhere in one of the chains - we just need to figure out in which chain (which row), and at which position in the chain (which column).

And we can do that by only storing the first column (starting plaintexts) and the last column (final hashes).

How do we do that? We make assumptions!

The easy case

First, we assume that the hash we're looking for is in the last column.

So we compare our hash against the last node of all the chains.

If we're cracking 8ae101... for example, well, it's not in the first chain

(which ends in ddc510...), it's not in the second chain (which ends in

cd7edc...), but it is in the third chain.

Now, there's just one problem... we can't navigate chains from right to left.

There's only arrows going from left to right, because we only have functions

going from left to right: from plaintext to ciphertext (the MD5) function,

and from ciphertext to another plaintext, our R family of functions.

So we can't go from 8ae101... from career by moving "one to the left".

But.. since we have the last and the first column... we can start from

the first column, and use our H and R functions to move to the right, until

we reach the column we want:

Tada! The plaintext we were looking for was career.

The less-easy case

But that was the easy case - what about the case where the hash we're looking for is not in the last column?

Say we're looking for d487dd...: it's not in the last column of any of the

chains:

In this case, we move on to another assumption: we just assume it's in the previous ciphertext column.

...but here's the thing: we don't have that column. We're only storing the first (plaintext) column, and the last (ciphertext) column:

So what do we do here? We could start from the leftmost column and work our way towards the column we're interested in — but that would be equivalent to re-computing the whole rainbow table!

What we do instead is move the node we're searching for to the right.

So, instead of looking for d487dd... in that column, we apply R2 to find

the next node:

And then again, until we've reached the last column - the one we have.

And then we compare with the last nodes from all the chains and.. oh look! It's the red chain!

Now that we know in which chain it is, we start from the beginning (leftmost node) of the chain, and move right until we reach the column we want:

And there we have it. The plaintext was yellow.

That is cool.

Yeah! It's pretty neat!

So the worst case scenario is if our plaintext is in the first column, right?

Right - because we try every column starting from the right. So the first column is actually the last one we check.

One thing that is really neat with Rainbow tables, is that it works for any hashing algorithm. We've been going with MD5 for this example, but it does not matter what the algorithm is, as rainbow tables don't exploit any particular weaknesses — they're just a space-saving trick.

Implementing rainbow tables

$ cargo add rand

Adding rand v0.7.3 to dependencies

I'm going to skip over adding subcommands for the executable, because, well, you know the drill.

Just as before, we're going to have a "table header". We need a couple more

fields: chain length is how many (plaintext, hash) pairs we have in a

chain, and total_chains is the number of chains.

#[derive(Serialize, Deserialize)]

struct RainbowTableHeader {

item_len: u32,

charset: Vec<u8>,

chain_length: usize,

total_chains: u64,

}

We're going to use the same "map a file as one big slice" trick as we did when

we generated an exhaustive hash table, only elements are going to be of type

RainbowTuple instead of [u8; 16].

const MAX_PLAINTEXT_LEN: usize = 16;

/// When stored in a rainbow table file: the first plaintext (start of a chain)

/// and the last hash (end of a chain). Also used as temporary values to

/// represent a single node of a chain.

#[repr(C)]

#[derive(Default, Clone)]

struct RainbowTuple {

plaintext: [u8; MAX_PLAINTEXT_LEN],

hash: [u8; HASH_LENGTH],

}

Why is it repr(C)?

This tells the compiler not to re-order fields. It probably doesn't matter much here since both fields are 16 bytes, but since we're mapping memory directly, I'd rather be sure.

Since we're going to deal with big numbers, let's throw in a utility routine to format them for humans:

fn format_num<N: TryInto<u64, Error = E> + fmt::Display + fmt::Debug, E: fmt::Debug>(

num: N,

) -> String {

let num = num.try_into().unwrap();

let mut num = num as f64;

let mut suffix = "";

if num > 10000.0 {

num /= 1000.0;

suffix = " thousand";

if num > 1000.0 {

num /= 1000.0;

suffix = " million";

if num > 1000.0 {

num /= 1000.0;

suffix = " billion";

}

}

}

match suffix {

"" => format!("{}", num),

_ => format!("{:.2}{}", num, suffix),

}

}

Why not use something like human_format?

Didn't feel like it!

Let's look at the actual rainbow table generation code. The general idea is fairly simple. Just as described in the paper, we generate a set of initial plaintexts, and for each of them, generate our full rainbow chains and only save the first plaintext and the last hash.

fn gen_rtable() -> Result<(), Box<dyn Error>> {

// Parameters, found through experimentation

let chain_length = 2400;

let total_chains = 2_000_000;

let item_len: usize = 6;

// Same character set as before

let charset: Charset = "abcdefghijklmnopqrstuvwxyz0123456789".into();

let hashes_in_table = chain_length as u64 * total_chains;

println!("Chain length: {}", format_num(chain_length));

println!("Total chains: {}", format_num(total_chains));

println!("Total hashes in table: {}", format_num(hashes_in_table));

let header = RainbowTableHeader {

item_len: item_len as _,

chain_length,

total_chains,

charset: charset.0.clone(),

};

create_rainbow_file(&header, |tuples| {

let n = AtomicU64::new(0);

// For debugging purposes: while generating, we're going to see how many

// times each of our user password hashes appear in our rainbow table.

let records = Database::with(|db| Ok(db.records.clone()))?;

generate_initial_plaintexts(tuples, &charset, item_len);

let progress = ProgressBar::new(total_chains).with_style(progress_style());

progress.enable_steady_tick(250);

tuples.par_iter_mut().for_each(|tuple| {

generate_rainbow_chain(tuple, &header, &charset, &records, &progress, &n);

});

progress.finish();

Ok(())

})

}

A create_rainbow_file function? Someone's been refactoring!

Oh yeah that part's neat, let me show you.

const RAINBOW_TABLE_FILE_NAME: &str = "rtable.db";

fn create_rainbow_file<F, T>(header: &RainbowTableHeader, f: F) -> Result<T, Box<dyn Error>>

where

F: FnOnce(&mut [RainbowTuple]) -> Result<T, Box<dyn Error>>,

{

let tuples_len = header.total_chains * std::mem::size_of::<RainbowTuple>() as u64;

// Write the header and remember the offset where actual tuples start

let tuples_offset_in_file = {

let mut file = File::create(RAINBOW_TABLE_FILE_NAME)?;

bincode::serialize_into(&mut file, &header)?;

let tuples_offset_in_file = file.seek(SeekFrom::Current(0))?;

let file_len = tuples_offset_in_file + tuples_len;

file.set_len(file_len)?;

tuples_offset_in_file

};

let file = OpenOptions::new()

.read(true)

.write(true)

.open(RAINBOW_TABLE_FILE_NAME)?;

let mut mapping = unsafe {

MmapOptions::new()

.offset(tuples_offset_in_file)

.len(tuples_len as usize)

.map_mut(&file)?

};

let tuples = unsafe {

std::slice::from_raw_parts_mut(

mapping.as_mut_ptr() as *mut RainbowTuple,

header.total_chains as _,

)

};

f(tuples)

}

Ohhh it takes care of all the dirty I/O (input/output) details, I see.

But why take a closure / a callback / an FnOnce, instead of returning

the slice?

Well, we have to reason about types.

Sure.

What type would we return exactly?

Mhhh we're writing to it, so... &mut [RainbowTuple]?

Correct! A mutable borrow. What's the lifetime of that borrow?

Uhhh...

Said differently: what does it borrow from?

Ah! From the MmapMut I guess?

Correct! But the compiler doesn't know that... so what would happen

if we returned only the slice, and dropped the MmapMut?

Oh, we'd have a dangling pointer!

Right!

Couldn't we just return both of them?

We could! But then we could still accidentally drop the mapping:

let (slice, mapping) = create_rainbow_file(); drop(mapping); // woops! slice[0] = foobar;

Mhhhh. Couldn't we... return both of them? Like.. a struct that has both the

borrowee and the borrower, and that implements DerefMut<Target = [RainbowTuple]>, and and and

Yeah we could! Or we could just take a function. So that it's only possible to access the slice while the memory mapping is valid.

Okay yeah, that's simpler.

Next up, how do we generate the initial plaintexts? Well, Charset::get_into

allows us to map an u64 to a plaintext of size item_len with our given

character set. And the rand crate allows us to generate an arbitrary

u64. So...

fn generate_initial_plaintexts(tuples: &mut [RainbowTuple], charset: &Charset, item_len: usize) {

println!("Generating initial plaintexts sequentially, from single RNG");

{

let mut set = HashSet::new();

let start_time = Instant::now();

let mut rng = rand::thread_rng();

let max_plaintext_pos = charset.range(item_len as _).end;

for tuple in tuples.iter_mut() {

// try a few times to get a unique hash

for _ in 0..5 {

charset.get_into(

rng.gen_range(0, max_plaintext_pos),

&mut tuple.plaintext[..item_len],

);

if set.insert((&tuple.plaintext[..item_len]).to_vec()) {

// was unique, we can stop trying

break;

}

}

}

let duration = start_time.elapsed();

println!(

"Generated {} unique plaintexts in {:?} ({} per second)",

tuples.len(),

duration,

format_num((tuples.len() as f64 / duration.as_secs_f64()) as u64),

);

}

}

We spend some extra computation time here to make sure we generate unique starting plaintexts. If we didn't, we'd have duplicate chains in our rainbow table, which would be a complete waste of space and time.

Given those initial plaintexts, we generate every chain, in parallel. Here's what generating a single chain looks like:

#[inline(always)]

fn generate_rainbow_chain(

tuple: &mut RainbowTuple,

header: &RainbowTableHeader,

charset: &Charset,

records: &HashMap<String, Vec<u8>>,

progress: &ProgressBar,

n: &AtomicU64,

) {

let item_len = header.item_len as usize;

// Move to the end of the chain, hop by hop

let mut tmp: RainbowTuple = tuple.clone();

// Apply H

tmp.hash = md5::compute(&tmp.plaintext[..item_len]).0;

for column_index in 0..(header.chain_length - 1) {

// For debugging:

for db_hash in records.values() {

if db_hash == tmp.hash.as_ref() {

progress.println(format!(

"{:?} is in rainbow table (at position {}/{} in a chain)",

std::str::from_utf8(&tmp.plaintext[..item_len]).unwrap_or("<non utf-8>"),

column_index,

header.chain_length

));

}

}

// Apply Ri

next_plaintext(column_index, &charset, item_len, &mut tmp);

// Apply H

tmp.hash = md5::compute(&tmp.plaintext[..item_len]).0;

}

// The stored tuple contains the first plaintext and the last hash

tuple.hash = tmp.hash;

// Progress update

let m = n.fetch_add(1, Ordering::Relaxed);

if m % 100_000 == 0 {

progress.set_position(m);

}

}

We only update progress for every 100K chains we generate, to avoid spending a lot of time on synchronizing the progress bar (it's full of locks).

Which brings us to next_plaintext, our family of reduction functions. What

should they look like? Well, I didn't look at other implementations of rainbow

tables: instead I just went with something that looked reasonable.

Our requirements are:

- If the hash is different, we'll generate a different plaintext

- If the column is different, we'll generate a different plaintext

And you know... we can fill both those requirements pretty easily, by just

making up an u64 from the first half of the hash, adding it with

column_index, constraining it to the 0..(charset.0.len().pow(item_len))

range, and using Charset::get_into.

But then we run into modulo bias.

Say you have a random number generator function, r(x) that generates

numbers between 0 and 100.

But what you really want is a number between 0 and 40. You could do r(x) % 40. Problem solved, right?

Wrong!

And you know, I apologize, I don't have any hard data for you — but when I

tried really-naive versions of next_plaintext, plaintext "aaaaaa" showed

up a lot more than anything else.

So, this is my attempt at escaping modulo bias: by keeping track of the bits we don't use it, and feeding them into the next stage... it seems to be better.

I might be wrong. Please write an hour-long article on how I'm wrong, if I'm wrong.

[inline(always)]

fn next_plaintext(

column_index: usize,

charset: &Charset,

item_len: usize,

column: &mut RainbowTuple,

) {

assert!(item_len < HASH_LENGTH);

let chars = &charset.0[..];

let max_char = chars.len();

let mut hash_rest: usize = 0;

let mut charset_rest: usize = 0;

for plaintext_pos in 0..item_len {

let hash_index = (column_index + plaintext_pos) + hash_rest;

hash_rest = hash_index / HASH_LENGTH;

let hash_index = hash_index % HASH_LENGTH;

let charset_index = column.hash[hash_index] as usize + charset_rest;

charset_rest = charset_index / max_char;

let charset_index = charset_index % max_char;

column.plaintext[plaintext_pos] = chars[charset_index];

}

}

Okay, that's it for rainbow table generation!

Let's run it:

$ cargo build --release && ./target/release/pass gen-rtable

Compiling pass v0.1.0 (/Users/amos/ftl/pass)

Finished release [optimized + debuginfo] target(s) in 3.74s

Chain length: 2400

Total chains: 2.00 million

Total hashes in table: 4.80 billion

Generating initial plaintexts sequentially, from single RNG

Generated 2000000 unique plaintexts in 883.633174ms (2.26 million per second)

"abcdef" is in rainbow table (at position 1188/2400 in a chain)

"aaaaaa" is in rainbow table (at position 56/2400 in a chain)

"aaaaaa" is in rainbow table (at position 1192/2400 in a chain)

"abcdef" is in rainbow table (at position 185/2400 in a chain)

"abcdef" is in rainbow table (at position 1485/2400 in a chain)

"abcdef" is in rainbow table (at position 1485/2400 in a chain)

"foobar" is in rainbow table (at position 2241/2400 in a chain)

"abcdef" is in rainbow table (at position 185/2400 in a chain)

"abcdef" is in rainbow table (at position 1485/2400 in a chain)

"abcdef" is in rainbow table (at position 1485/2400 in a chain)

[00:01:44] [########################################] (00:00:00 left)

Woo, all our six-letter passwords are in there. That's good. But hey, we generated 4.8 billion hashes... how much space does it take on disk?

$ ls -lhA rtable.db -rw-r--r-- 1 amos staff 61M Oct 28 11:31 rtable.db

61 megabytes. A far cry from our initial 32 gigabytes. It's worth noting that we got lucky, though.

You mean... you re-ran the command until it included all three plaintexts we knew we were looking for?

...maybe?

Point is — rainbow tables are probabilistic. There's a chance that the hash we're looking for is somewhere in the table, but it's not 100%.

So now, how do we use that rainbow tables? Just like we said in the pretty diagrams, except with code instead. Easy. Easy! I definitely didn't struggle with this. Nope. Not me.

First, for convenience, we're going to want to group some parameters together in a struct:

struct FindChainContext<'a> {

start_time: Instant,

progress: &'a ProgressBar,

header: &'a RainbowTableHeader,

charset: &'a Charset,

}

Similar to write_rainbow_file, we're going to make a read_rainbow_file

function that gives us both the header, and an immutable slice of rainbow

tuples:

fn read_rainbow_file<F, T>(f: F) -> Result<T, Box<dyn Error>>

where

F: FnOnce(RainbowTableHeader, &[RainbowTuple]) -> Result<T, Box<dyn Error>>,

{

let (header, tuples_offset_in_file) = {

let mut file = File::open("rtable.db")?;

let header: RainbowTableHeader = bincode::deserialize_from(&mut file)?;

let offset = file.seek(SeekFrom::Current(0))?;

(header, offset)

};

let file = File::open("rtable.db")?;

let file = unsafe {

MmapOptions::new()

.offset(tuples_offset_in_file)

.map(&file)?

};

let tuples = unsafe {

std::slice::from_raw_parts(

file.as_ptr() as *const RainbowTuple,

header.total_chains as _,

)

};

f(header, tuples)

}

From there... we have to think about what we want to parallelize. We have millions of chains, and only a handful of hashes to crack, so I think (and I measured that) it makes sense to try to crack all the hashes concurrently, and for each "assumed column", to check against all the chains in parallel.

At first I tried to parallelize over "assumed columns" rather than "chains", and it was, actually, much slower.

So, we're going to have to jump through some hoops to "crack hashes concurrently". We're going to start by assuming they're all in the last column, and look that up in the rainbow table. Then we're going to assume they're all in the second-to-last column. And so on.

So, unlike in our nice diagrammy explanation, we're not dealing with a single hash, but with a collection of hashes - we call them "queries".

Here's our use_rtable:

fn use_rtable() -> Result<(), Box<dyn Error>> {

let start_time = Instant::now();

read_rainbow_file(|header, tuples| {

let item_len = header.item_len as usize;

let charset = Charset(header.charset.clone());

println!(

"Loading {} chains of len {} for {}-len plaintexts with charset {:?}",

format_num(header.total_chains),

format_num(header.chain_length),

header.item_len,

charset

);

let records = Database::with(|db| Ok(db.records.clone()))?;

let chain_length = header.chain_length;

// Process all "passwords we want to crack" in parallel. We store the

// username, the hash as stored in the users db (we'll assume it's in

// different columns every time), and a `RainbowTuple` we're going to

// "move to the right" by the distance between the "assumed column" and

// the "last column".

let mut queries_initial: Vec<_> = records

.iter()

.map(|(db_user, db_hash)| {

let hash: [u8; HASH_LENGTH] = (&db_hash[..]).try_into().unwrap();

(

db_user,

hash,

RainbowTuple {

plaintext: Default::default(),

hash,

},

)

})

.collect();

// Whenever we crack a password, we stop trying to crack it. It's `Arc`

// because each worker thread needs a reference, and it's `Mutex`

// because, when we do crack a pasword, we end up mutating it. Lock

// contention should be very low though - we do millions of iterations

// before cracking a single password.

let found: Arc<Mutex<HashSet<&str>>> = Arc::new(Mutex::new(HashSet::new()));

let progress = ProgressBar::new(chain_length as u64).with_style(progress_style());

progress.enable_steady_tick(250);

let ctx = FindChainContext {

start_time,

progress: &progress,

header: &header,

charset: &charset,

};

for assumed_column in (0..chain_length).into_iter().rev() {

progress.set_position((chain_length - assumed_column) as u64);

// We're assuming the hashes we're looking for are in `assumed_column`. To

// find a chain match, we need to apply `Ri` and `H` repeatedly, to move

// them to the right, until they're in the last column - at the end

// of the chain, for which we have a hash in the rainbow file.

let mut queries = queries_initial.clone();

for column_index in assumed_column..(chain_length - 1) {

for (_, _, query_column) in queries.iter_mut() {

// Apply Ri

next_plaintext(column_index, &charset, item_len, query_column);

// Apply H

query_column.hash = md5::compute(&query_column.plaintext[..item_len]).0;

}

}

tuples.par_iter().enumerate().for_each_with(

found.clone(),

|found, (chain_index, chain_tuple)| {

ctx.find_chain_match(found, &queries, assumed_column, chain_index, chain_tuple)

},

);

// Exclude the passwords we've already cracked from `queries_initial`

{

let found = found.lock().unwrap();

queries_initial = queries_initial

.into_iter()

.filter(|(k, _, _)| !found.contains::<str>(k.as_ref()))

.collect();

}

}

progress.finish();

Ok(())

})

}

In there is the code to "move" from the assumed column to the last column,

and then we still have to compare that to all the chains in our rainbow

file, which is what FindChainContext::find_chain_match does.

And, look! It comes with ASCII diagrams!

impl<'a> FindChainContext<'a> {

#[inline(always)]

fn find_chain_match(

&self,

found: &mut Arc<Mutex<HashSet<&'a str>>>,

queries: &[(&'a String, [u8; HASH_LENGTH], RainbowTuple)],

assumed_column: usize,

chain_index: usize,

chain_tuple: &RainbowTuple,

) {

let item_len = self.header.item_len as usize;

/*

* If everything goes fine, we have:

*

* /-----------------------|-------------------------------|----------------------\

* | first column | assumed column tuple | last column |

* |-----------------------|-------------------------------|----------------------|

* | | query_hash (from users.db) | |

* | | || \------------------- |-> query_moved_right |

* | | || | |

* | table_tuple.plaintext | || | table_tuple.hash |

* | \----------|-> query_from_left | |

* \-----------------------|-------------------------------|----------------------/

*/

for (username, query_hash, query_moved_right) in queries.iter() {

// Is `query_hash` in a chain that ends with the same hash as `chain_tuple`

// of length `chain_length - assumed_column`?

if query_moved_right.hash != chain_tuple.hash {

// No.

continue;

}

// Rebuild queried hash starting from the left column (first plaintext in chain)

// to recover the plaintext.

let mut query_from_left = chain_tuple.clone();

// Apply H - this "completes" the 0th column.

query_from_left.hash = md5::compute(&query_from_left.plaintext[..item_len]).0;

for column_index in 0..assumed_column {

// Apply Ri

next_plaintext(column_index, self.charset, item_len, &mut query_from_left);

// Apply H

query_from_left.hash = md5::compute(&query_from_left.plaintext[..item_len]).0;

}

if &query_from_left.hash != query_hash {

// If the rebuilt hash is not equal to the initial hash, the

// query_hash was in a different chain that merged with the one

// stored in the rainbow table.

//

// This happens all the time. `query_from_left.plaintext` is not

// what we're looking for.

continue;

}

{

// If this is the first time we crack this password (it may be in

// multiple chains stored in the rainbow table)...

let mut found = found.lock().unwrap();

if found.insert(username) {

// ...then print it out.

self.progress.println(format!(

"[CRACKED in {:?}] user '{}' has password '{}' (found in chain {} at {}/{})",

self.start_time.elapsed(),

username,

std::str::from_utf8(&query_from_left.plaintext[..item_len]).unwrap_or("<non utf-8>"),

chain_index,

assumed_column,

self.header.chain_length,

));

}

}

}

}

}

Well, there it is. It's showtime.

We've optimized some things since we last ran bruteforce, so let's run it again:

$ cargo build --release && ./target/release/pass bruteforce

Compiling pass v0.1.0 (/Users/amos/ftl/pass)

Finished release [optimized + debuginfo] target(s) in 3.46s

BruteforceParams { len_range: 4..=8, charset: abcdefghijklmnopqrstuvwxyz0123456789 }

[CRACKED in 1.181187301s] user (twentytwenty) has password (aaaaaa)

[CRACKED in 14.501821615s] user (alpha) has password (abcdef)

[CRACKED in 29.108262954s] user (dev) has password (foobar)

And let's run use-htable again:

$ cargo build --release && ./target/release/pass use-htable

Finished release [optimized + debuginfo] target(s) in 0.04s

[CRACKED in 410.505µs] user twentytwenty has password aaaaaa

[CRACKED in 10.731340801s] user alpha has password abcdef

[CRACKED in 17.523243879s] user dev has password foobar

And fianlly, use-rtable:

$ cargo build --release && ./target/release/pass use-rtable

Finished release [optimized + debuginfo] target(s) in 0.16s

Loading 2.00 million chains of len 2400 for 6-len plaintexts with charset abcdefghijklmnopqrstuvwxyz0123456789

[CRACKED in 426.636558ms] user 'dev' has password 'foobar' (found in chain 1826222 at 2241/2400)

[CRACKED in 2.492776857s] user 'alpha' has password 'abcdef' (found in chain 1818787 at 1485/2400)

[CRACKED in 3.227486797s] user 'twentytwenty' has password 'aaaaaa' (found in chain 837839 at 1192/2400)

[00:00:04] [########################################] (00:00:00 left)

The results are in!

| Method | Space | aaaaaa | abcdef | foobar |

|---|---|---|---|---|

| Bruteforce | 0 | 1.2s | 14.5s | 29.1s |

| Exhaustive | 32GB | 0s | 10.7s | 17.5s |

| Rainbow | 61MB | 426ms | 2.5s | 3.2s |

Rainbow tables are the clear winner here 🎉

Just to convince you that there's not just those hashes in the rainbow table, let's generate ten other dummy accounts with random 6-character passwords:

$ for i in $(seq 1 10); do PASSWORD=$(openssl rand -base64 16 | tr -dc 'a-z0-9' | head -c 6); ./target/release/pass add-user random$i "$PASSWORD"; echo "(with password $PASSWORD)"; done User random1 added to database (with password ivypj5) User random2 added to database (with password iipjrc) User random3 added to database (with password zq1djg) User random4 added to database (with password e3kyj9) User random5 added to database (with password cz7pws) User random6 added to database (with password 1wcauj) User random7 added to database (with password acd8y6) User random8 added to database (with password uul2nk) User random9 added to database (with password ov7nx6) User random10 added to database (with password rrv6pi)

And let's run use-rtable again:

$ cargo build --release && ./target/release/pass use-rtable

Finished release [optimized + debuginfo] target(s) in 0.14s

Loading 2.00 million chains of len 2400 for 6-len plaintexts with charset abcdefghijklmnopqrstuvwxyz0123456789

[CRACKED in 678.589077ms] user 'random2' has password 'iipjrc' (found in chain 402534 at 2274/2400)

[CRACKED in 855.57664ms] user 'dev' has password 'foobar' (found in chain 1826222 at 2241/2400)

[CRACKED in 1.694286796s] user 'random6' has password '1wcauj' (found in chain 184202 at 2075/2400)

[CRACKED in 1.909697589s] user 'random7' has password 'acd8y6' (found in chain 1911512 at 2033/2400)

[CRACKED in 3.05897522s] user 'random4' has password 'e3kyj9' (found in chain 135326 at 1798/2400)

[CRACKED in 4.605489184s] user 'alpha' has password 'abcdef' (found in chain 1801797 at 1485/2400)

[CRACKED in 6.079666699s] user 'twentytwenty' has password 'aaaaaa' (found in chain 837839 at 1192/2400)

[CRACKED in 7.417559702s] user 'random1' has password 'ivypj5' (found in chain 1088265 at 911/2400)

[CRACKED in 10.187383951s] user 'random8' has password 'uul2nk' (found in chain 1432884 at 315/2400)

[CRACKED in 10.315894942s] user 'random9' has password 'ov7nx6' (found in chain 663976 at 285/2400)

[CRACKED in 10.443286352s] user 'random10' has password 'rrv6pi' (found in chain 1969534 at 251/2400)

[00:00:11] [########################################] (00:00:00 left)

Now here's a good demonstration of the "probabilistic" nature of rainbow

tables: we got eight ouf of ten. random3 and random5 are missing from our

table. If we were to run gen-rtable again, we might catch them! But we might

also lose out on others.

Improving our rainbow tables implementation

...is not something we're going to do. But there are things we could do.

We could — and I think that's what the original paper intends — not have a

single item_len, but instead, have next_plaintext (our Ri family of

functions) decide the length of the next plaintext, within a specified range.

The resulting rainbow table would be larger, but it would work for passwords that aren't exactly of length 6.

We also could treat hashes as an u128 - or a pair of u64. Then equality

comparison would be faster than comparing u8 slices. Or maybe it's already

optimizing that far? You do the sleuthing. I'm all sleuthed out.

Most of what we're doing is embarassingly parallel, so we could use SIMD (Single Instruction, Multiple Data) to process several items at a time on a single thread. That's left as an exercise to the reader.

Also, I'm sure we could do even fewer allocations. The memory-mapping tricks are also costing us — we probably should not trust the OS to "just do the right thing".

We could also sort our rainbow tables after generation, so that, in

find_chain_match, we don't do 2 million comparisons, only log2 of that

(which is... 20).

I'm not sure how our rainbow tables implementation compares to existing, state-of-the-art implementations, but it allowed us to show the trade-off, which is cool.

But how useful are rainbow tables... in the real world?

Defeating rainbow tables

The first defense against rainbow tables was to "add salt".

Is this a metaphor?

No, really. Salt is something you add to a password before you hash it.

Say, instead of doing:

let password = "foobar"; let hash = md5(password);

You do:

let password = "foobar";

let salt = "a79n48gm"

let input = format!("{}{}", password, salt);

let hash = md5(input);

And then you store both the hash and the salt in the database.

Wait... but then attackers could just generate a rainbow table using that salt?

Bear is right! But the idea is to have a unique salt per user. So you can generate a rainbow table for a specific user, if you really want their password.

But if the salt is long enough, generating rainbow tables for all possible salts is.. impractical. And boom, rainbow tables don't work anymore.

However — rainbow tables are no longer the best option to crack passwords. These days, your GPU is much more effective at brute-forcing passwords than your CPU is at generating and using rainbow tables.

Oh well. It was fun living in 2004 for a while!

If you liked what you saw, please support my work!

fasterthanlime

fasterthanlime