Setting up a local Ubuntu Server VM

This article is part of the Building a Rust service with Nix series.

Contents

- Picking a set of tools for this series
- Creating a VM that can use all our cores
- Setting up port forwarding
- Making sure you have a GitHub account
- Ubuntu's text mode install wizard
- Updating packages and creating a snapshot

The first step to using Nix to build Rust is to do so without Nix, so that when we finally do, we can feel the difference.

There are many ways to go about this: everyone has their favorite code editor and base Linux distribution (there's even a NixOS distribution, which I won't cover). Some folks like to develop on macOS first, and then build for Linux.

Y'all are all valid, but for this series, we're going to very specifically do what I used to do before nix, and what I'm doing now that I've embraced my 30s and become "a nix person", and we'll evaluate the upsides and downsides of both approaches.

Even if you're not ready to let go of NeoVim, or if you don't want to mess with Linux VMs locally, this will still largely be useful to you — you'll just have to do the extra credit of making it work with your preferred setup yourself.

So, let's get started!

Picking a set of tools for this series

I'm doing all this from Windows 11 (shock! awe!), and I'll be using VirtualBox for this, setting up an Ubuntu 22.04.1 VM from scratch, which we'll access using VSCode's Remote - SSH feature.

You could be using a different VM hypervisor for this. On Windows, Hyper-V is the built-in option (WSL2 relies on the same underlying virtualization platform). Actually you could be doing all this from WSL2!

You could also be using VMware Workstation Player. Or, you could be doing all this on a "bare metal" install of Linux, if you don't mind dual booting / are already doing that.

One compelling reason to use VMs is that it makes it easy to separate different projects completely: "day job" projects vs side projects, various customers if you do consulting, etc. There's no risk of mixing anything up if they're all in completely separate installs.

Doing this with containers instead of VMs is also an option, but because we're going to be running containers as part of this series, and because Docker in docker is annoying, let's not.

Ubuntu is not necessarily the best choice for a day-to-day distribution. Fedora is surprisingly capable, and we've already mentioned NixOS.

Anyway: this series is designed for you to follow along at home, so just know the more flavor you add, the more work it'll be for you. You could just stay within the tracks for now and go wild later on, that'd be neat. But you're your own person and you have agency and stuff.

With disclaimers out of the way, let's begin.

In case you don't want to set up the VM yourself from scratch, you can grab a pre-made image from osboxes for example.

If you trust them, of course.

You'll still want to review the settings we talk about in this section, notably in terms of CPUs used and port forwarding.

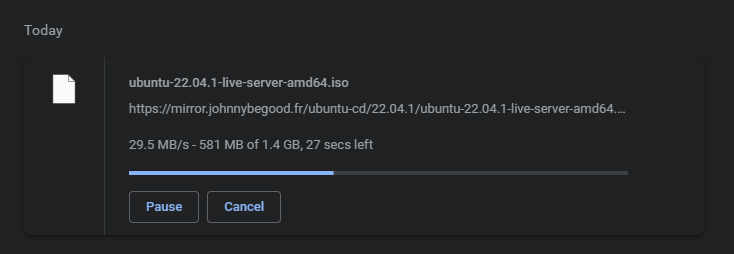

We can grab an Ubuntu Server 22.04.1 LTS install image from the official Ubuntu website: it's a 1.4GB download for me.

Creating a VM that can use all our cores

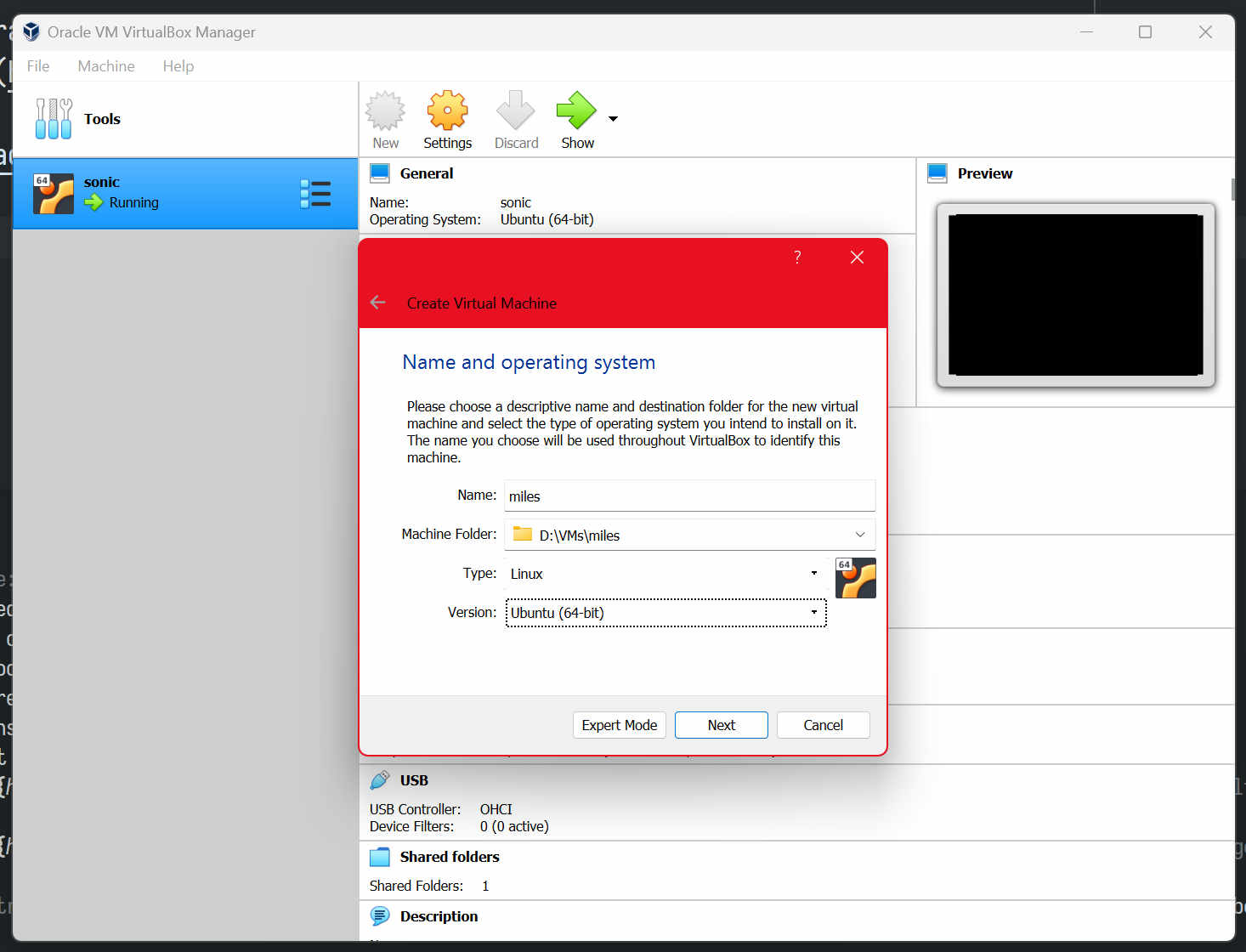

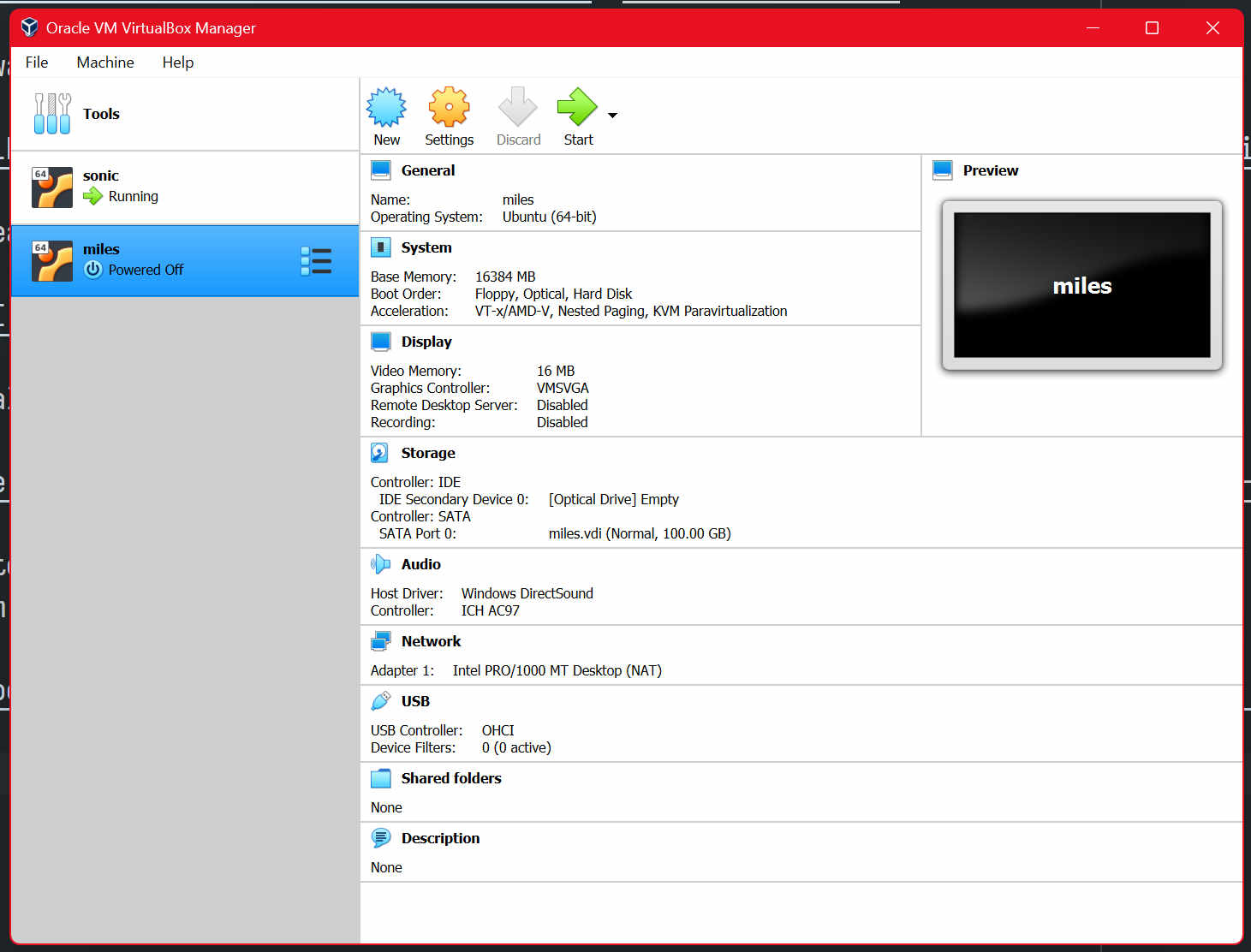

While this downloads, let's create a new VM in VirtualBox: my main VM is called "sonic", so I'll name this one "miles", pick a drive where I know I have enough space, set type to "Linux" and Version to "Ubuntu (64-bit)".

I'm fairly sure those fields mostly set the icon, although they may also influence what VirtualBox advises you to do in the configuration screen.

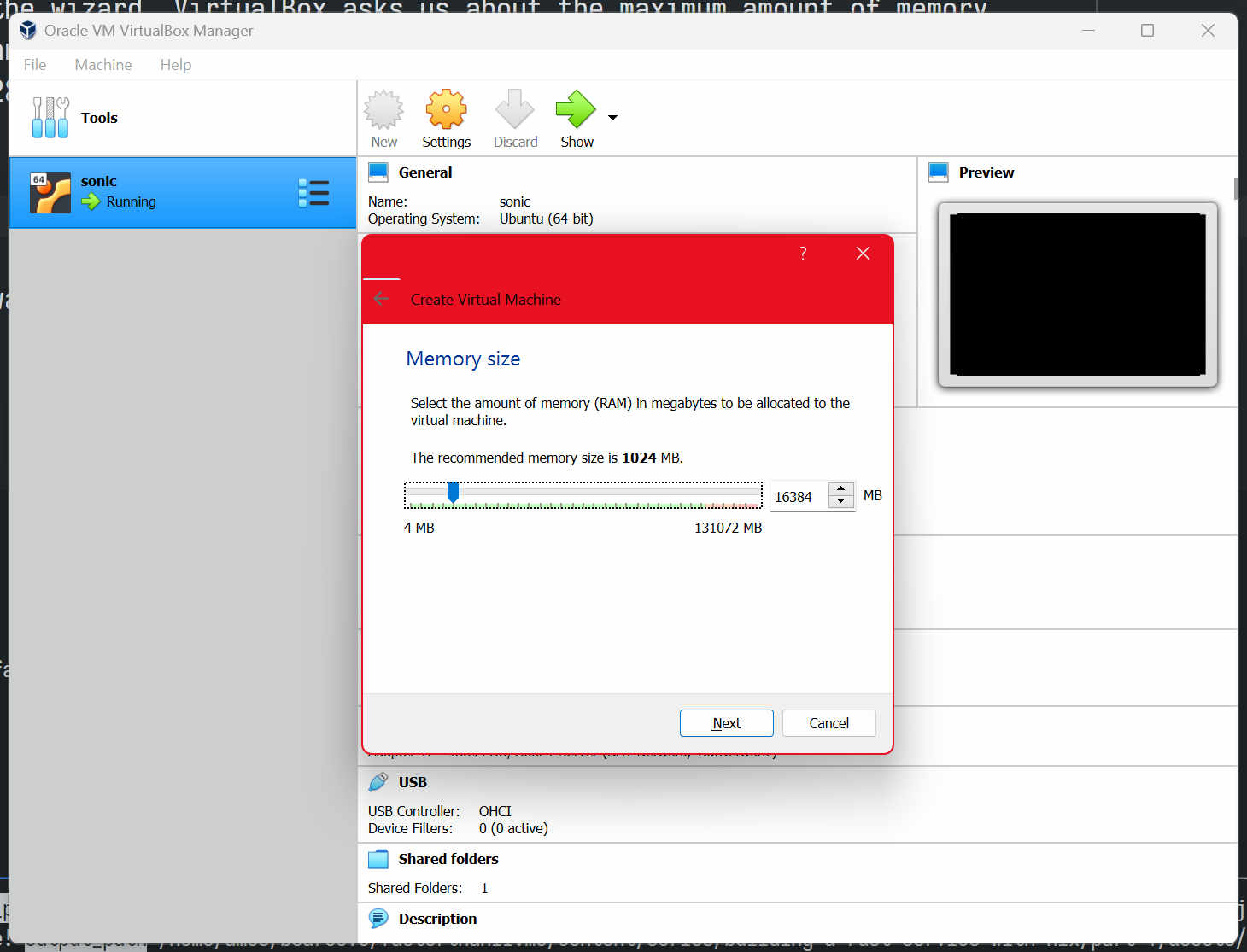

Next in the wizard, VirtualBox asks us about the maximum amount of memory: you'll want to leave some for the host operating system. Me personally, I bought 128GiB so I don't have to worry about these things, so I'll just give it 16GiB.
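If you'd rather script this than click through the wizard, here's a rough sketch of the same two steps using VBoxManage, VirtualBox's command-line tool. It assumes VBoxManage is on your PATH and that you're in a POSIX-style shell (Git Bash, for example); `gib_to_mib` is a made-up helper, there because `--memory` expects mebibytes.

```shell
# Hypothetical helper: VBoxManage's --memory flag takes MiB, not GiB.
gib_to_mib() {
  echo $(( $1 * 1024 ))
}

vm="miles"
memory_mib="$(gib_to_mib 16)"   # 16 GiB, as picked in the wizard
echo "memory for $vm: ${memory_mib} MiB"

# Only touch VirtualBox if its CLI is actually installed.
if command -v VBoxManage >/dev/null 2>&1; then
  VBoxManage createvm --name "$vm" --ostype Ubuntu_64 --register
  VBoxManage modifyvm "$vm" --memory "$memory_mib"
fi
```

The guard means the snippet just prints the computed value on machines without VirtualBox, which makes it safe to try out first.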

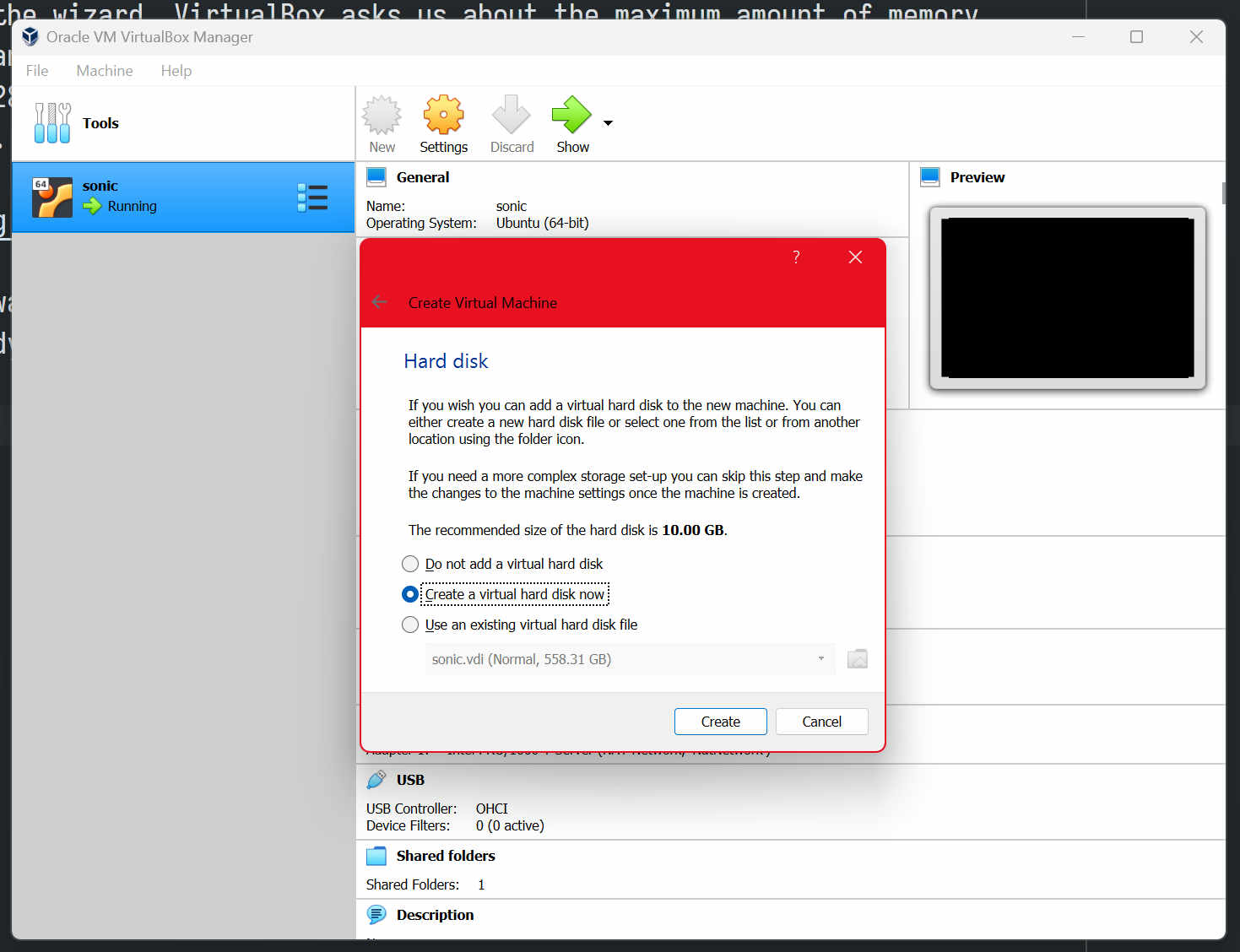

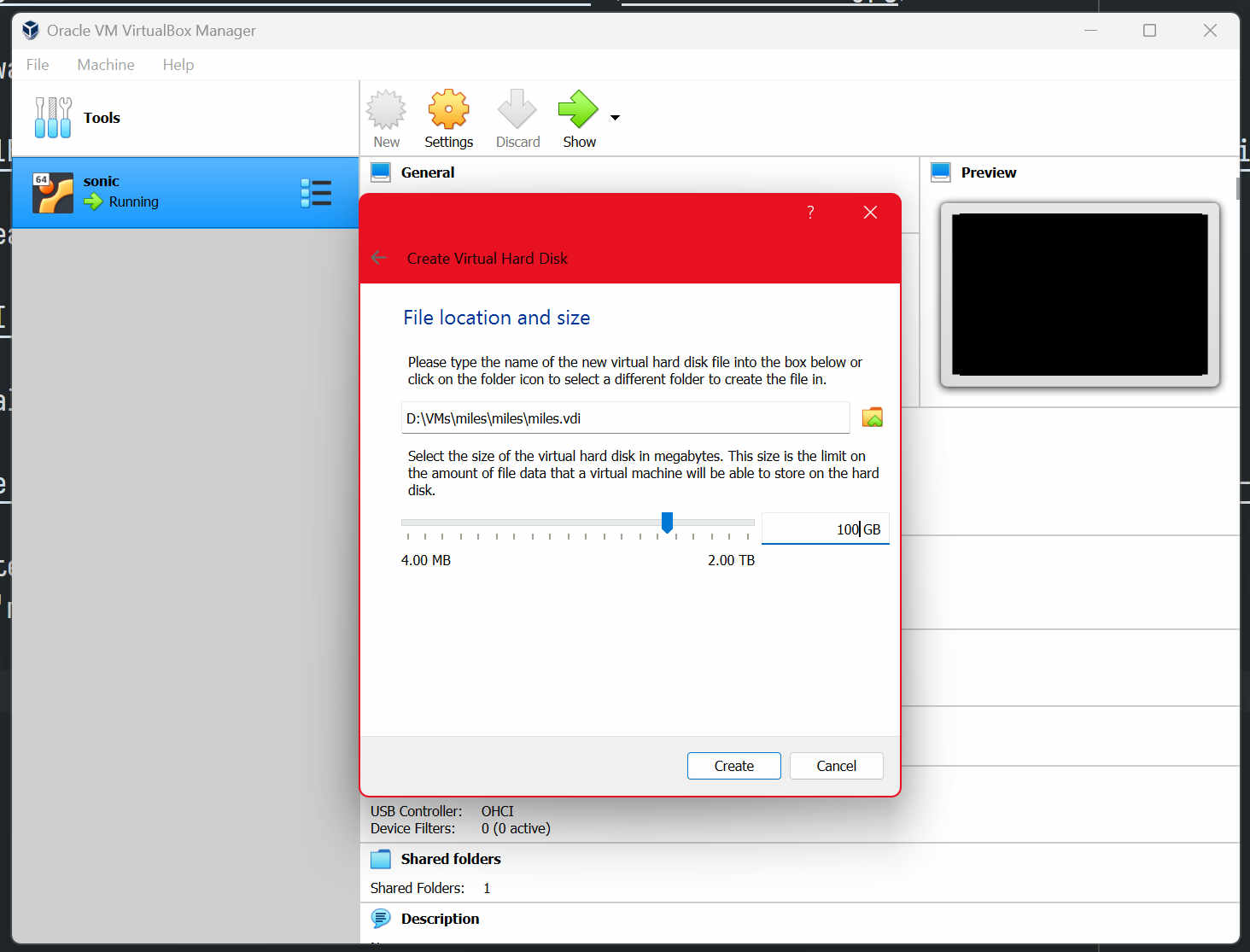

Then it wants to create a Virtual Hard Disk:

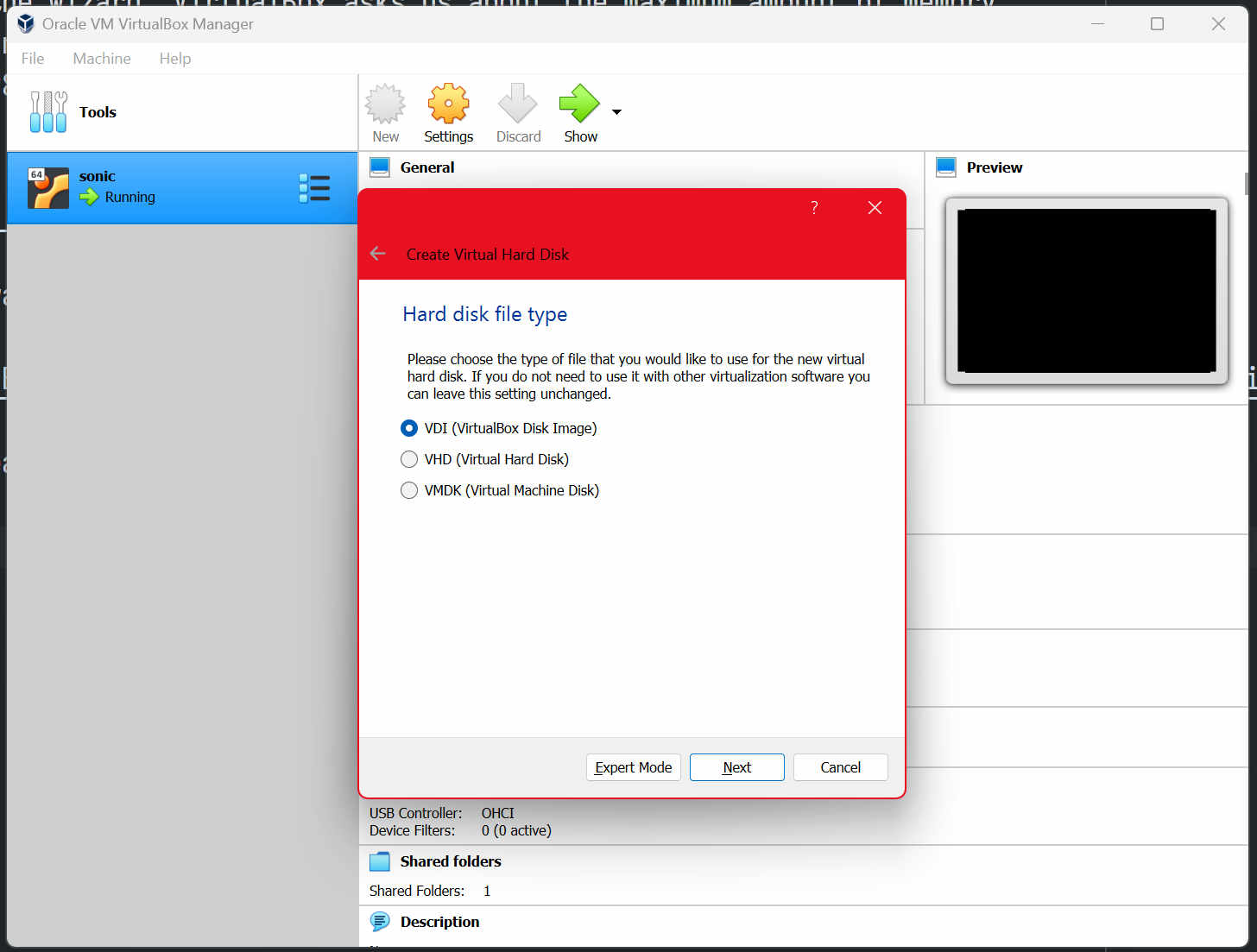

We can leave the default type:

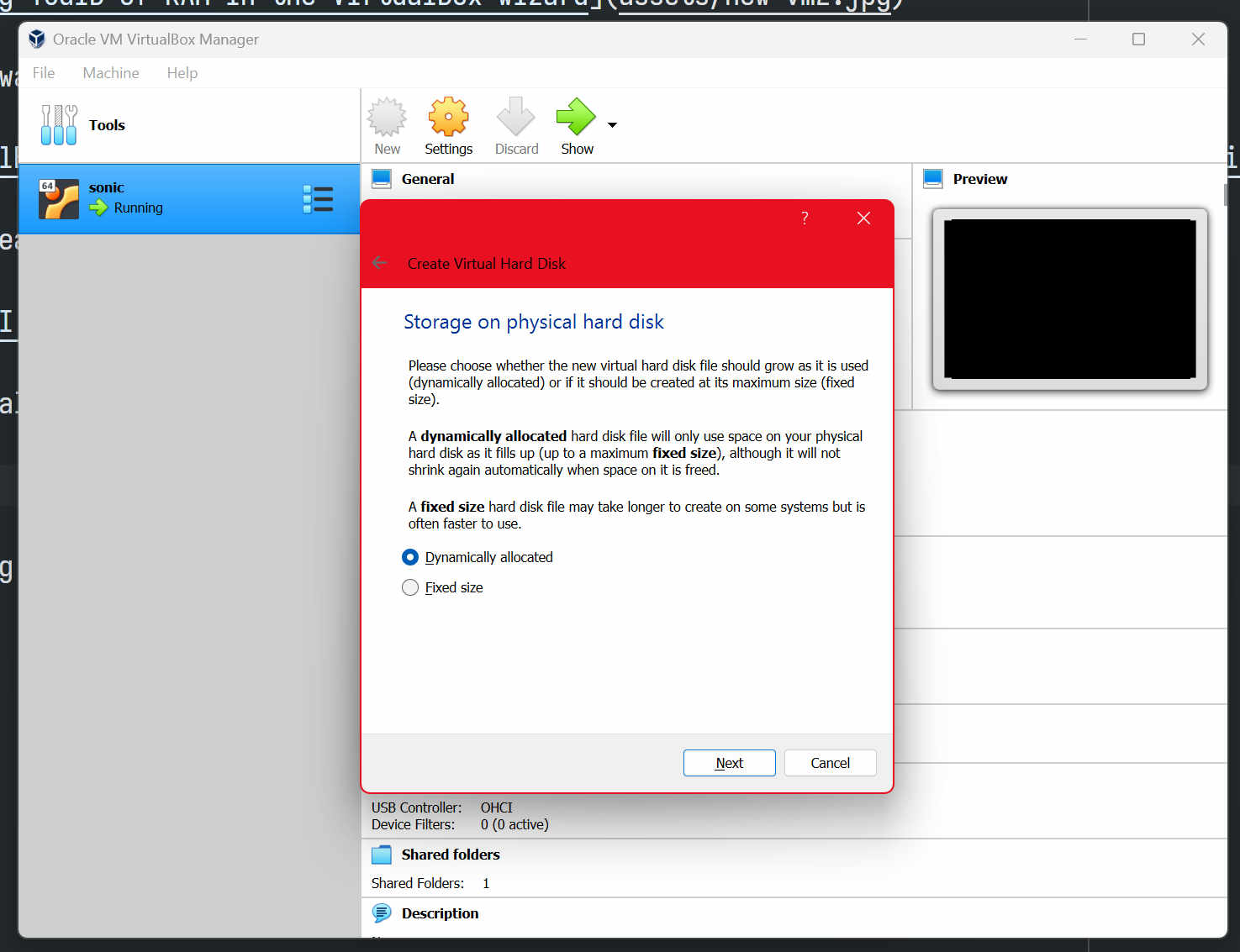

Default allocation strategy (dynamic):

Then it tells us where the disk will be stored, and we can pick its maximum size. I'm going to pick 100GiB because it's dynamically allocated anyway:
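For the command-line inclined, the disk choices (default VDI type, dynamic allocation, 100GiB cap) could look something like the sketch below. The file name `miles.vdi` is an assumption, and this only creates the disk: attaching it to the VM is a separate step that isn't shown.

```shell
disk_gib=100
disk_mb=$(( disk_gib * 1024 ))   # --size is given in megabytes
disk_file="miles.vdi"            # hypothetical file name, pick your own path
echo "disk: $disk_file, up to ${disk_mb} MB"

if command -v VBoxManage >/dev/null 2>&1; then
  # "Standard" is the dynamically allocated variant; "Fixed" would preallocate.
  VBoxManage createmedium disk --filename "$disk_file" --size "$disk_mb" \
    --format VDI --variant Standard
fi
```

Since the disk grows on demand, the 100GiB figure is a ceiling rather than space taken up front.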

Our new VM is now listed and can be started, but before we do, let's take a look at the settings:

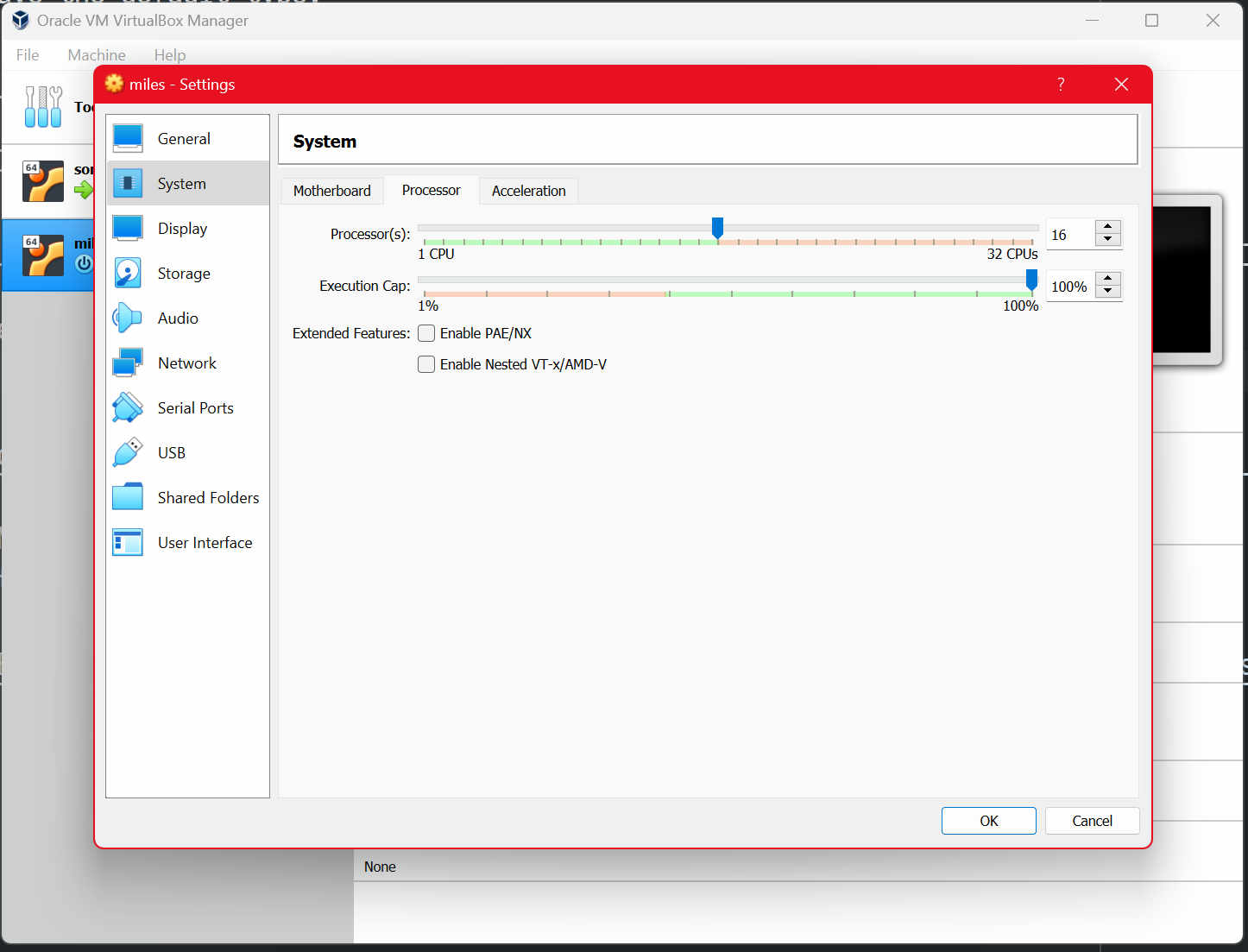

For example, the VM defaults to being limited to 1 CPU core. I have 16 cores available, so let's allow the VM to use all of them:

Usually you'd want to leave at least one core to the host OS so your guest can't accidentally make the whole machine unresponsive, but, as the kids say: you only live once.
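To make the "leave one core for the host" rule of thumb concrete, here's a small sketch. `guest_cpus` is a hypothetical helper, the core count of 16 is just my machine, and the VBoxManage call (assumed to be on your PATH) applies the YOLO setting rather than the cautious one.

```shell
# Hypothetical helper: leave one core for the host, but never go below 1.
guest_cpus() {
  total=$1
  if [ "$total" -gt 1 ]; then
    echo $(( total - 1 ))
  else
    echo 1
  fi
}

host_cores=16   # the author's machine; adjust for yours
echo "cautious: $(guest_cpus "$host_cores") CPUs, YOLO: $host_cores CPUs"

if command -v VBoxManage >/dev/null 2>&1; then
  VBoxManage modifyvm miles --cpus "$host_cores"
fi
```

Swap `"$host_cores"` for `"$(guest_cpus "$host_cores")"` in the last command if you'd rather play it safe.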

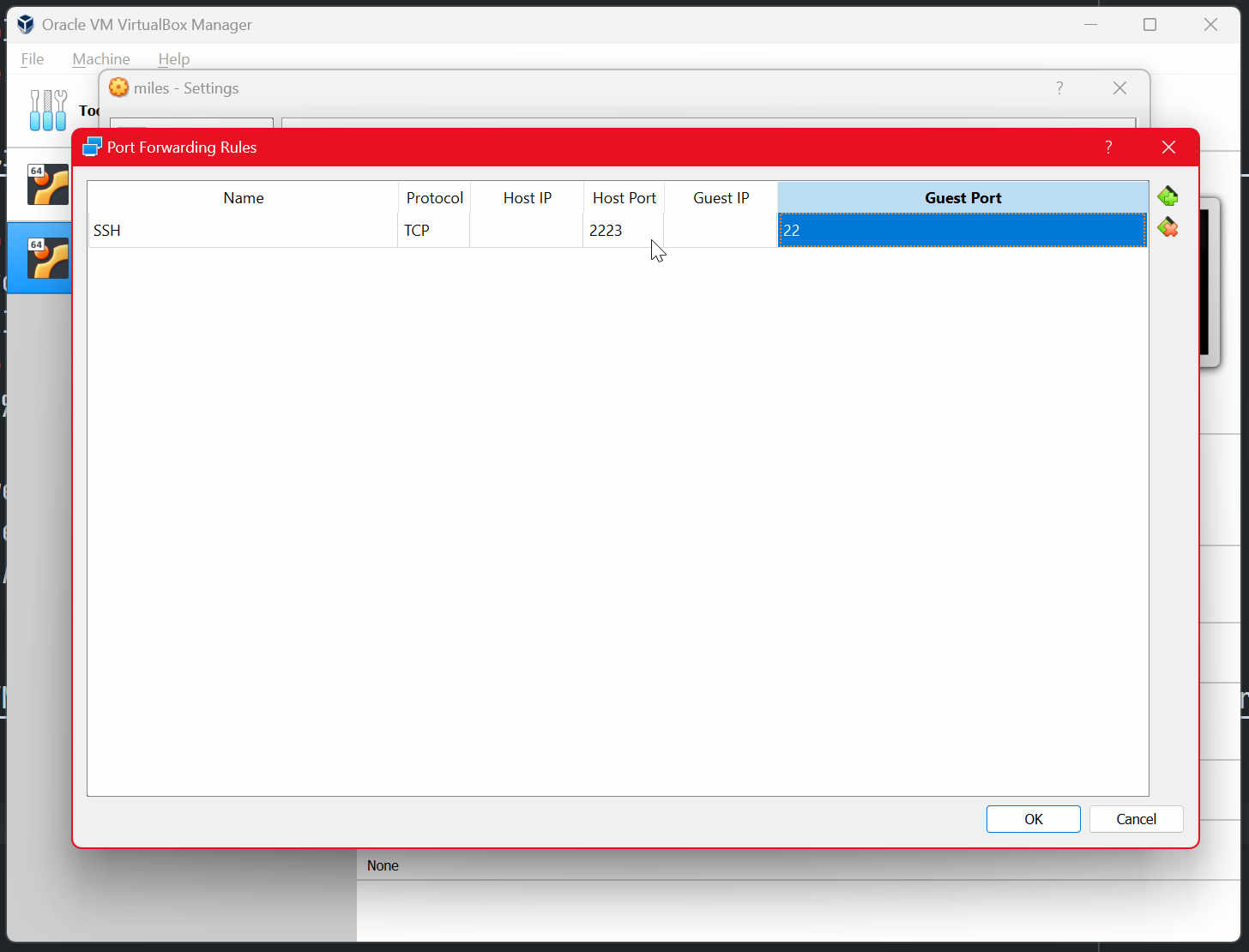

Setting up port forwarding

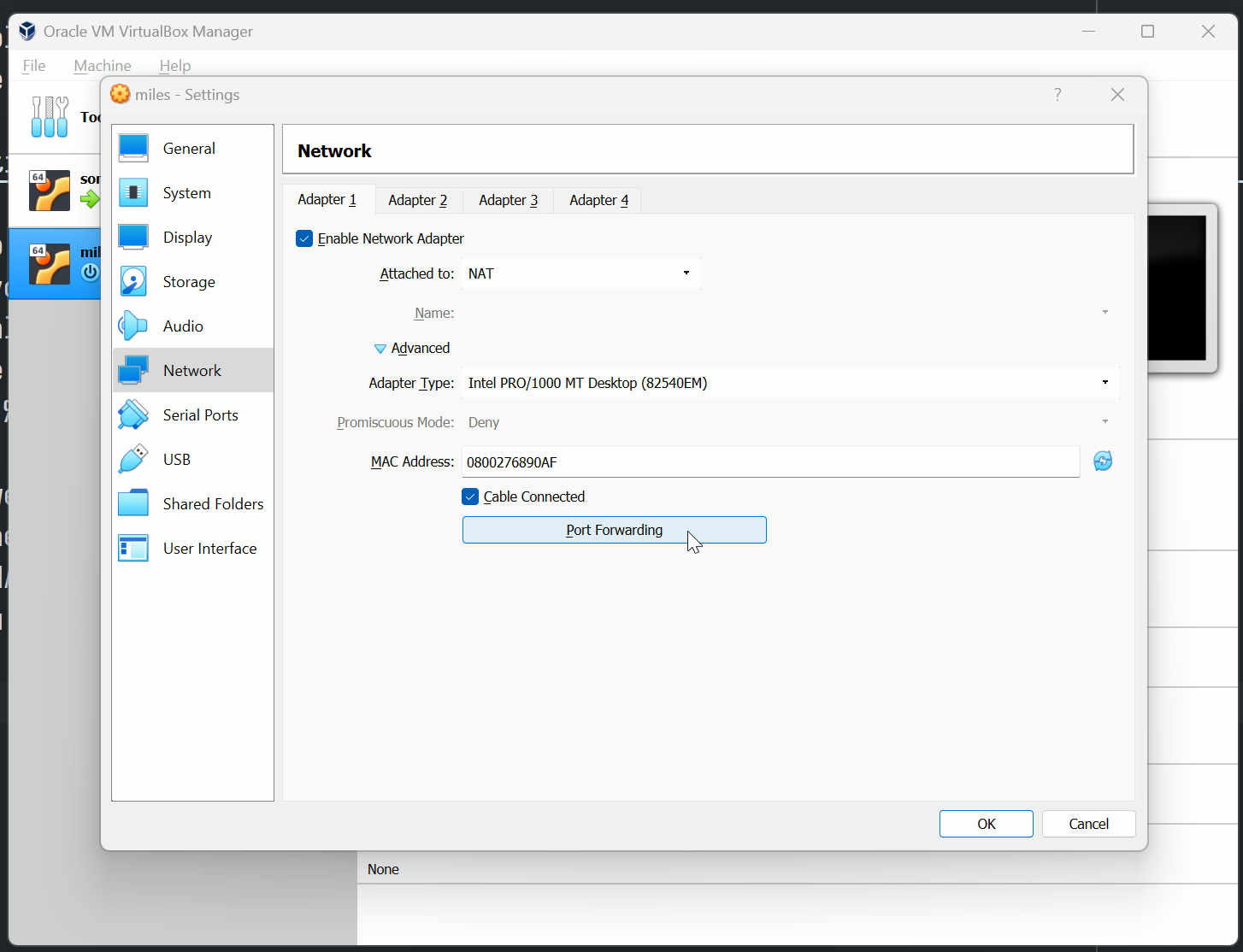

Because we're going to be connecting to the machine over SSH, we need to take care of networking too. There are many options there, but we can stick with the default NAT (which has the merit of not breaking IPv6 connectivity if your ISP gives you that)...

...we'll simply want to set up a port forwarding rule: port 2223 on the host (2222 is taken by my main VM) will redirect to port 22 on our miles VM.
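The same rule can be expressed on the command line. VBoxManage's `--natpf1` option takes a comma-separated string of the form name, protocol, host IP, host port, guest IP, guest port, where empty IP fields mean "any". The sketch below builds that string with a hypothetical `natpf_rule` helper; the rule name `ssh` is an arbitrary choice.

```shell
# Hypothetical helper: build a NAT port-forwarding rule string.
# Arguments: rule name, host port, guest port. IP fields are left empty.
natpf_rule() {
  printf '%s,tcp,,%s,,%s\n' "$1" "$2" "$3"
}

rule="$(natpf_rule ssh 2223 22)"
echo "$rule"

if command -v VBoxManage >/dev/null 2>&1; then
  # Applies to the first network adapter of the "miles" VM.
  VBoxManage modifyvm miles --natpf1 "$rule"
fi
```

`modifyvm` expects the VM to be powered off, which is the case at this point in the walkthrough.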

Making sure you have a GitHub account

Because we will first be writing a Rust service without nix, and then doing it again with nix, on a fresh Ubuntu install, we'll want to push our code somewhere. That way, when we're ready to play with nix, we'll be able to just clone our repository and build it.

Although there are many services that can be used to store Git repositories, for this series we'll use GitHub: make sure you have an account there, and follow their instructions on Connecting to GitHub with SSH.

Ubuntu's text mode install wizard

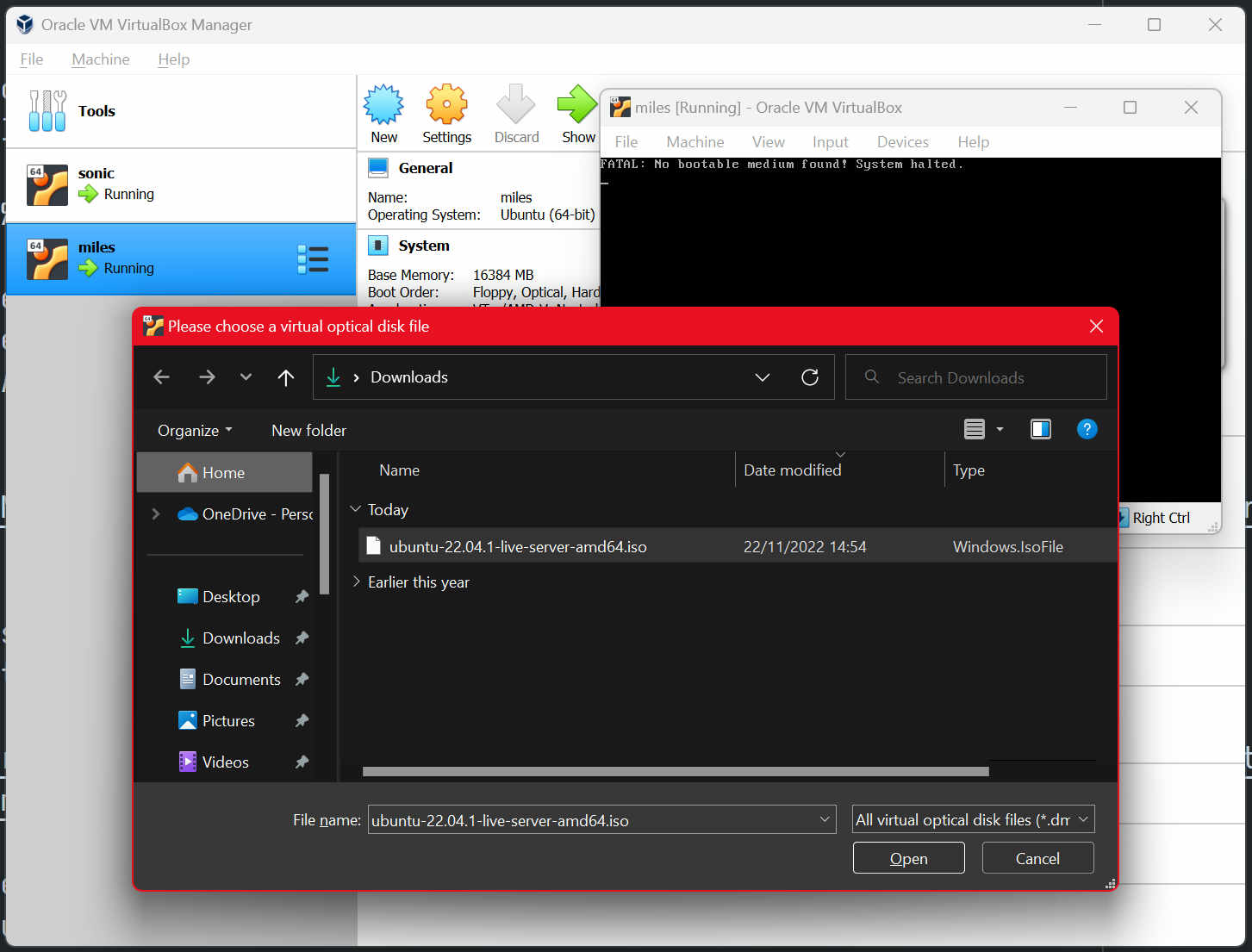

Okay! Time to start the VM for real! You'll be tempted to click the big "Start" button, but instead, let me suggest clicking the down-arrow next to it, and picking "Detachable start". More on that later.

On first start, VirtualBox suggests you pick a disk image to boot from: that's the Ubuntu server image we downloaded earlier.

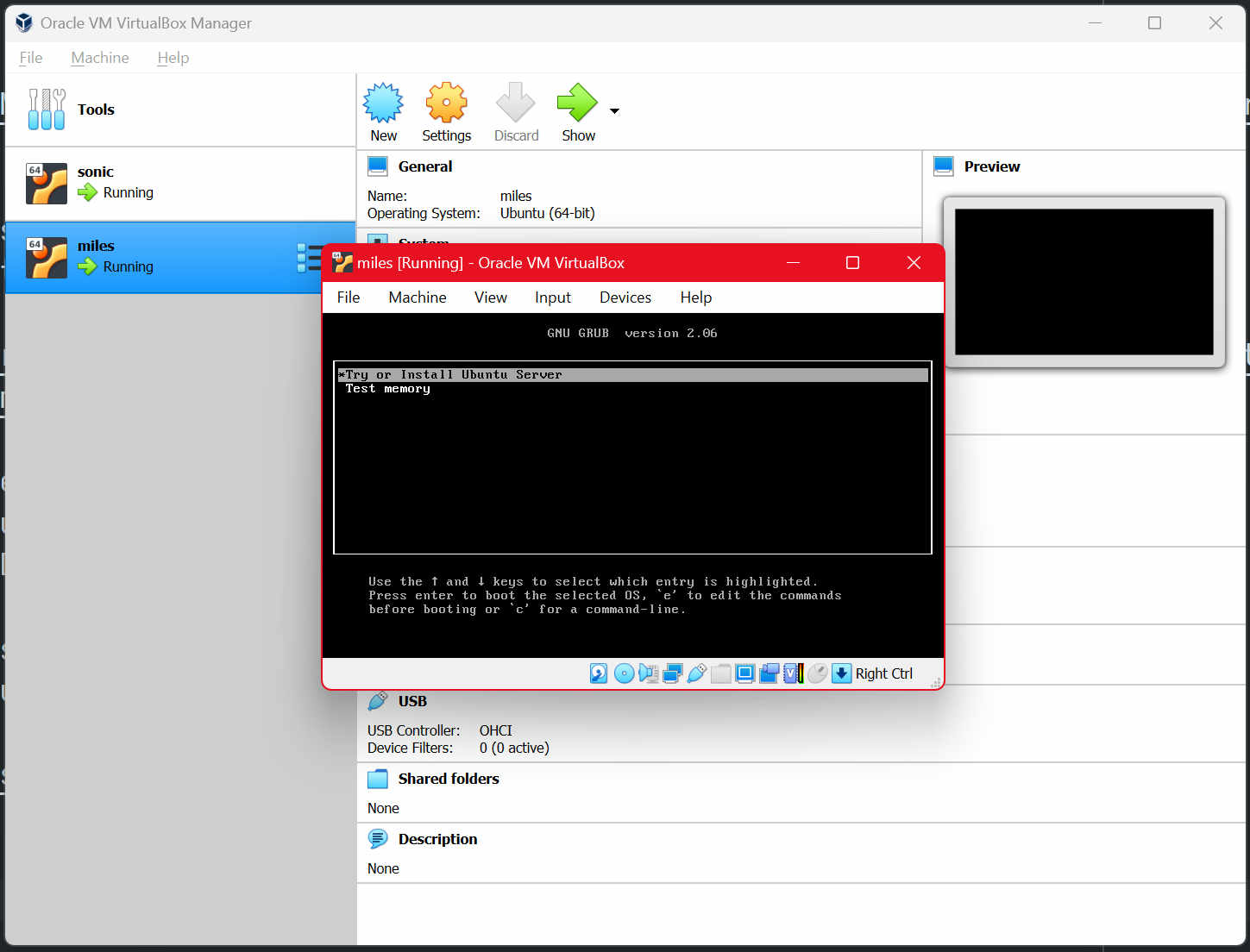

If everything went fine, we should both be looking at a pretty barebones bootloader screen. Ubuntu still uses GRUB2, apparently:

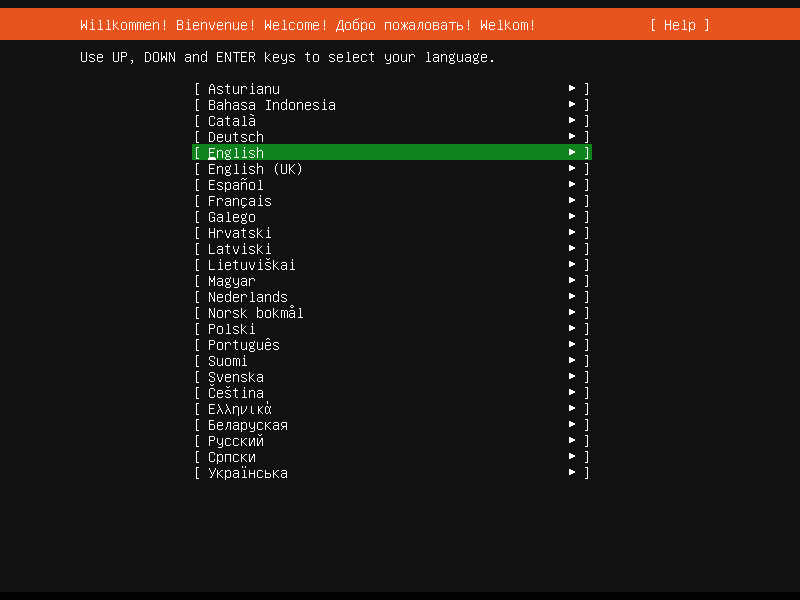

We can pick "Try or install Ubuntu server", and be greeted with a text mode install wizard:

The defaults all worked for me and I was able to hit next (by pressing "Enter", occasionally having to press "Tab" to focus the "Done" button).

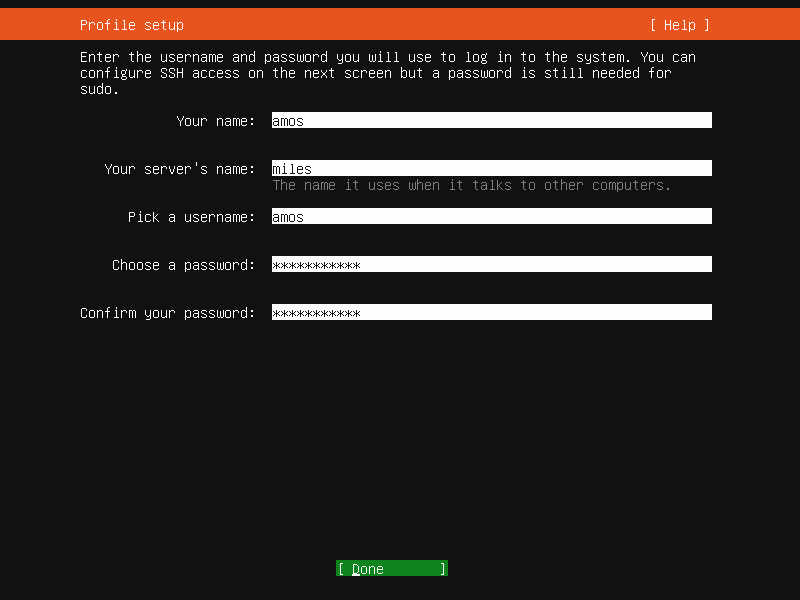

Eventually it'll ask you for personal information: you can fill in whatever. Remember, you can press the "Tab" key to focus the next field, "Shift-Tab" to focus the previous field (just like you would do in a web browser if you didn't want to reach for the mouse), and the "Enter" key to press buttons.

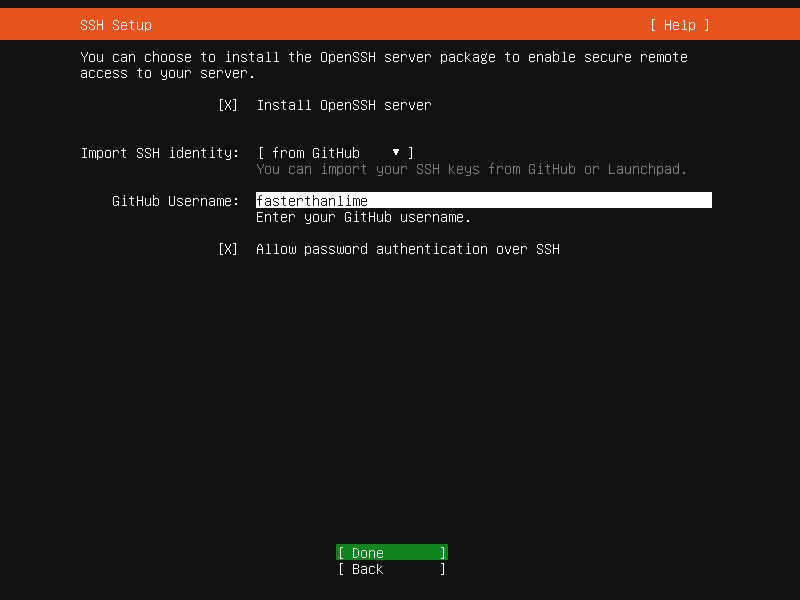

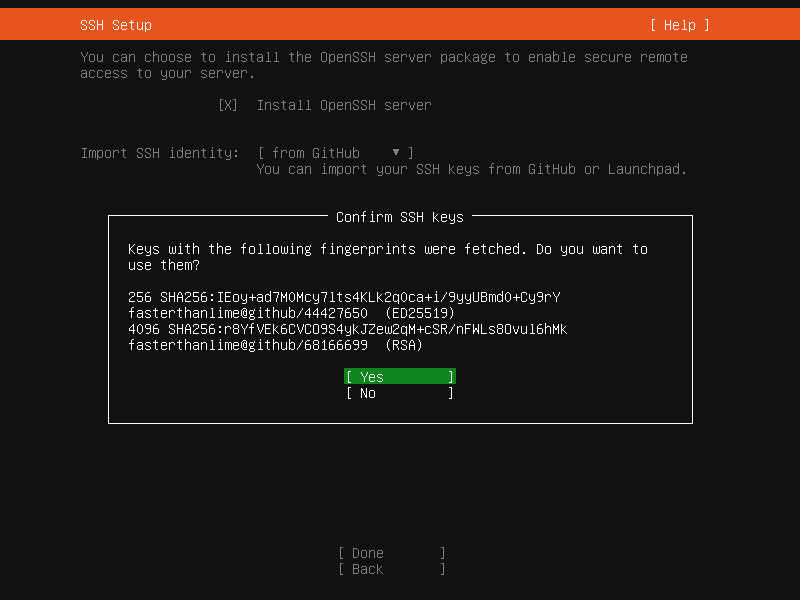

Next up, it's suggesting we install the OpenSSH server, which seems like a good idea. It even lets you import SSH identities from GitHub!

GitHub lets you add your public SSH keys, so you can use them to authenticate when cloning private repositories.

Here are amos's public keys: https://github.com/fasterthanlime.keys — you can substitute any username in that URL to get theirs!
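If you want to peek at what the installer would import, the URL pattern is easy to script. This is only a sketch: `github_keys_url` is a made-up helper, and the actual download is opt-in through a hypothetical `FETCH_KEYS` variable since it needs network access and `curl`.

```shell
# Hypothetical helper: build the public-keys URL for a GitHub username.
github_keys_url() {
  printf 'https://github.com/%s.keys\n' "$1"
}

url="$(github_keys_url fasterthanlime)"
echo "$url"

# Fetching needs network access, so it's opt-in here.
if [ "${FETCH_KEYS:-0}" = "1" ]; then
  curl -fsSL "$url"
fi
```

Running it with `FETCH_KEYS=1` prints one public key per line, in the same format as an `authorized_keys` file. On an installed Ubuntu system, the `ssh-import-id` tool (something like `ssh-import-id gh:USERNAME`) should do a similar fetch for you.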

If you need them, GitHub has great docs about SSH, in the context of Git of course, but it works just as well for our purpose. Moving forward we'll assume you already have an SSH key, and you have some SSH client installed (most probably OpenSSH, which ships by default on macOS, most Linux distributions, and is an optional Windows 11 component).

If you're not feeling 100% about SSH just yet, you can check "Allow password authentication over SSH" like I did here: you wouldn't want that on a server that is exposed to the internet (you'd be surprised how many things try to get in), but we're safely tucked away behind a NAT, so it's not as bad.

Note that, if we were installing Ubuntu on a laptop for example, we'd need to enter Wi-Fi credentials before it can do anything with the network (like fetching the SSH public keys from GitHub here). But again, this is NAT networking: if the host (here, Windows 11) has internet access, so will the guest. It just sees an ethernet network interface that has internet connectivity.

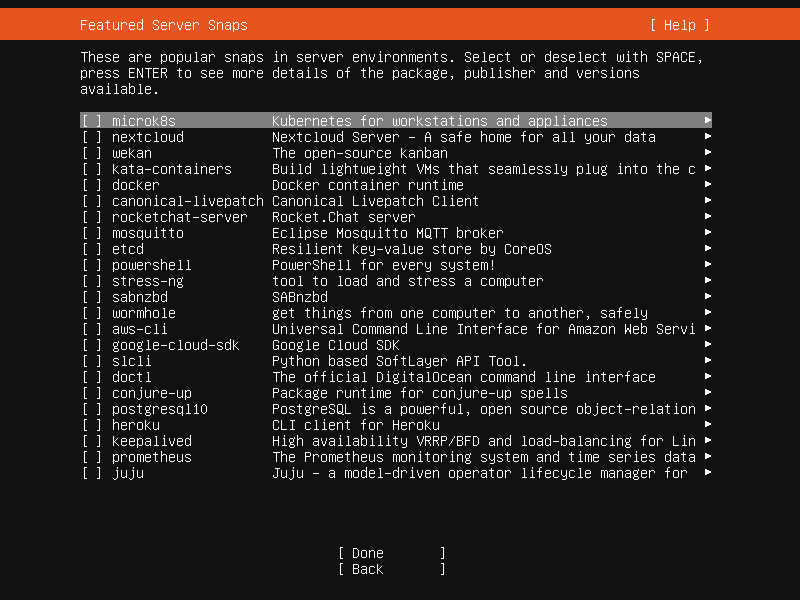

Next up, the server will suggest we install a bunch of "snaps". In this house, we don't do that. Feel free to browse, but my recommendation here is to skip that noise.

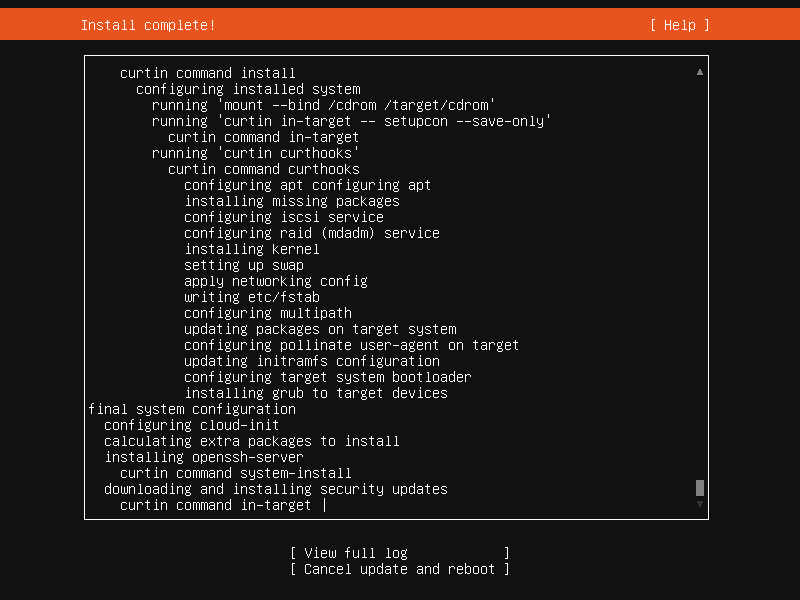

Pressing "Done" shows an "Install complete!" screen, but there's clearly a spinner going round and round here, so let's just wait until that finishes.

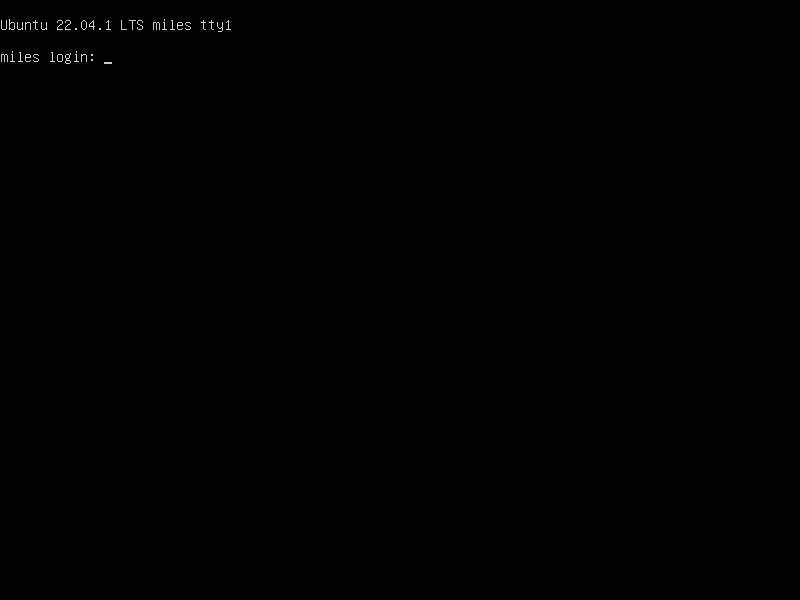

After it's done, there's a "Reboot Now" button. I got a "Failed unmounting /cdrom" message, but when I looked, the disk image had already been ejected from VirtualBox, so pressing Enter again continued with the reboot, and tada: here's our fresh new install of Ubuntu 22.04.1:

Updating packages and creating a snapshot

I'm able to log in with the username and password I set up during the install, and run:

    $ sudo apt update
    $ sudo apt upgrade
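Thanks to the port forwarding rule (host port 2223 to guest port 22), the same login also works over SSH from the host, which is what VSCode's Remote - SSH will rely on. Here's a sketch that prints an entry for `~/.ssh/config`; the `ssh_host_block` helper and the `youruser` name are placeholders, so substitute whatever you picked during the install.

```shell
# Hypothetical helper: print an ssh config entry for a NAT-forwarded VM.
# Arguments: host alias, forwarded host port, user name on the guest.
ssh_host_block() {
  cat <<EOF
Host $1
    HostName 127.0.0.1
    Port $2
    User $3
EOF
}

ssh_host_block miles 2223 youruser

# Once it looks right, append it to your config:
# ssh_host_block miles 2223 youruser >> ~/.ssh/config
```

With that entry in place, `ssh miles` should be enough to connect, and the `miles` alias should show up as a host in Remote - SSH.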

Now is a good time to take a snapshot of the VM. Why, you ask? Because when it comes time to do everything again, but with Nix, we'll want to travel back in time to this point.

So, say cheese and... press Host+T (where "Host" defaults to "Right Ctrl" for me, consult the lower-right corner of your running VirtualBox VM to find what's yours), and name it "Clean Slate".
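The keyboard shortcut has a command-line counterpart too. A minimal sketch, assuming VBoxManage is on your PATH and the VM is named "miles" as in this article:

```shell
vm="miles"
snapshot_name="Clean Slate"

if command -v VBoxManage >/dev/null 2>&1; then
  VBoxManage snapshot "$vm" take "$snapshot_name"
  # Later, to travel back in time (with the VM powered off):
  # VBoxManage snapshot "$vm" restore "$snapshot_name"
else
  echo "VBoxManage not found; would snapshot $vm as \"$snapshot_name\""
fi
```

The quotes around `"$snapshot_name"` matter here, since the name contains a space.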

Are we good? Good! Let's move on.

Installing Linux on a VM works much like installing it on a physical computer, except VMs tend to come with "hardware" that's readily supported, networking works out of the box, disks are just files on the host OS, etc.

Ubuntu Server comes with a different installer and a different set of defaults than Ubuntu Desktop, but it uses the same package repository and one can be turned into the other if you really want to.

What we've done here manually is exactly what Vagrant solves. However, Vagrant hides a bunch of specifics that are interesting to learn, so we didn't use it here, on purpose.

This article is part 1 of the Building a Rust service with Nix series.


fasterthanlime
