Deploying at the edge

This article is part of the Updating fasterthanli.me for 2022 series.

Contents

- Content Delivery Networks

- Migrating from rusqlite to sqlx

- Deploying software and content updates

Disclosure: although I no longer work for the company my website is hosted on, at the time of this writing I don't pay for hosting. This article is written in a way that mentions neither my previous nor my current hosting provider.

One thing I didn't really announce (because I wanted to make sure it worked first) is that I've completely migrated my website from a CDN (Content Delivery Network) to an ADN (Application Delivery Network), which required some architectural changes.

Content Delivery Networks

In a CDN setup, there are PoPs (points of presence) all around the globe that take care of TLS termination (including certificate renewal, etc.) and proxy requests to, in my case, a single origin server:

This was already a win compared to serving directly from the origin, since setting up a TCP connection and a TLS session takes a few RTTs (round-trip times; exactly how many depends on the TLS version and on whether a session is being resumed), and the edge nodes were able to cache some assets, mostly images and stylesheets.
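To put rough numbers on that, here's a back-of-the-envelope estimate. Every figure is hypothetical: a visitor 150 ms (round-trip) away from the origin but 20 ms away from the nearest PoP, TLS 1.3 (one round-trip on top of TCP's own handshake), a PoP that already holds a warm connection to the origin (130 ms round-trip), and zero server processing time:

```latex
\begin{aligned}
t_{\text{direct}} &= \underbrace{150}_{\text{TCP}} + \underbrace{150}_{\text{TLS 1.3}} + \underbrace{150}_{\text{request}} = 450\ \text{ms} \\
t_{\text{edge, miss}} &= \underbrace{20 + 20}_{\text{handshakes at the PoP}} + \underbrace{20 + 130}_{\text{request, forwarded}} = 190\ \text{ms} \\
t_{\text{edge, hit}} &= 20 + 20 + 20 = 60\ \text{ms}
\end{aligned}
```

Under these assumptions even a cache miss comes out ahead: the handshakes happen close to the visitor, and only the request itself makes the long trip to the origin.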

HTML pages, however, are rendered from templates and Markdown sources, and they hit the database. They also render differently depending on whether you're logged in or not.

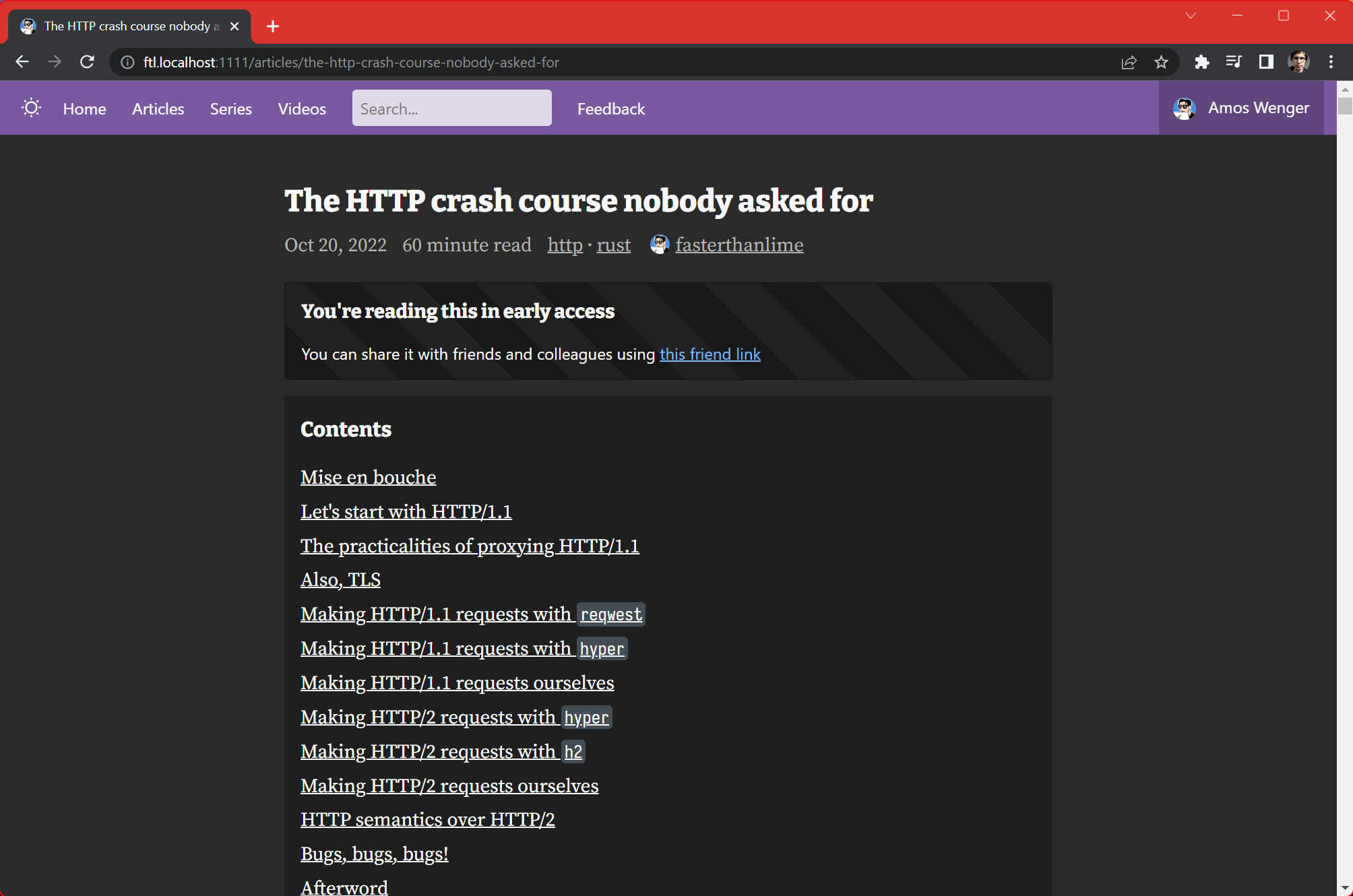

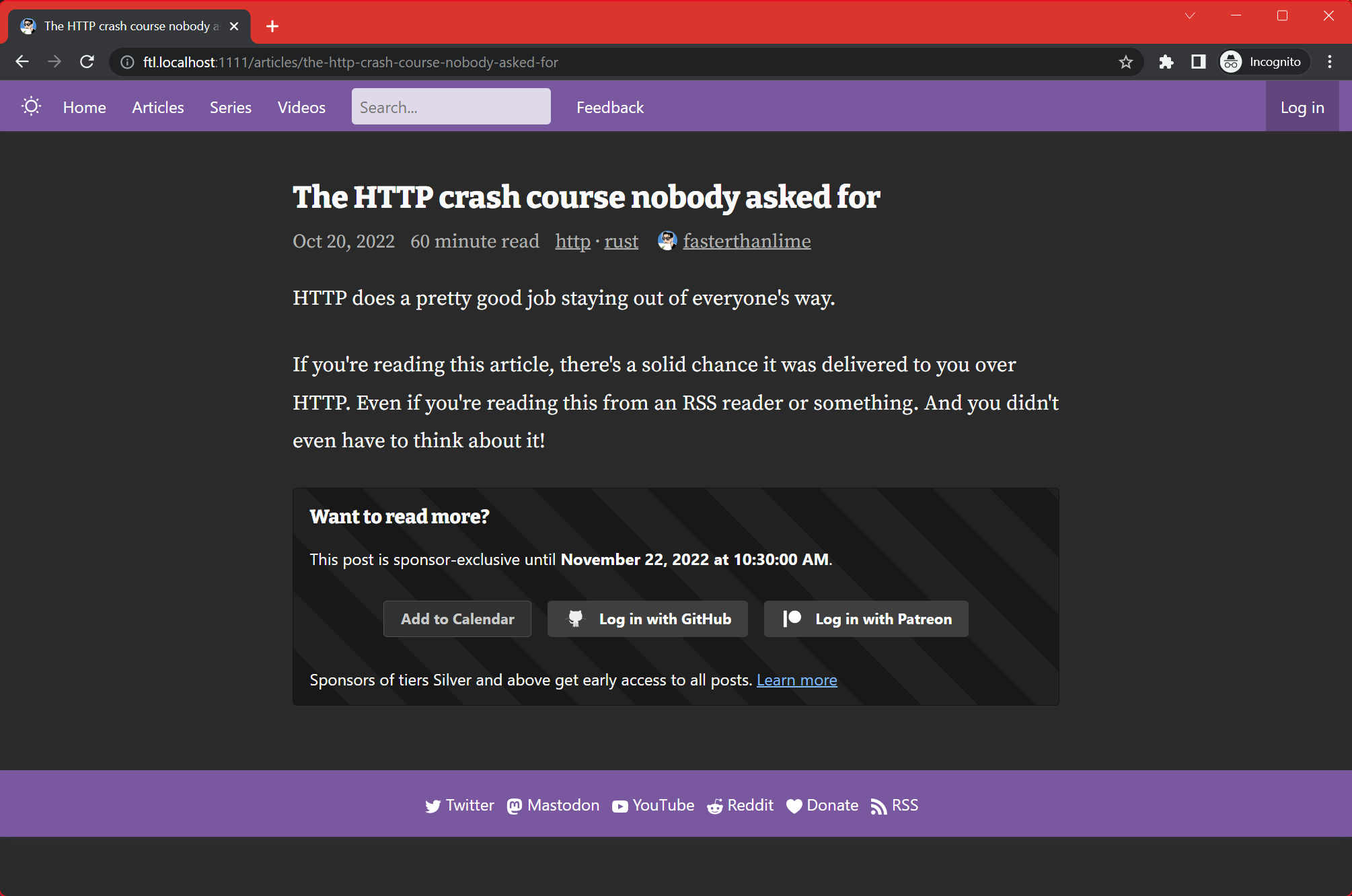

For example, sponsors at the "Silver" tier and above get to read some articles one week in advance: if they navigate to an article, they'll be able to read the whole thing, along with a box thanking them for their support, and a friend link they can share with.. friends!

Everyone else sees the first few paragraphs, and a box that lets them know they can become sponsors if they want to read it now, or, that they can add it to their calendar to get notified when it goes live for everyone:

The fundamental problem CDNs have with dynamic content like this is that there's no way for a "generic CDN edge node" to know whether a page should be cached or not. The logged-out version can certainly be cached, but to know whether someone is legitimately logged in, we must run application code, which lives on the origin server.
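Deciding between the full article and the teaser is exactly the kind of application logic a generic edge node can't run. Here's a minimal sketch of that rule in Rust, assuming tiers can be ordered and times are Unix timestamps in seconds. Only the "Silver" tier and the one-week head start come from the text above; `NotSponsor`, `Bronze`, `Gold` and `can_read_full` are hypothetical names:

```rust
/// Sponsor tiers, ordered from lowest to highest. Only `Silver` is named in
/// the article; the other variants are hypothetical placeholders.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum Tier {
    NotSponsor,
    Bronze,
    Silver,
    Gold,
}

/// Early-access window: one week, in seconds.
const EARLY_ACCESS_SECS: u64 = 7 * 24 * 60 * 60;

/// Returns true if the visitor should see the whole article rather than
/// the teaser. `now` and `public_at` are Unix timestamps in seconds.
fn can_read_full(tier: Tier, now: u64, public_at: u64) -> bool {
    if now >= public_at {
        // Already live for everyone.
        return true;
    }
    // Silver and above may read up to one week before the public date.
    tier >= Tier::Silver && now.saturating_add(EARLY_ACCESS_SECS) >= public_at
}
```

Note that the function is only as trustworthy as its `tier` argument, and establishing that requires validating the visitor's session, which is precisely the part that happens on the origin.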

Various CDNs offer various solutions to this problem: for example, they'll usually have an easy way to disable caching for logged-in users, by simply bypassing the cache if the incoming request has a PHPSESSID cookie set (for, y'know, PHP web applications).

The problem with that, of course, is that you don't know whether it's a valid session.

So once a potential attacker notices that they can just forge an arbitrary PHPSESSID, bypass the cache, and hammer your origin server, they'll do just that!
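Here's what that naive rule amounts to, sketched in Rust. The function name is hypothetical, and real CDNs express this in their own configuration languages rather than Rust, but the logic is the same: it checks that a PHPSESSID cookie exists, and nothing more.

```rust
/// Naive edge rule: bypass the cache whenever a `PHPSESSID` cookie is
/// present. It only checks that the cookie exists; it has no way to tell a
/// real session from a made-up one.
fn naive_should_bypass_cache(cookie_header: Option<&str>) -> bool {
    cookie_header.map_or(false, |header| {
        header
            .split(';')
            // Each pair looks like "name=value", possibly with leading spaces.
            .filter_map(|pair| pair.trim().split_once('='))
            .any(|(name, _value)| name == "PHPSESSID")
    })
}
```

Since the value is never checked, sending `PHPSESSID=` followed by anything random is enough to send every single request straight to the origin.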

This is less of a problem if the CDN in question has some sort of DDoS mitigation built in, but... it still kinda is: rendering pages can be expensive. So I added caching in various parts of my origin server; you can read about that part in I Won Free Load Testing.

Still, performance wasn't as good as it could be for logged-in users, who just happen to be my ~~paying customers~~ generous sponsors, and I wanted to address that.

Migrating from rusqlite to sqlx

You may have noticed that there are two databases in that first diagram.

The first is a content database, stored in SQLite, which holds all the Markdown sources, templates, stylesheets, routes, redirects, etc. The second is the users database, which contains preferences (do you like fancy ligatures in your code or not?) and credentials for third-party authentication providers (like Patreon).

In an ADN (Application Delivery Network) scenario, you run several copies of your application in different geographical locations, and so for me, duplicating the content database was okay, but I wanted a single users database.

So the first step involved migrating a bunch of code from rusqlite to sqlx, then from "sqlx for SQLite" to "sqlx for Postgres" (the SQL dialects are slightly different), provisioning a high-availability Postgres database from my ADN provider, and switching over to it.

This was more work than expected. rusqlite provides a synchronous interface to SQLite, and I queried it from async contexts, so I had to use spawn_blocking. sqlx, on the other hand, provides an asynchronous interface, and I also queried it from sync contexts (like rendering a Liquid template), so I had to use Handle::block_on. Eventually, though, I got it working.

Deploying software and content updates

With a single origin, futile (the software powering the website) ran as a systemd service, and updating it was as easy as SSH-ing in, running git pull, backing up the previous executable just in case, running cargo build --release, and restarting the systemd service. Zero-downtime deploys, easy peasy.

Wait, zero-downtime how?

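Thanks to systemd socket units: futile didn't actually do the listening. systemd opened the listening socket, handed it to futile, and kept it open across restarts, so incoming connections simply waited in the socket's queue until the new process was ready to accept them.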
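For the curious, here's a minimal sketch of the receiving side of socket activation, using only the Rust standard library on Unix. It follows the documented sd_listen_fds protocol: systemd sets LISTEN_PID and LISTEN_FDS, and passes listening sockets starting at file descriptor 3. This is not futile's actual code; it assumes a single TCP socket (ListenStream= in the .socket unit), and `listen_fds_count` and `inherited_listener` are hypothetical names.

```rust
use std::net::TcpListener;
use std::os::unix::io::{FromRawFd, RawFd};

/// First file descriptor passed by systemd (SD_LISTEN_FDS_START).
const SD_LISTEN_FDS_START: RawFd = 3;

/// How many sockets systemd passed to *this* process. Takes the variables
/// as arguments so it can be tested without touching the real environment.
fn listen_fds_count(listen_pid: Option<&str>, listen_fds: Option<&str>, my_pid: u32) -> usize {
    // The variables are only meant for the process whose PID matches.
    match listen_pid.and_then(|p| p.parse::<u32>().ok()) {
        Some(pid) if pid == my_pid => {}
        _ => return 0,
    }
    listen_fds.and_then(|n| n.parse().ok()).unwrap_or(0)
}

/// Takes ownership of the first socket systemd opened for us, if any.
fn inherited_listener() -> Option<TcpListener> {
    let pid = std::env::var("LISTEN_PID").ok();
    let fds = std::env::var("LISTEN_FDS").ok();
    if listen_fds_count(pid.as_deref(), fds.as_deref(), std::process::id()) == 0 {
        return None;
    }
    // SAFETY: per the protocol, fd 3 is an open listening socket handed to us.
    Some(unsafe { TcpListener::from_raw_fd(SD_LISTEN_FDS_START) })
}
```

Because systemd still holds its own copy of the socket, restarting the service never closes it, which is where the "zero-downtime" part comes from.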

With multiple origins, though... I had to package everything up as a container image and use the ADN's own facilities for deployment. That was very easy, since I'd done it a bunch of times before. Maybe I should write about just that part!

As for deploying content updates, futile is designed to watch for filesystem changes and rebuild revisions incrementally, atomically switching to the next one, so I did that in production too.
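One way to get that "atomically switching to the next one" behavior in Rust is to keep the current revision behind an `Arc` and only ever swap the pointer. This is a sketch under assumptions: `Revision`, `Site`, `snapshot` and `publish` are hypothetical names, the revision is reduced to a map of rendered pages, and futile may well do it differently (the arc-swap crate offers a lock-free take on the same idea).

```rust
use std::collections::HashMap;
use std::sync::{Arc, RwLock};

/// A fully built revision, immutable once published. Hypothetical shape:
/// just a revision ID and a map from route to rendered page.
struct Revision {
    id: String,
    pages: HashMap<String, String>,
}

/// Holds the current revision. Readers clone the `Arc` and keep using that
/// snapshot even if a newer revision is published mid-request.
struct Site {
    current: RwLock<Arc<Revision>>,
}

impl Site {
    fn new(initial: Revision) -> Self {
        Site { current: RwLock::new(Arc::new(initial)) }
    }

    /// Cheap snapshot for a request handler: the read lock is only held
    /// for the duration of the `Arc` clone.
    fn snapshot(&self) -> Arc<Revision> {
        Arc::clone(&*self.current.read().unwrap())
    }

    /// Publishes a revision that was built in the background. Swapping the
    /// pointer is the only work done under the write lock, so readers never
    /// observe a half-built revision.
    fn publish(&self, next: Revision) {
        *self.current.write().unwrap() = Arc::new(next);
    }
}
```

In-flight requests keep rendering from whichever snapshot they grabbed, and the old revision is freed once the last of them drops its `Arc`.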

I'd have a command that would just SSH into the origin, run git pull in the fasterthanli.me folder, and that's it.

But with multiple origins... well, technically nothing needed to change. I just didn't feel like SSH-ing into half a dozen separate nodes, or setting up an isolated Git user that could only clone/pull from the content repository.

Also, SSH-ing to a bunch of nodes (even in parallel) tends to be slow because, something something key exchange mechanisms, lots of roundtrips, old protocol maybe, I don't know.

So here's what I did instead: I taught futile to push all revision assets to cloud storage (something S3-compatible; it doesn't matter which, since we can rebuild it from scratch if it goes down). S3 has a very good official Rust crate now (that wasn't the case when I started), so that part was no problem at all.

Wait, so you re-implemented a subset of Git, poorly?

Yes I did, shut up.

Because there are thousands of objects involved in a single revision (thankfully content-addressed, which means each object's path is a hash of its content), deploying was slow at first: it would spend most of its time checking whether objects were already there.
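To illustrate content-addressing: an object's key is derived from nothing but its bytes. This sketch uses 64-bit FNV-1a only to stay dependency-free; a real system would use a cryptographic hash such as SHA-256 so that collisions are a non-issue. The key layout (`objects/<first hash byte>/<hash>`) is a hypothetical example, not futile's.

```rust
/// 64-bit FNV-1a: a stand-in for a cryptographic hash, chosen only so the
/// sketch needs no dependencies. Don't use it for real deduplication.
fn fnv1a64(bytes: &[u8]) -> u64 {
    let mut hash: u64 = 0xcbf2_9ce4_8422_2325; // FNV offset basis
    for &b in bytes {
        hash ^= u64::from(b);
        hash = hash.wrapping_mul(0x0000_0100_0000_01b3); // FNV prime
    }
    hash
}

/// Object key derived from content only: identical bytes always map to the
/// same key, and any edit produces a different key.
fn object_key(content: &[u8]) -> String {
    let h = fnv1a64(content);
    // Shard by the top byte of the hash so no single prefix gets huge.
    format!("objects/{:02x}/{:016x}", h >> 56, h)
}
```

Because identical content always yields the same key, an object that's already in the bucket never needs to be uploaded twice, which is why knowing the set of keys a previous revision used is enough to skip most uploads.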

There might be a better way to do this with S3 (a bulk "let me know which of these 2600 objects don't exist" operation), but I started getting worried about billing and stuff, so I did the low-tech thing instead: for each revision, futile generates a manifest that simply lists all the objects it has.

When deploying a new revision, it downloads the previous revision's manifest and only uploads the objects that were added since. (Note that a modified file counts as a "new object", because of content-addressing.)
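If a manifest is represented as a set of object keys (a hypothetical representation; the article doesn't describe futile's manifest format), "only upload what was added since" boils down to a set difference. `Manifest` and `objects_to_upload` are illustrative names:

```rust
use std::collections::BTreeSet;

/// A revision manifest: the set of object keys the revision needs.
type Manifest = BTreeSet<String>;

/// Keys present in `next` but not in `previous`: the only objects to upload.
/// A modified file hashes to a new key, so it shows up here automatically.
fn objects_to_upload(previous: &Manifest, next: &Manifest) -> Vec<String> {
    next.difference(previous).cloned().collect()
}
```

On the very first deploy the previous manifest is empty, so everything gets uploaded. In this sketch, objects that disappear from a revision are simply no longer referenced; removing them from the bucket would be a separate garbage-collection step.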

Once it's confident all the new objects have been uploaded, it writes a new revision manifest, along with a latest-revision file that contains the ID of the newly uploaded revision, and tada, it's all in the cloud now.
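The order of those writes is what makes the process safe: objects first, then the manifest, then the latest-revision pointer. Here's a sketch against an in-memory stand-in for the bucket. The `ObjectStore` trait, the `manifests/<id>` key, and the one-key-per-line manifest format are all hypothetical, and this is not the AWS SDK's API.

```rust
use std::collections::{BTreeSet, HashMap};

/// Minimal stand-in for an S3-like bucket (hypothetical trait).
trait ObjectStore {
    fn get(&self, key: &str) -> Option<Vec<u8>>;
    fn put(&mut self, key: &str, body: Vec<u8>);
}

#[derive(Default)]
struct MemoryStore {
    objects: HashMap<String, Vec<u8>>,
}

impl ObjectStore for MemoryStore {
    fn get(&self, key: &str) -> Option<Vec<u8>> {
        self.objects.get(key).cloned()
    }
    fn put(&mut self, key: &str, body: Vec<u8>) {
        self.objects.insert(key.to_string(), body);
    }
}

/// Publishes a revision in dependency order: objects, then the manifest,
/// then the `latest-revision` pointer.
fn publish_revision(
    store: &mut impl ObjectStore,
    revision_id: &str,
    objects: &HashMap<String, Vec<u8>>, // key -> content, for every object in the revision
    already_uploaded: &BTreeSet<String>, // keys from the previous revision's manifest
) {
    // 1. Upload only the objects the bucket doesn't have yet.
    for (key, body) in objects {
        if !already_uploaded.contains(key) {
            store.put(key, body.clone());
        }
    }
    // 2. Write the manifest: one key per line, sorted for stable output.
    let mut keys: Vec<&str> = objects.keys().map(String::as_str).collect();
    keys.sort_unstable();
    store.put(&format!("manifests/{}", revision_id), keys.join("\n").into_bytes());
    // 3. Written last: this is the commit point.
    store.put("latest-revision", revision_id.as_bytes().to_vec());
}
```

If the process dies halfway through, latest-revision still points at the previous, complete revision, so readers never see a manifest that references objects that aren't there yet.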

Then I added some sort of /pull endpoint to futile (and a way to disable watching for filesystem changes) that looks up the latest revision from S3, downloads its manifest, compares it with the objects available locally, downloads any missing objects, and kicks off building a new revision from there.
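Those /pull steps map onto a short function. This sketch uses a plain `HashMap` as a stand-in for both the bucket and the local object cache, and assumes hypothetical key names (`latest-revision`, `manifests/<id>`) and a one-key-per-line manifest format; `pull` is an illustrative name, not futile's handler.

```rust
use std::collections::{HashMap, HashSet};

/// Sketch of a `/pull` handler. Returns the revision ID and how many
/// objects had to be fetched.
fn pull(
    bucket: &HashMap<String, Vec<u8>>,
    local: &mut HashMap<String, Vec<u8>>,
) -> Result<(String, usize), String> {
    // 1. Which revision is current?
    let latest = bucket.get("latest-revision").ok_or("no latest-revision")?;
    let revision_id = String::from_utf8(latest.clone()).map_err(|e| e.to_string())?;

    // 2. Which objects does it need?
    let manifest_key = format!("manifests/{}", revision_id);
    let manifest = bucket.get(&manifest_key).ok_or("missing manifest")?;
    let manifest = std::str::from_utf8(manifest).map_err(|e| e.to_string())?;
    let needed: HashSet<&str> = manifest.lines().filter(|l| !l.is_empty()).collect();

    // 3. Download only what isn't already available locally.
    let mut fetched = 0;
    for key in needed {
        if !local.contains_key(key) {
            let body = bucket.get(key).ok_or_else(|| format!("missing object {}", key))?;
            local.insert(key.to_string(), body.clone());
            fetched += 1;
        }
    }
    // 4. This is where a new revision would be built from `local` and
    //    switched to atomically.
    Ok((revision_id, fetched))
}
```

Calling it twice in a row is harmless: the second call finds nothing missing and fetches zero objects, which is handy if a deploy gets retried.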

So now, every instance of futile in production would start up with an empty content folder, and I was able to make requests to /pull from my local futile instance to every production instance, reporting progress as it went.
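Fanning the /pull request out to every instance and reporting progress as results come in can be done with plain threads and a channel. In this sketch the actual HTTP request is abstracted behind a caller-supplied `pull_one` closure (hypothetical; plug in whatever HTTP client you like), and `pull_everywhere` and `Progress` are illustrative names.

```rust
use std::sync::{mpsc, Arc};
use std::thread;

/// Progress events reported back to the machine driving the deploy.
#[derive(Debug, PartialEq)]
enum Progress {
    Done { instance: String },
    Failed { instance: String, error: String },
}

/// Triggers a pull on every instance in parallel and collects results in
/// the order they arrive, printing each one as it comes in.
fn pull_everywhere<F>(instances: Vec<String>, pull_one: F) -> Vec<Progress>
where
    F: Fn(&str) -> Result<(), String> + Send + Sync + 'static,
{
    let pull_one = Arc::new(pull_one);
    let (tx, rx) = mpsc::channel();
    for instance in instances {
        let tx = tx.clone();
        let pull_one = Arc::clone(&pull_one);
        thread::spawn(move || {
            let event = match pull_one(&instance) {
                Ok(()) => Progress::Done { instance },
                Err(error) => Progress::Failed { instance, error },
            };
            // The receiver is alive until every sender is gone, so this succeeds.
            let _ = tx.send(event);
        });
    }
    drop(tx); // so the receiving loop ends once every worker has reported
    rx.into_iter()
        .inspect(|event| println!("{:?}", event)) // live progress
        .collect()
}
```

Results arrive in completion order rather than instance order, so one slow node doesn't hold up the progress report for the others.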

The new diagram looks something like this:

And the deploy process now looks something like this:

You can see some IPv6 addresses in that video there — don't waste your time, it's all private networking.

You might also be wondering: how are the instances discovered? Shouldn't there be a central place where they're all listed? The ADN in question provides an easy way to discover them: DNS. Again it's all internal/private, so, nothing to see here.
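With DNS-based discovery, finding every instance boils down to resolving one name and using every address it returns. Here's a sketch using the standard library's resolver. The host name and port are placeholders, since the internal naming scheme is provider-specific, and `pull_urls` is a hypothetical name.

```rust
use std::io;
use std::net::{SocketAddr, ToSocketAddrs};

/// Resolves every address behind an internal DNS name and turns each one
/// into a `/pull` URL.
fn pull_urls(host: &str, port: u16) -> io::Result<Vec<String>> {
    let mut addrs: Vec<SocketAddr> = (host, port).to_socket_addrs()?.collect();
    addrs.sort();
    addrs.dedup(); // resolvers may return the same address more than once
    // `SocketAddr`'s Display wraps IPv6 addresses in brackets ("[::1]:8080"),
    // which is exactly the form a URL needs.
    Ok(addrs.into_iter().map(|a| format!("http://{}/pull", a)).collect())
}
```

For example, `pull_urls("instances.internal", 8080)` (a made-up name) would yield one URL per instance, ready to hand to whatever sends the /pull requests.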

I might refine that deploy process in the future, but for now I'm fairly happy with it! I like the idea of being able to bootstrap new nodes from cloud storage at any time, and to not have to broadcast the content of delta updates from my local computer directly to a bunch of instances.

One could even imagine... some sort of CI pipeline doing that work, taking us all the way back to how most static website generators are set up. Everything new is old again.

This article is part 4 of the Updating fasterthanli.me for 2022 series.

If you liked what you saw, please support my work!

fasterthanlime