Peeking inside a Rust enum

- What the heck is that question about

- One word, many meanings

- But Rust enums aren't just that.

- Making our own enum

- A closer look at pointers

- What do we want? Alignment! Why do we want it? Well...

- Once upon a time, before ARMv5...

- Alignment still matters

-

A look at

smartstring's memory layout

Contents

During a recent Rust Q&A Session on my twitch

channel, someone asked a question that

seemed simple: why are small string types, like SmartString or SmolStr,

the same size as String, but small vec types, like SmallVec, are larger

than Vec?

Now I know I just used the adjective simple, but the truth of the matter is: to understand the question, we're going to need a little bit of background.

What the heck is that question about

I recently talked about Rust small string crates.

The types exposed by these crates can help avoid allocations, and lower overall memory usage. Let's take smartstring for example:

use smartstring::{Compact, SmartString};

use std::mem::size_of_val;

fn main() {

let smart = SmartString::<Compact>::from("hello world");

dbg!(size_of_val(&smart));

let stand = String::from("hello world");

dbg!(size_of_val(&stand));

}

$ cargo run -q [src/main.rs:6] size_of_val(&smart) = 24 [src/main.rs:9] size_of_val(&stand) = 24

As you can see - as was stated in the original question - those types are the same size.

That's... that's not the full story.

Surely String stores the actual data (the "contents" of the string)

somewhere else? Since all values of type String are 24 bytes on 64-bit

platforms.

Right, of course it's not the full story. In that particular example, smart

stores its data inline (on the stack), whereas stand stores its data on the

heap:

If we want to know how much memory total each type uses, we can do something like this instead:

let smart = SmartString::<Compact>::from("hello world");

dbg!(size_of_val(&smart));

let stand = String::from("hello world");

dbg!(size_of_val(&stand) + stand.capacity());

$ cargo run -q [src/main.rs:6] size_of_val(&smart) = 24 [src/main.rs:9] size_of_val(&stand) + stand.capacity() = 35

Ok but - you say "String stores its contents on the heap" like it's obvious.

Isn't there any way we can verify that?

There is!

Typically, on Linux 64-bit systems the stack and the heap are very far apart in the virtual address space, which means that if we print the address of the string's metadata and the address of its contents, we should see:

- That

SmartString's metadata and contents are next to each other - That

String's metadata and contents are far apart

use smartstring::{Compact, SmartString};

fn main() {

let smart = SmartString::<Compact>::from("hello world");

let smart_meta = &smart as *const _;

let smart_data = &smart.as_bytes()[0] as *const _;

dbg!((smart_meta, smart_data));

let stand = String::from("hello world");

let stand_meta = &stand as *const _;

let stand_data = &stand.as_bytes()[0] as *const _;

dbg!((stand_meta, stand_data));

}

$ cargo run -q

[src/main.rs:7] (smart_meta, smart_data) = (

0x00007ffce4cf4728,

0x00007ffce4cf4729,

)

[src/main.rs:12] (stand_meta, stand_data) = (

0x00007ffce4cf47f8,

0x0000555f87686a60,

)

Okay, I'm convinced. Does smart always store its data on the stack though?

No! Because it only has 24 bytes to work with, the same as String.

So if we go for a slightly longer string:

use smartstring::{Compact, SmartString};

fn main() {

let input = "Turns out you can blame your tools *and* be a good craftsperson. Who knew?";

let smart = SmartString::<Compact>::from(input);

let smart_meta = &smart as *const _;

let smart_data = &smart.as_bytes()[0] as *const _;

dbg!((smart_meta, smart_data));

let stand = String::from(input);

let stand_meta = &stand as *const _;

let stand_data = &stand.as_bytes()[0] as *const _;

dbg!((stand_meta, stand_data));

}

$ cargo run -q

[src/main.rs:9] (smart_meta, smart_data) = (

0x00007ffd460d0268,

0x0000555f4636ca30,

)

[src/main.rs:14] (stand_meta, stand_data) = (

0x00007ffd460d0338,

0x0000555f4636cac0,

)

...then we can see that, for both types, the contents is on the heap.

So, does that mean that SmartString is... a "two for one" kind of deal?

Except, you know, with types?

How does that work?

What a good-looking question. Let's talk about enums.

One word, many meanings

If you come from a C/C++/Java/C# background, an enum type is "just" an

integer type, for which only some values have a meaning.

Let's take this C code for example:

#include <stdio.h>

enum Drink {

Water,

Soda,

Juice,

};

int main() {

enum Drink dri = Soda;

printf("dri = %d\n", dri);

printf("sizeof(dri) = %ld\n", sizeof(dri));

printf("sizeof(int) = %ld\n", sizeof(int));

}

Here, we're declaring an enum type, enum Drink, and three variants,

which are just integers starting from zero, so we have Water = 0,

Soda = 1, Juice = 2:

$ clang -Wall main.c -o main && ./main dri = 1 sizeof(dri) = 4 sizeof(int) = 4

I'm not super fond of that code though - I like my type names to not have any extra qualifiers, and I like my variants to be namespaced. This being C, though, we have to do everything ourselves:

#include <stdio.h>

// this could also be done in two separate declarations

typedef enum Drink {

Drink_Water,

Drink_Soda,

Drink_Juice,

} Drink;

int main() {

Drink dri = Drink_Soda;

printf("dri = %d\n", dri);

printf("sizeof(dri) = %ld\n", sizeof(dri));

printf("sizeof(int) = %ld\n", sizeof(int));

}

Ahhh, better. There's other things I'm not particularly fond of there, like

C's switch semantics. This code is wrong, for example:

#include <stdio.h>

typedef enum Drink {

Drink_Water,

Drink_Soda,

Drink_Juice,

} Drink;

void print_drink(Drink dri) {

switch (dri) {

case Drink_Water:

printf("It's water!\n");

case Drink_Soda:

printf("It's soda!\n");

case Drink_Juice:

printf("It's juice!\n");

}

}

int main() {

print_drink(Drink_Soda);

}

$ clang -Wall main.c -o main && ./main It's soda! It's juice!

The correct code involves using break statements at the end of

each case, one of the things budding developers get to learn very

early on.

Amazingly, the same is true in C#, except it protects you against implicit fall-through with a hard compiler error. You can still implement explicit fall-through if you feel like it.

Even if we fix our switch, another thing I don't particularly

appreciate about C enums is that nothing prevents us from passing

values that are meaningless:

#include <stdio.h>

typedef enum Drink {

Drink_Water,

Drink_Soda,

Drink_Juice,

} Drink;

void print_drink(Drink dri) {

switch (dri) {

case Drink_Water:

printf("It's water!\n");

break;

case Drink_Soda:

printf("It's soda!\n");

break;

case Drink_Juice:

printf("It's juice!\n");

break;

}

}

int main() {

print_drink(47);

}

$ clang -Wall main.c -o main && ./main

(The program produced no output.)

Now, if we look at Rust enums... it's a different story.

Let's try to write roughly the same program.

use std::mem::size_of_val;

enum Drink {

Water,

Soda,

Juice,

}

fn main() {

let dri = Drink::Water;

dbg!(size_of_val(&dri));

dbg!(dri as u32);

}

$ cargo run -q warning: variant is never constructed: `Soda` --> src/main.rs:5:5 | 5 | Soda, | ^^^^ | = note: `#[warn(dead_code)]` on by default warning: variant is never constructed: `Juice` --> src/main.rs:6:5 | 6 | Juice, | ^^^^^ warning: 2 warnings emitted [src/main.rs:11] size_of_val(&dri) = 1 [src/main.rs:12] dri as u32 = 0

So, what immediate benefits do we get?

Let's see.. it still looks like an integer type - at least, we can cast

it to an u32. But it's namespaced by default?

Correct. And that's not all! The compiler warned us about unused variants,

and we can derive an implementation for the Debug trait easily:

#[derive(Debug)]

enum Drink {

Water,

Soda,

Juice,

}

fn main() {

print_drink(&Drink::Water);

print_drink(&Drink::Juice);

print_drink(&Drink::Soda);

}

fn print_drink(dri: &Drink) {

println!("{:?}", dri);

}

$ cargo run -q Water Juice Soda

...and even if we didn't, we'd be able to use a match rather than

a switch, which checks for exhaustiveness, so for example, this won't

compile:

fn print_drink(dri: &Drink) {

match dri {

Drink::Water => println!("it's water!"),

Drink::Soda => println!("it's soda!"),

}

}

$ cargo run -q

error[E0004]: non-exhaustive patterns: `&Juice` not covered

--> src/main.rs:15:11

|

2 | / enum Drink {

3 | | Water,

4 | | Soda,

5 | | Juice,

| | ----- not covered

6 | | }

| |_- `Drink` defined here

...

15 | match dri {

| ^^^ pattern `&Juice` not covered

|

= help: ensure that all possible cases are being handled, possibly by adding wildcards or more match arms

= note: the matched value is of type `&Drink`

The compiler suggests two possible fixes, either add a wildcard:

fn print_drink(dri: &Drink) {

match dri {

Drink::Water => println!("it's water!"),

Drink::Soda => println!("it's soda!"),

_ => println!("it's something we don't know about!"),

}

}

...or just handle all cases:

fn print_drink(dri: &Drink) {

match dri {

Drink::Water => println!("it's water!"),

Drink::Soda => println!("it's soda!"),

Drink::Juice => println!("it's juice!"),

}

}

Does this sound like a lot of typing? Luckily, rust-analyzer comes with a "Fill match arms" feature, which generates a large part of that code for you.

Speaking of match, it's also an expression, so we can do this:

fn print_drink(dri: &Drink) {

println!(

"{}",

match dri {

Drink::Water => "it's water!",

Drink::Soda => "it's soda!",

Drink::Juice => "it's juice!",

}

)

}

Although personally, I would probably write something more along those lines:

fn print_drink(dri: &Drink) {

let name = match dri {

Drink::Water => "water",

Drink::Soda => "soda",

Drink::Juice => "juice",

};

println!("it's {}!", name)

}

Let's see, what else have I been complaining about regarding C-style enums..

That you could pass meaningless values!

Right! Let's try that:

fn main() {

print_drink(&Drink::Water);

print_drink(&Drink::Juice);

print_drink(&Drink::Soda);

let val: Drink = 4 as Drink;

print_drink(&val);

}

$ cargo run -q error[E0605]: non-primitive cast: `i32` as `Drink` --> src/main.rs:13:22 | 13 | let val: Drink = 4 as Drink; | ^^^^^^^^^^ | = note: an `as` expression can only be used to convert between primitive types. Consider using the `From` trait

Ah, that doesn't work! There's cases where we'd need to do that though, let's

say we're parsing a binary format, and we've already checked that the numeric

value is meaningful for that type - then we can just use transmute:

#[allow(dead_code)]

enum Drink {

Water,

Soda,

Juice,

}

fn main() {

let juice_from_binary_format = 2;

let val: Drink = unsafe { std::mem::transmute(juice_from_binary_format as u8) };

print_drink(&val);

}

fn print_drink(dri: &Drink) {

let name = match dri {

Drink::Water => "water",

Drink::Soda => "soda",

Drink::Juice => "juice",

};

println!("it's {}!", name)

}

$ cargo run -q it's juice!

Of course, we'd normally want to provide a safe interface to this unsafe operation, something like:

use std::convert::{TryFrom, TryInto};

#[allow(dead_code)]

enum Drink {

Water,

Soda,

Juice,

}

impl TryFrom<i32> for Drink {

type Error = &'static str;

fn try_from(x: i32) -> Result<Self, Self::Error> {

match x {

0..=2 => Ok(unsafe { std::mem::transmute(x as u8) }),

_ => Err("invalid Drink value"),

}

}

}

fn main() {

let juice_from_binary_format: i32 = 2;

let val: Drink = juice_from_binary_format.try_into().unwrap();

print_drink(&val);

let invalid_value: i32 = 4;

let val: Drink = invalid_value.try_into().unwrap();

print_drink(&val);

}

$ cargo run -q it's juice! thread 'main' panicked at 'called `Result::unwrap()` on an `Err` value: "invalid Drink value"', src/main.rs:27:22 note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

And the fun doesn't stop there.

Remember when we printed the size of our enum? Let's refresh our memory:

use std::mem::size_of;

#[allow(dead_code)]

enum Drink {

Water,

Soda,

Juice,

}

fn main() {

dbg!(size_of::<Drink>());

}

$ cargo run -q [src/main.rs:11] size_of::<Drink>() = 1

The unit here is "bytes", so our enum is one byte.

Let's compare with C:

#include <stdio.h>

typedef enum Drink {

Drink_Water,

Drink_Soda,

Drink_Juice,

} Drink;

int main() {

printf("sizeof(Drink) = %ld\n", sizeof(Drink));

}

$ clang -Wall main.c -o main && ./main sizeof(Drink) = 4

That enum is four bytes. So, right now, we could say that our Rust enum

is "sorta like" an u8 (unsigned integer, 0..256) and our C enum is

"sorta like" an u32 (unsigned integer, 0..4_294_967_296).

What would happen if our Rust enum had more than 256 variants?

use std::mem::size_of;

#[allow(dead_code)]

enum Drink {

Variant0,

Variant1,

Variant2,

Variant3,

Variant4,

// (etc.)

Variant252,

Variant253,

Variant254,

Variant255,

Variant256,

}

fn main() {

dbg!(size_of::<Drink>());

}

Wait, isn't that just 256 variants?

Nice try cool bear, but no! You're counting the fences, when you should be counting the posts.

Anyway, what's the size of our Drink enum now?

$ cargo run -q [src/main.rs:265] size_of::<Drink>() = 2

Two bytes! "Sorta like" an u16.

Of course "sorta like" is not the technical term - the technical term is a "representation". A Rust enum is an abstraction over a specific integer type.

Let's go back to our original Drink enum:

On a conceptual level, there's only three meaningful values for Drink,

but the representation, an u8, can hold 256 different values. That's why

you can always turn a Drink into an u8, but turning an u8 into a

Drink is a fallible operation.

Right! That the underlying u8 for a Drink value should always be 0, 1, or 2,

is called an invariant. If we break that invariant, our code is unsound.

In Rust, this requires using unsafe code:

use std::mem::transmute;

#[allow(dead_code)]

#[derive(Debug, PartialEq)]

enum Drink {

Water,

Soda,

Juice,

}

fn main() {

// woops! that's unsound.

let d: Drink = unsafe { transmute(15_u8) };

dbg!(&d);

dbg!(d == Drink::Juice);

}

$ cargo run -q [src/main.rs:14] &d = Juice [src/main.rs:15] d == Drink::Juice = false

Oh that's bad - but you can't accidentally end up with an invalid Drink

value without using unsafe Rust code, right?

Correct! The Rust unsafe keyword marks areas of the code where fewer

restrictions apply, code that is potentially dangerous and to which special

attention should be paid during review.

That sounds bad. Isn't unsafe code bad?

Sometimes, it's unavoidable. But that's only sometimes! Library authors often

discover that they didn't actually need unsafe code to do what they

wanted to do, and they can replace it with safe code - and that's one less

code region to worry about.

Couldn't the language itself be improved so that no unsafe code is needed at all?

No unsafe code is a big ask. But less unsafe code, definitely. For *example, there's a Working Group for safe(r) transmute.

The issue is that not everything that a computer can do (and that you need to

do) can be checked using Rust's model. For some things, you stil need unsafe code.

There's no such thing as "two Rusts", there's just different levels of risk, and thus different levels of trust.

If you trust the Rust core team to root out unsoundness in the standard library, you can let even junior members of your team write safe Rust code on top of it, protected by all of Rust's safety guarantees:

But Rust enums aren't just that.

Cool bear said earlier that SmartString was a "two for one" kind of deal.

And cool bear was right!

Let's make another two-for-one deal:

enum UserID {

Number(u64),

Text(String),

}

UserID is a sum type. A UserID value can either be the UserID::Number

variant, or the UserID::Text variant. If we want to operate on its contents,

we need to match it:

fn print_user_id(id: &UserID) {

match id {

UserID::Number(n) => {

println!("user id number {}", n);

}

UserID::Text(s) => println!("user id {}", s),

}

}

Hopefully this feels familiar, as we've used match on simpler enums before.

Let's take print_user_id for a spin:

fn main() {

print_user_id(&UserID::Number(79));

print_user_id(&UserID::Text("fh99a73gbh8".into()));

}

$ cargo run -q user id number 79 user id fh99a73gbh8

Wonderful. We've seen other sum types before in that article: when we

implemented TryInto, we were returning a Result<T, E>:

impl TryFrom<i32> for Drink {

type Error = &'static str;

fn try_from(x: i32) -> Result<Self, Self::Error> {

// omitted

}

}

Result is just an enum, here's its actual definition:

pub enum Result<T, E> {

/// Contains the success value

Ok(T),

/// Contains the error value

Err(E),

}

But let's get back to our UserID enum:

enum UserID {

Number(u64),

Text(String),

}

What's the size of this type?

If we were trying to emulate Rust enums in C, we could do something like this:

#include <stdint.h>

#include <stdio.h>

enum UserIDKind {

UserIDKind_Number,

UserIDKind_Text,

};

struct UserID {

enum UserIDKind kind;

uint64_t number;

char *text;

};

Even though we only have two variants, we need a third field, just so we know which variant we're dealing with.

For example, in print_user_id, we could use a switch to know whether we're

dealing with the Number variant or the Text variant:

void print_user_id(struct UserID* id) {

switch (id->kind) {

case UserIDKind_Number:

printf("user id number %lu\n", id->number);

break;

case UserIDKind_Text:

printf("user id %s\n", id->text);

break;

}

}

And when we initialize a UserID struct, we only need to initialize

the fields we care about - if kind is Number, then we need to set

the number field. If kind is Text, then we need to set the

text field:

int main() {

struct UserID a = {

.kind = UserIDKind_Number,

.number = 79,

};

print_user_id(&a);

struct UserID b = {

.kind = UserIDKind_Text,

.text = "fh99a73gbh8",

};

print_user_id(&b);

}

And this does work!

$ clang -Wall main.c -o main && ./main user id number 79 user id fh99a73gbh8

But it's not ideal - first of all, we don't have any of the guarantees

of Rust sum types: nothing prevents us from creating a UserID with

its kind set to number but only the text field set:

int main() {

struct UserID woops = {

.kind = UserIDKind_Number,

.text = "woops",

};

print_user_id(&woops);

}

$ clang -Wall main.c -o main && ./main user id number 0

What we have here is a leaky abstraction - we get to access the underlying

representation of UserID directly, and we can manipulate it in unsound

ways.

For example, what if our UserID was allocated on the heap using malloc, which

doesn't zero out memory?

#include <stdlib.h>

int main() {

struct UserID *woops = malloc(sizeof(struct UserID));

woops->kind = UserIDKind_Text;

woops->number = 79;

print_user_id(woops);

}

In a debug build, things don't go too wrong:

$ clang -Wall main.c -o main && ./main user id (null)

In a release build though, with optimizations turned on... fun stuff happens:

$ clang -O3 -Wall main.c -o main && ./main user id } $ clang -O3 -Wall main.c -o main && ./main user id $ clang -O3 -Wall main.c -o main && ./main user id m

Where is it reading from? Who knows! It doesn't segfault though - which means it's reading from other parts of our program's memory. In a larger program, this could be confidential user data, and that bug could be used to exfiltrate it.

But this is not an article about the dangers of C,

...all your articles are about the dangers of C.

...fair enough - but let's look at what else is suboptimal here.

Specifically, let's look at the size of our struct UserID:

$ clang -Wall main.c -o main && ./main sizeof(struct UserID) = 24

24 bytes. It's just a struct, so we could've probably computed

that ourselves:

int main() {

printf("sizeof(struct UserID) = %ld\n", sizeof(struct UserID));

printf("%ld + %ld + %ld = %ld\n",

sizeof(enum UserIDKind), sizeof(uint64_t), sizeof(char *),

sizeof(enum UserIDKind) + sizeof(uint64_t) + sizeof(char *)

);

}

$ clang -Wall main.c -o main && ./main sizeof(struct UserID) = 24 4 + 8 + 8 = 20

Oh, uh, woops. We got it wrong.

Turns out, there's padding between kind and number, so that

all our fields are 64-bit aligned. (More on that later).

Which is why our UserID struct is actually 3*8 = 24 bytes.

Of course, we can instruct the compiler to not add padding, and then our calculation is correct:

struct __attribute__((packed)) UserID {

enum UserIDKind kind;

uint64_t number;

char *text;

};

$ clang -Wall main.c -o main && ./main sizeof(struct UserID) = 20 4 + 8 + 8 = 20

Now let's look at the size of our Rust UserID enum:

use std::mem::size_of;

#[allow(dead_code)]

enum UserID {

Number(u64),

Text(String),

}

fn main() {

dbg!(size_of::<UserID>());

}

$ cargo run -q [src/main.rs:10] size_of::<UserID>() = 32

Oh, uh, that's big. Too big. I don't think Rust's String type is just a

single pointer to a null-terminated (C-style) string. I think it's a little more involved.

So, in the interest of fairness, let's jam a raw pointer in our Rust struct instead:

use std::{mem::size_of, os::raw::c_char};

#[allow(dead_code)]

enum UserID {

Number(u64),

Text(*const c_char),

}

fn main() {

dbg!(size_of::<UserID>());

}

$ cargo run -q [src/main.rs:10] size_of::<UserID>() = 16

Ok, that's more reasonable. And smaller than our C version! What's happening there?

First off - there has to be an equivalent to our kind field. In Rust, it's

called a discriminant. It's also the "tag" as in "tagged unions".

Welcome to Computers, where everything has at least three different names.

And then... I guess it's saving space by overlapping the u64 and the

*const c_char, since either of them could be valid, but never both at the

same time: that's why we also call this construct a "disjoint union".

That's 16 bytes total.

Can we do the same thing in C? Sure we can! There's a union keyword, like,

right there. It's just like struct, except everything overlaps, and it

has the size of the largest member (more or less - there's also alignment

considerations).

And indeed, if we do this:

struct UserID {

enum UserIDKind kind;

union {

uint64_t number;

char *text;

};

};

int main() {

printf("sizeof(struct UserID) = %ld\n", sizeof(struct UserID));

}

Then we get the same result as Rust:

$ clang -Wall main.c -o main && ./main sizeof(struct UserID) = 16

We can go even further - we can switch from an int (4 bytes for our current

target, which happens to be clang 10 on 64-bit Linux) to an uint8_t:

struct UserID {

uint8_t kind;

union {

uint64_t number;

char *text;

};

};

$ clang -Wall main.c -o main && ./main sizeof(struct UserID) = 16

Mhh that didn't change much...

Much like commercial food packaging - it's probably mostly padding.

Ah right! Let's try packing our struct again.

struct __attribute__((packed)) UserID {

uint8_t kind;

union {

uint64_t number;

char *text;

};

};

$ clang -Wall main.c -o main && ./main sizeof(struct UserID) = 9

Just nine bytes! Now that's compact.

Can we do the same with our Rust enum?

Let's look at structs first - just like in C, Rust defaults to proper

alignment for fields, so if we have an u8 and an u64:

struct Foo {

bar: u8,

baz: u64,

}

fn main() {

dbg!(size_of::<Foo>());

}

...then we end up with a 16-byte struct:

$ cargo run -q [src/main.rs:16] size_of::<Foo>() = 16

But, just like in C, if we ask nicely, Rust will pack it:

#[repr(packed)]

struct Foo {

bar: u8,

baz: u64,

}

$ cargo run -q [src/main.rs:17] size_of::<Foo>() = 9

But what about enums?

#[repr(packed)]

enum UserID {

Number(u64),

Text(*const c_char),

}

$ cargo run -q

error[E0517]: attribute should be applied to struct or union

--> src/main.rs:4:8

|

4 | #[repr(packed)]

| ^^^^^^

5 | / enum UserID {

6 | | Number(u64),

7 | | Text(*const c_char),

8 | | }

| |_- not a struct or union

We can't pack them! It's been discussed before, along with other exotic enum layout optimizations, but currently, you can't do it.

And yet... clearly smartstring is doing something like that.

When a SmartString is stored on the heap (its "boxed" variant), it's 24

bytes, just like a String.

But if we try to make our own smartstring, with a Rust enum, we can't

even get close to that:

use std::mem::size_of;

#[allow(dead_code)]

enum SmartString {

Boxed(String),

Inline([u8; 24]),

}

fn main() {

dbg!(size_of::<String>());

dbg!(size_of::<[u8; 24]>());

dbg!(size_of::<SmartString>());

}

$ cargo run -q [src/main.rs:10] size_of::<String>() = 24 [src/main.rs:11] size_of::<[u8; 24]>() = 24 [src/main.rs:12] size_of::<SmartString>() = 32

There is one thing we can do - since Rust won't pack our enum, we can just make our own enum.

Making our own enum

First off, we can't use Rust unions, because it only accepts fields of types that 1) are Copy, 2) do not implement Drop.

But what we can do... is just treat any type as what it is: a miserable little pile of bytes.

use std::mem::size_of;

#[allow(dead_code)]

#[repr(packed)]

struct SmartString {

discriminant: u8,

data: [u8; 24],

}

fn main() {

dbg!(size_of::<SmartString>());

}

$ cargo run -q [src/main.rs:11] size_of::<SmartString>() = 25

There! 25 bytes. It's the best we can hope for right now.

But it doesn't actually do anything yet - we're just storing 25 bytes in a struct.

We need to figure out a way to store both our variants:

- boxed: a

String - inline: some utf-8 bytes, and I guess a length?

In the "inline" variant, we can never store more than 24 bytes, so we can

definitely represent the length as an u8, too. So we actually want something

like this:

struct Inline {

len: u8,

data: [u8; 23],

}

And then, to be sure all our types actually have the same size, we'll use the static_assertions crate:

$ cargo add static_assertions

Adding static_assertions v1.1.0 to dependencies

use static_assertions::*;

use std::mem::size_of;

#[allow(dead_code)]

#[repr(packed)]

struct SmartString {

discriminant: u8,

data: [u8; 24],

}

#[allow(dead_code)]

struct Inline {

len: u8,

data: [u8; 23],

}

assert_eq_size!(String, Inline);

fn main() {

dbg!(size_of::<SmartString>());

}

$ cargo check

Finished dev [unoptimized + debuginfo] target(s) in 0.00s

Good. But to be extra super duper sure, since we're going to be doing a lot

of unsafe stuff, we can even define our Inline in terms of the size of a

String, so it's checked both ways:

use static_assertions::*;

use std::mem::size_of;

const VARIANT_SIZE: usize = std::mem::size_of::<String>();

#[allow(dead_code)]

#[repr(packed)]

struct SmartString {

discriminant: u8,

data: [u8; VARIANT_SIZE],

}

#[allow(dead_code)]

struct Inline {

len: u8,

data: [u8; VARIANT_SIZE - 1],

}

assert_eq_size!(String, Inline);

fn main() {

dbg!(size_of::<SmartString>());

}

Okay. Now let's actually implement our hand-rolled enum. The first thing we'll need is... to actually be able to build it.

We're only using [u8; VARIANT_SIZE] to reserve VARIANT_SIZE bytes - if

we actually want to store something in there, we're going to need to obtain

a *mut pointer to it, and cast it to whatever we want:

impl SmartString {

pub fn new_boxed(s: String) -> Self {

Self::new(0, s)

}

pub fn new_inline() -> Self {

Self::new(

1,

Inline {

len: 0,

data: Default::default(),

},

)

}

fn new<T>(discriminant: u8, data: T) -> Self {

let mut res = Self {

discriminant,

data: Default::default(),

};

let ptr: *mut T = res.data.as_mut_ptr().cast();

unsafe { ptr.write_unaligned(data) };

res

}

}

We can now build both variants of our SmartString:

fn main() {

let boxed = SmartString::new_boxed("This is a longer string, would not fit inline".into());

let inline = SmartString::new_inline();

}

Except we can't do much with them beyond that point.

Let's try to do something useful, like, obtaining a &str slice from them:

impl AsRef<str> for SmartString {

fn as_ref(&self) -> &str {

match self.discriminant {

0 => {

let s: *const ManuallyDrop<String> = self.data.as_ptr().cast();

let tmp = unsafe { s.read_unaligned() };

unsafe { &*(tmp.as_ref() as *const str) }

}

1 => {

let s: *const Inline = self.data.as_ptr().cast();

unsafe {

let slice = std::slice::from_raw_parts((*s).data.as_ptr(), (*s).len as _);

std::str::from_utf8_unchecked(slice)

}

}

_ => unreachable!(),

}

}

}

Remember my bit about how we review unsafe code extra hard to make sure

it doesn't violate invariants? That definitely applies here. We're using

the "safer" variant, unreachable, but if I was feeling adventurous, I'd

think about using unreachable_unchecked instead.

Now that we have an AsRef implementation, we can actually print the

contents of our SmartString - no matter what the underlying variant is.

For convenience, we'll implement Display and Debug:

use std::fmt;

impl fmt::Display for SmartString {

fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

let s: &str = self.as_ref();

fmt::Display::fmt(s, f)

}

}

impl fmt::Debug for SmartString {

fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

let s: &str = self.as_ref();

fmt::Debug::fmt(s, f)

}

}

fn main() {

let boxed = SmartString::new_boxed("This is a longer string, would not fit inline".into());

let inline = SmartString::new_inline();

dbg!(boxed, inline);

}

$ cargo run -q [src/main.rs:84] boxed = "This is a longer string, would not fit inline" [src/main.rs:84] inline = ""

We're still missing a lot of things - we can't mutate our SmartString at

all, which the smartstring crate allows. We also never "demote" a boxed

variant to an inline variant, and we haven't had to think about "promoting"

an inline variant to a boxed variant, in case it grows too much.

But there's a much more pressing worry.

Allow me to demonstrate.

fn main() {

let s: String = "this is just some text".into();

dbg!(s);

}

$ cargo build --quiet --release && valgrind --tool=memcheck ./target/release/enumpeek ==173592== Memcheck, a memory error detector ==173592== Copyright (C) 2002-2017, and GNU GPL'd, by Julian Seward et al. ==173592== Using Valgrind-3.16.1 and LibVEX; rerun with -h for copyright info ==173592== Command: ./target/release/enumpeek ==173592== [src/main.rs:82] s = "this is just some text" ==173592== ==173592== HEAP SUMMARY: ==173592== in use at exit: 0 bytes in 0 blocks ==173592== total heap usage: 15 allocs, 15 frees, 2,335 bytes allocated ==173592== ==173592== All heap blocks were freed -- no leaks are possible ==173592== ==173592== For lists of detected and suppressed errors, rerun with: -s ==173592== ERROR SUMMARY: 0 errors from 0 contexts (suppressed: 0 from 0)

fn main() {

let s: SmartString = SmartString::new_boxed("this is just some text".into());

dbg!(s);

}

$ cargo build --quiet --release && valgrind --tool=memcheck ./target/release/enumpeek ==173779== Memcheck, a memory error detector ==173779== Copyright (C) 2002-2017, and GNU GPL'd, by Julian Seward et al. ==173779== Using Valgrind-3.16.1 and LibVEX; rerun with -h for copyright info ==173779== Command: ./target/release/enumpeek ==173779== [src/main.rs:82] s = "this is just some text" ==173779== ==173779== HEAP SUMMARY: ==173779== in use at exit: 22 bytes in 1 blocks ==173779== total heap usage: 15 allocs, 14 frees, 2,335 bytes allocated ==173779== ==173779== LEAK SUMMARY: ==173779== definitely lost: 22 bytes in 1 blocks ==173779== indirectly lost: 0 bytes in 0 blocks ==173779== possibly lost: 0 bytes in 0 blocks ==173779== still reachable: 0 bytes in 0 blocks ==173779== suppressed: 0 bytes in 0 blocks ==173779== Rerun with --leak-check=full to see details of leaked memory ==173779== ==173779== For lists of detected and suppressed errors, rerun with: -s ==173779== ERROR SUMMARY: 0 errors from 0 contexts (suppressed: 0 from 0)

We're leaking memory!

String is a struct just like any other struct, but it owns memory that is

heap-allocated. In SmartString::new_boxed, we take ownership of the

String... and its associated heap-allocated memory... and then we just

never free it.

The compiler doens't know to drop the String we're holding inside of our

boxed-variant SmartString, because that's not the type we're holding - as

far as it knows, we're just holding 24 bytes, which could be anything.

If we know that it is, in fact, a String, and that these need to be dropped,

then we need to say so.

Here's our first stab at Drop:

impl Drop for SmartString {

fn drop(&mut self) {

match self.discriminant {

0 => {

let s: *mut String = self.data.as_mut_ptr().cast();

let b: String = unsafe { *s };

drop(b);

}

1 => {

// etc.

}

_ => unreachable!(),

}

}

}

$ cargo run -q

error[E0507]: cannot move out of `*s` which is behind a raw pointer

--> src/main.rs:46:42

|

46 | let b: String = unsafe { *s };

| ^^

| |

| move occurs because `*s` has type `std::string::String`, which does not implement the `Copy` trait

| help: consider borrowing here: `&*s`

Woops, that doesn't work. We can't move from a raw pointer since String is not copy.

What can we do.. can we maybe make a Box from it? It has from_raw, which sounds

delightful:

impl Drop for SmartString {

fn drop(&mut self) {

match self.discriminant {

0 => {

let s: *mut String = self.data.as_mut_ptr().cast();

let b = unsafe { Box::from_raw(s) };

drop(b);

}

1 => {

// etc.

}

_ => unreachable!(),

}

}

}

$ cargo run -q [src/main.rs:117] s = "this is just some text" free(): invalid pointer [1] 179297 abort (core dumped) cargo run -q

Uh oh.

Wow, you weren't lying, unsafe code is tricky.

Let's check in with our friend Valgrind:

$ cargo build --quiet --release && valgrind --tool=memcheck ./target/release/enumpeek

==179648== Memcheck, a memory error detector

==179648== Copyright (C) 2002-2017, and GNU GPL'd, by Julian Seward et al.

==179648== Using Valgrind-3.16.1 and LibVEX; rerun with -h for copyright info

==179648== Command: ./target/release/enumpeek

==179648==

[src/main.rs:117] s = "this is just some text"

==179648== Invalid free() / delete / delete[] / realloc()

==179648== at 0x483B9AB: free (vg_replace_malloc.c:538)

==179648== by 0x10D501: enumpeek::main (in /home/amos/ftl/enumpeek/target/release/enumpeek)

==179648== by 0x10D8E2: std::rt::lang_start::{{closure}} (in /home/amos/ftl/enumpeek/target/release/enumpeek)

==179648== by 0x1163F7: {{closure}} (rt.rs:52)

==179648== by 0x1163F7: do_call<closure-0,i32> (panicking.rs:297)

==179648== by 0x1163F7: try<i32,closure-0> (panicking.rs:274)

==179648== by 0x1163F7: catch_unwind<closure-0,i32> (panic.rs:394)

==179648== by 0x1163F7: std::rt::lang_start_internal (rt.rs:51)

==179648== by 0x10D561: main (in /home/amos/ftl/enumpeek/target/release/enumpeek)

==179648== Address 0x1ffefff561 is on thread 1's stack

==179648== in frame #1, created by enumpeek::main (???:)

==179648==

==179648==

==179648== HEAP SUMMARY:

==179648== in use at exit: 0 bytes in 0 blocks

==179648== total heap usage: 15 allocs, 16 frees, 2,335 bytes allocated

==179648==

==179648== All heap blocks were freed -- no leaks are possible

==179648==

==179648== For lists of detected and suppressed errors, rerun with: -s

==179648== ERROR SUMMARY: 1 errors from 1 contexts (suppressed: 0 from 0)

The problem here is that it's trying to deallocate the String as if it had been

allocated on the heap. But it hasn't! The String's internal data has been

allocated on the heap, but the String struct itself hasn't.

Here's one way that does appear to work:

impl Drop for SmartString {

fn drop(&mut self) {

match self.discriminant {

0 => {

let s: *mut String = self.data.as_mut_ptr().cast();

let s: String = unsafe { std::ptr::read_unaligned(s) };

drop(s);

}

1 => {

// etc.

}

_ => unreachable!(),

}

}

}

We can even:

- Make a generic internal method to avoid code duplication with the

Inlinecase - Forget about the

drop, since dropping will happen as soon as the result ofstd::ptr::read_unalignedgoes out of scope

Let's go!

impl SmartString {

fn drop_variant<T>(&self) {

unsafe { std::ptr::read_unaligned(self.data.as_ptr().cast::<T>()) };

}

}

impl Drop for SmartString {

fn drop(&mut self) {

match self.discriminant {

0 => unsafe { self.drop_variant::<String>() },

1 => unsafe { self.drop_variant::<Inline>() },

_ => unreachable!(),

}

}

}

$ cargo build --quiet --release && valgrind --tool=memcheck ./target/release/enumpeek ==181085== Memcheck, a memory error detector ==181085== Copyright (C) 2002-2017, and GNU GPL'd, by Julian Seward et al. ==181085== Using Valgrind-3.16.1 and LibVEX; rerun with -h for copyright info ==181085== Command: ./target/release/enumpeek ==181085== [src/main.rs:99] s = "this is just some text" ==181085== ==181085== HEAP SUMMARY: ==181085== in use at exit: 0 bytes in 0 blocks ==181085== total heap usage: 15 allocs, 15 frees, 2,335 bytes allocated ==181085== ==181085== All heap blocks were freed -- no leaks are possible ==181085== ==181085== For lists of detected and suppressed errors, rerun with: -s ==181085== ERROR SUMMARY: 0 errors from 0 contexts (suppressed: 0 from 0)

Wonderful! Is it actually correct? I don't know! I wouldn't use it in production before asking a bunch of other folks to look at it first. But at least, in our case, it doesn't seem to leak memory or do invalid frees.

That's always good.

We could go on all day re-implementing all of smartstring's features

on our homegrown version, but the point remains: our version is larger.

One entire byte larger.

Much like the smallvec::SmallVec type is larger than the Vec type.

$ cargo add smallvec

Adding smallvec v1.4.2 to dependencies

use std::mem::size_of;

use smallvec::SmallVec;

fn main() {

dbg!(size_of::<Vec<u8>>(), size_of::<SmallVec<[u8; 1]>>());

}

$ cargo run -q [src/main.rs:100] size_of::<Vec<u8>>() = 24 [src/main.rs:100] size_of::<SmallVec<[u8; 1]>>() = 32

So, hopefully, by now, 44 whole-ass minutes into this article, you understand exactly why that is an interesting question.

The mystery is not why SmallVec<[u8; 1]> is 32 bytes, 8 more than a

Vec<u8>. A SmallVec is "just an enum" - its discriminant only needs to

account for two variants, but because Rust enums have padding to maintain

alignment, we pay 8 bytes for it.

The mystery is, how the heck is a SmartString just 24 bytes.

To answer that question, we need to take a closer look at pointers.

A closer look at pointers

So, what's a pointer? Just a number right? That tells you where in memory something is.

For example, if we declare a local x, of type i32, it probably ends up

on the stack:

fn main() {

// this is a signed 32-bit integer

let x = 30;

// this is a reference to a signed 32-bit integer

let x_ref = &x;

// this is a pointer to a signed 32-bit integer

let x_ptr = x_ref as *const _;

dbg!(x_ptr);

}

$ cargo run -q [src/main.rs:105] x_ptr = 0x00007fff10be39ec

Of course, a local could also be in a register. But that's irrelevant here. As soon as we take the address of something, it needs to be mapped somewhere in our virtual memory address space, and registers aren't, so for the purposes of this explanation, let's pretend registers don't exist.

Yeah let's just completely disregard the fastest storage available on a modern computer, seems good.

Look cool bear, do you want to finish this article?

yawn no no, go ahead.

So. Number that tells you where in memory something is. It is very much an address, the same way some countries have addresses for physical places, except with more indirection.

An "aligned" pointer is a pointer whose value (the address) is a multiple of the data size. It is convenient for CPUs when data has its "natural alignment".

Let's look at some examples.

The smallest unit of memory we can address is a byte. A pointer to a byte is

always aligned, since the number of a pointer counts bytes. In other

words, the alignment of u8 is 1.

fn main() {

let arr = [1u8, 2u8, 3u8, 4u8];

dbg!(

&arr[0] as *const _,

&arr[1] as *const _,

&arr[2] as *const _,

&arr[3] as *const _,

);

}

$ cargo run -q [src/main.rs:106] &arr[0] as *const _ = 0x00007ffd6474abdc [src/main.rs:106] &arr[1] as *const _ = 0x00007ffd6474abdd [src/main.rs:106] &arr[2] as *const _ = 0x00007ffd6474abde [src/main.rs:106] &arr[3] as *const _ = 0x00007ffd6474abdf

If we're talking about pointers to u16, then we want an alignment of 2.

fn main() {

let arr = [1u16, 2u16, 3u16, 4u16];

fn inspect<T>(t: *const T) -> (*const T, bool) {

(t, t as usize % 2 == 0)

}

dbg!(

inspect(&arr[0] as *const _),

inspect(&arr[1] as *const _),

inspect(&arr[2] as *const _),

inspect(&arr[3] as *const _),

);

}

$ cargo run -q

[src/main.rs:110] inspect(&arr[0] as *const _) = (

0x00007ffd81bf5918,

true,

)

[src/main.rs:110] inspect(&arr[1] as *const _) = (

0x00007ffd81bf591a,

true,

)

[src/main.rs:110] inspect(&arr[2] as *const _) = (

0x00007ffd81bf591c,

true,

)

[src/main.rs:110] inspect(&arr[3] as *const _) = (

0x00007ffd81bf591e,

true,

)

For u32 values, we want an alignment of 4, and for u64 values, we want

an alignment of 8.

For the visual thinkers among us, here are some examples of values that are properly aligned:

The little boxes at the bottom represent what types we can store at these locations if we want them to be properly aligned.

The top part represents the actual memory layout of, for example, a struct, like this one here:

#include <stdint.h>

#include <stdio.h>

#include <stddef.h>

struct S {

uint8_t a;

uint8_t b;

uint16_t c;

uint32_t d;

};

int main() {

printf("sizeof(S) = %ld\n", sizeof(struct S));

printf("offsetof(struct S, a) = %zu\n", offsetof(struct S, a));

printf("offsetof(struct S, b) = %zu\n", offsetof(struct S, b));

printf("offsetof(struct S, c) = %zu\n", offsetof(struct S, c));

printf("offsetof(struct S, d) = %zu\n", offsetof(struct S, d));

}

$ clang -Wall main.c -o main && ./main sizeof(S) = 8 offsetof(struct S, a) = 0 offsetof(struct S, b) = 1 offsetof(struct S, c) = 2 offsetof(struct S, d) = 4

Here, things work out just fine - but they could be less ideal.

For example, we could have this layout instead:

struct S {

uint8_t a;

uint16_t b;

uint8_t c;

uint32_t d;

};

What would this look like?

$ clang -Wall main.c -o main && ./main sizeof(S) = 12 offsetof(struct S, a) = 0 offsetof(struct S, b) = 2 offsetof(struct S, c) = 4 offsetof(struct S, d) = 8

To maintain alignment, the compiler has inserted padding:

"Padding" is not necessarily zeroed - it's just unused space. Even if it's initially zeroed, it's not guaranteed to stay zero if you assign members.

Regular usage of the struct might mess with the values in the padding, and so

a good old block memory comparison (memcmp) would not be enough to test two

structs for equality.

What happens if we define the same struct layout in Rust?

fn main() {

struct S {

a: u8,

b: u16,

c: u8,

d: u32,

}

dbg!(std::mem::size_of::<S>());

}

$ cargo run -q [src/main.rs:112] std::mem::size_of::<S>() = 8

It's only 8 bytes again! What happened? Let's look at its layout:

$ cargo add memoffset

Adding memoffset v0.5.5 to dependencies

fn main() {

struct S {

a: u8,

b: u16,

c: u8,

d: u32,

}

use memoffset::offset_of;

dbg!(

std::mem::size_of::<S>(),

offset_of!(S, a),

offset_of!(S, b),

offset_of!(S, c),

offset_of!(S, d)

);

}

$ cargo run -q [src/main.rs:113] std::mem::size_of::<S>() = 8 [src/main.rs:113] offset_of!(S, a) = 6 [src/main.rs:113] offset_of!(S, b) = 4 [src/main.rs:113] offset_of!(S, c) = 7 [src/main.rs:113] offset_of!(S, d) = 0

Our fields were re-ordered!

We can tell the Rust compiler not to re-order our fields with

repr(C):

fn main() {

#[repr(C)]

struct S {

a: u8,

b: u16,

c: u8,

d: u32,

}

use memoffset::offset_of;

dbg!(

std::mem::size_of::<S>(),

offset_of!(S, a),

offset_of!(S, b),

offset_of!(S, c),

offset_of!(S, d)

);

}

cargo run -q [src/main.rs:11] std::mem::size_of::<S>() = 12 [src/main.rs:11] offset_of!(S, a) = 0 [src/main.rs:11] offset_of!(S, b) = 2 [src/main.rs:11] offset_of!(S, c) = 4 [src/main.rs:11] offset_of!(S, d) = 8

And now we have the same layout we had with C (hence repr(C)), with

the same padding:

Or we could tell the Rust compiler to not re-order fields, and to pack the struct (ie. to not insert any padding), completely disregarding alignment:

fn main() {

#[repr(C, packed)]

struct S {

a: u8,

b: u16,

c: u8,

d: u32,

}

use memoffset::offset_of;

dbg!(

std::mem::size_of::<S>(),

offset_of!(S, a),

offset_of!(S, b),

offset_of!(S, c),

offset_of!(S, d)

);

}

But now... S.b is no longer aligned properly!

$ cargo run -q [src/main.rs:11] std::mem::size_of::<S>() = 8 [src/main.rs:11] offset_of!(S, a) = 0 [src/main.rs:11] offset_of!(S, b) = 1 [src/main.rs:11] offset_of!(S, c) = 3 [src/main.rs:11] offset_of!(S, d) = 4

And if we try to take a reference to it, Rust will warn us (for now, it'll become a hard error later):

fn main() {

#[repr(C, packed)]

#[derive(Default)]

struct S {

a: u8,

b: u16,

c: u8,

d: u32,

}

let s: S = Default::default();

dbg!(&s.b);

}

$ cargo run -q warning: borrow of packed field is unsafe and requires unsafe function or block (error E0133) --> src/main.rs:12:10 | 12 | dbg!(&s.b); | ^^^^ | = note: `#[warn(safe_packed_borrows)]` on by default = warning: this was previously accepted by the compiler but is being phased out; it will become a hard error in a future release! = note: for more information, see issue #46043 <https://github.com/rust-lang/rust/issues/46043> = note: fields of packed structs might be misaligned: dereferencing a misaligned pointer or even just creating a misaligned reference is undefined behavior warning: 1 warning emitted [src/main.rs:12] &s.b = 0

And yet... everything seems to work fine on my 2018 Core i7.

We can even mutate it, no problem:

fn main() {

#[repr(C, packed)]

#[derive(Default)]

struct S {

a: u8,

b: u16,

c: u8,

d: u32,

}

let mut s: S = Default::default();

unsafe {

s.b = 0x123;

println!("{:#x}", s.b);

}

}

$ cargo run -q 0x123

That's not the only way to obtain an unaligned pointer. Using some pointer

casting, we can also treat, say, two u8 as a single, unaligned u16:

fn main() {

let mut arr = [1u8, 2u8, 3u8];

let ptr_u16 = (&mut arr[1]) as *mut _ as *mut u16;

unsafe {

*ptr_u16 = 0x123;

println!("{:#x}", *ptr_u16);

}

}

Note that clippy will catch this - and has this a hard error by default:

$ cargo clippy

Checking enumpeek v0.1.0 (/home/amos/ftl/enumpeek)

error: casting from `*mut u8` to a more-strictly-aligned pointer (`*mut u16`) (1 < 2 bytes)

--> src/main.rs:3:19

|

3 | let ptr_u16 = (&mut arr[1]) as *mut _ as *mut u16;

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

|

= note: `#[deny(clippy::cast_ptr_alignment)]` on by default

= help: for further information visit https://rust-lang.github.io/rust-clippy/master/index.html#cast_ptr_alignment

It does work, though, again, on my 2018 Core i7.

$ cargo run -q 0x123

So... why should we care about alignment again?

Well, it's a long story.

What do we want? Alignment! Why do we want it? Well...

Basically, back when C was "invented", some processors had poor support for unaligned memory access.

For some of them, unaligned memory accesses would raise a processor exception: the exception handler might be able to correct the unaligned access, but at a very high performance cost. Or the handler might not be able to correct the unaligned access at all, and program execution would just abort.

Some other architectures, like Intel's "Core 2" series (succeeded by Nehalem), transparently support unaligned memory accesses, at "some performance cost".

I'm tempted to link to some microbenchmarks here, but they all contradict each other - it depends on so many factors. Some benchmarks show a 10% slowdown, some show a 50% slowdown, there's clearly a lot going on there.

Just remember that, even after processors started getting first-class support for unaligned reads, it was still desirable to avoid them for performance reasons.

But I've kept the best for last:

Some architectures would not raise a processor exception, but silently perform a different read instead.

An example ARMv4t chip: the sound processor chip for the SEGA NAOMI arcade system.

Wait... it would perform a different read?

Yeah, it would!

That sounds terrifying. Did that really happen? Do you have any sources on that?

I'll do you one better - I'll show you.

Once upon a time, before ARMv5...

We've done some unaligned reads in this article already.

It's relatively easy to do!

First you need some data - we'll just pick 8 unique byte values, so it's easier to follow what's going on:

uint8_t arr[8] = { 0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01 };

And then we do a read from an address that isn't aligned properly. For

example, we try to read an uint32_t starting from the second item

of our array.

Here's the full code sample:

#include <stdint.h>

#include <stdio.h>

int main() {

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

// arrays are zero-indexed, so `1` is the second item

uint32_t *ptr = (uint32_t *)(&arr[1]);

printf("0x%08x\n", *ptr);

return 0;

}

What is this going to print? 0xcdab8967? Wrong!

My 2018 Core i7 is a little-endian CPU:

$ lscpu | grep -E '(Byte Order|Model name)' Byte Order: Little Endian Model name: Intel(R) Core(TM) i7-8750H CPU @ 2.20GHz

...which means bytes are stored from "least significant" to "most significant".

So instead of having the thing that makes sense:

We have this upside-down world arrow spaghetti thing:

$ gcc -Wall main.c -o main && ./main 0x6789abcd

And that's why I picked such specific values for our uint_8 array.

Okay, I still don't believe you though - this unaligned read is working perfectly fine.

Yes, yes.

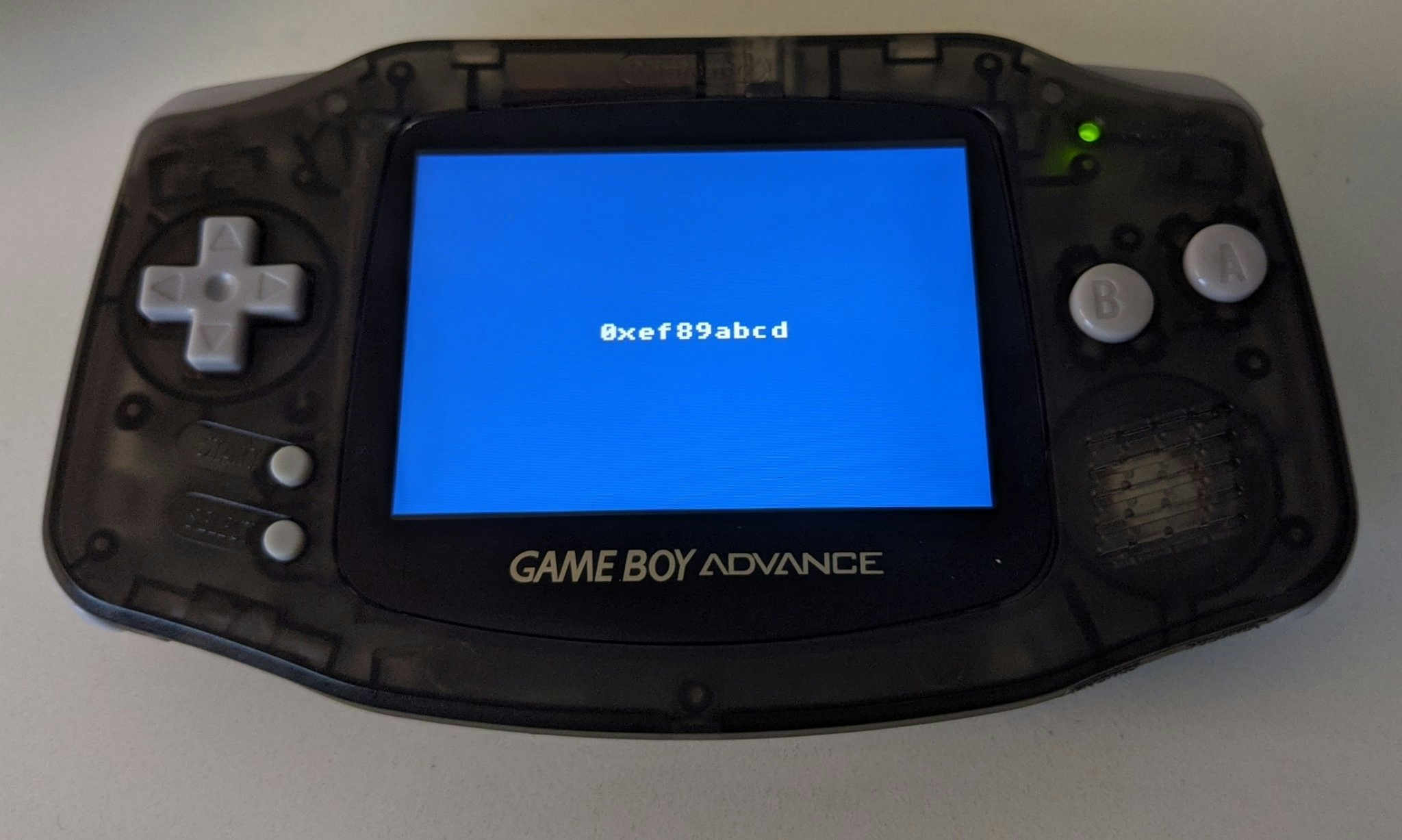

But what if we compile that code... for the Game Boy Advance?

A 'Glacier' Game Boy Advance - one of the colors available at launch in North America

The Game Boy Advance has a 16.8 MHz 32-bit ARM7TDMI processor - which implements the ARMv4T micro-architecture.

But what does it mean?

It means we went back to 2001, Marty.

Okay... let's say I'm curious - how does one even compile for the GBA in 2020?

It's simple! Just install devKitPro on

your OS of choice, and, uh, read a bit lot of documentation, and you're good to go!

Yeah well, hindsight is 20/20. Do you want to see something cool or not?

Go on, go on.

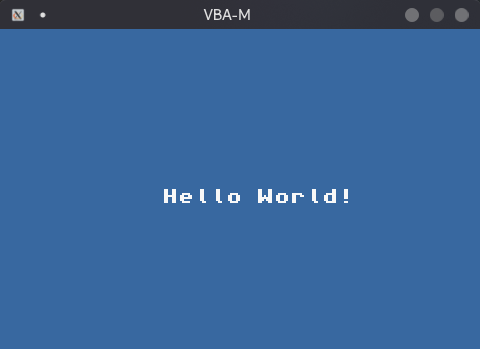

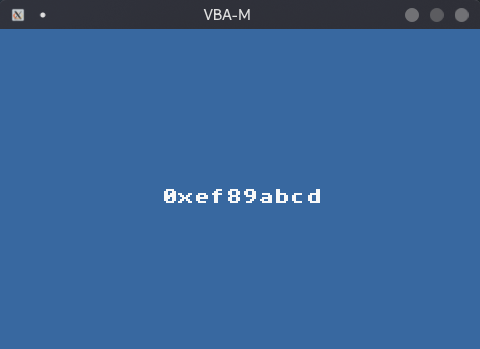

So, I didn't actually read any documentation about the Game Boy Advance, I just found a sample project I liked, and this is what it showed in VisualBoyAdvance:

The C code for the project looked something like this:

// (cut)

// clear screen

iprintf("\x1b[2J");

// print at coordinates 10,10

iprintf("\x1b[10;10H");

iprintf("Hello World!");

// (cut)

So - easy enough! We have some sort of printf implementation - and

apart from that... it's just C! And it's built with GCC, the same

compiler family we just used on 64-bit Linux for my 2018 Core i7.

So, it shouldn't be too hard to get our code running there.

Here's the full listing of source/console.c:

#include <gba_console.h>

#include <gba_video.h>

#include <gba_interrupt.h>

#include <gba_systemcalls.h>

int main(void) {

irqInit();

irqEnable(IRQ_VBLANK);

consoleDemoInit();

// clear screen

iprintf("\x1b[2J");

// print at coordinates 10,10

iprintf("\x1b[10;10H");

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

// arrays are zero-indexed, so `1` is the second item

uint32_t *ptr = (uint32_t *)(&arr[1]);

iprintf("0x%08x", *ptr);

while (1) {

VBlankIntrWait();

}

}

And as you can see, cool bear, it does the wrong rea...

Crap.

AhAH! SEE? It's fine! Everything's fine.

No, wait this can't be right - is VBA-M somehow fixing the unaligned access transparently? This wouldn't work on the actual chip... let me just-

Yeah yeah, the actual chip, sure.

All I'm seeing here is that you're once again making outrageous claims you can't back. So much for checking your sources, Amos. "Oooh we have to be careful about factual errors, ooh we don't want to contribute to misinformation", "our job is to dispel myths", yada-yada.

Hold on, let me see if I can disassemble this.

I noticed that, along with a .gba file, devKitPro also gave us an .elf file.

$ file ./ansi_console/ansi_console.elf ./ansi_console/ansi_console.elf: ELF 32-bit LSB executable, ARM, EABI5 version 1 (SYSV), statically linked, with debug_info, not stripped

And I noticed that, alongside gcc, as, ld, etc., it also had an objdump:

$ $DEVKITARM/bin/arm-none-eabi-objdump --disassemble --source ./ansi_console/ansi_console.elf

int main(void) {

(cut)

// print at coordinates 10,10

iprintf("\x1b[10;10H");

8000286: 4805 ldr r0, [pc, #20] ; (800029c <main+0x2c>)

8000288: f000 fcec bl 8000c64 <iprintf>

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

// arrays are zero-indexed, so `1` is the second item

uint32_t *ptr = (uint32_t *)(&arr[1]);

iprintf("0x%08x", *ptr);

800028c: 4904 ldr r1, [pc, #16] ; (80002a0 <main+0x30>)

800028e: 4805 ldr r0, [pc, #20] ; (80002a4 <main+0x34>)

8000290: f000 fce8 bl 8000c64 <iprintf>

/*! \fn void VBlankIntrWait()

\brief waits for a vertical blank interrupt to occur.

*/

static inline

void VBlankIntrWait() { SystemCall(5); }

8000294: df05 svc 5

8000296: e7fd b.n 8000294 <main+0x24>

Huh, first time looking at ARM assembly, neat.

Yeah uh, good thing objdump has a --source option or I would be

completely lost.

So I'm assuming we want to look at 800028c, where it does the interesting

iprintf call?

Right. It seems to be using ldr (load with immediate offset) to put values

in registers r1 and r0. I'm assuming r0 is the printf format string,

and r1 is the first argument, which is relative to the program counter,

Oh just like rip-relative on x86?

Yes! And the argument in question ends up being read from 80002a0,

which... let me scroll down a bit:

8000298: 080086cc .word 0x080086cc 800029c: 080086d4 .word 0x080086d4 80002a0: 6789abcd .word 0x6789abcd 80002a4: 080086e0 .word 0x080086e0

...which is a constant? Equal to 0x6789abcd?

Bwahahahahahahahahahahaha. Amos.

What?

Do you want to hear a joke?

Sure?

What's the worst thing about laundry day?

I don't know, what is the worst thing about laundry day?

The worst thing about laundry day is constant folding!

...okay. How does that help?

Well, I'm afraid GCC just went ahead and evaluated all your little unaligned crimes at compile-time.

So it ends up printing a constant. Which is the expected result, if unaligned reads were supported transparently.

Right! Because everything is constant - the array is a constant, the pointer arithmetic and dereferencing will be the same for all runs... I see it now.

I can't believe I trusted GCC.

Don't go blaming GCC. GCC is generating the fastest code - no code at all.

By the way, you might want to disassemble your 64-bit Linux program as well.

No? Don't tell me that..

$ objdump --disassemble --source ./main

(cut)

int main() {

1040: 48 83 ec 08 sub rsp,0x8

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

// arrays are zero-indexed, so `1` is the second item

uint32_t *ptr = (uint32_t *)(&arr[1]);

printf("0x%08x\n", *ptr);

1044: be cd ab 89 67 mov esi,0x6789abcd

1049: 48 8d 3d b4 0f 00 00 lea rdi,[rip+0xfb4] # 2004 <_IO_stdin_used+0x4>

1050: 31 c0 xor eax,eax

1052: e8 d9 ff ff ff call 1030 <printf@plt>

return 0;

}

Oh come on. It's just doing mov esi,0x6789abcd!

Yup. Which just goes to show: don't mess with something you don't underst..

That's baloney. We definitely should mess with things we don't understand, we should just verify our work, and possibly have more experienced folks double-check it.

Okay, valid - how are you going to get out of that one, though?

Well, let's fix the Linux 64-bit version first - I want the CPU to be doing that read, not one of GCC's passes.

What if I made a function? Then it definitely couldn't just constant-fold all the fun away.

#include <stdint.h>

#include <stdio.h>

uint32_t deref(uint32_t *ptr) {

return *ptr;

}

int main() {

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

uint32_t *ptr = (uint32_t *)(&arr[1]);

// we are now calling deref:

printf("0x%08x\n", deref(ptr));

return 0;

}

$ gcc -O3 -g main.c -o main && ./main 0x6789abcd

There!

...now try disassembling it?

$ objdump --disassemble --source ./main

0000000000001040 <main>:

uint32_t deref(uint32_t *ptr) {

return *ptr;

}

int main() {

1040: 48 83 ec 08 sub rsp,0x8

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

uint32_t *ptr = (uint32_t *)(&arr[1]);

// we are now calling deref:

printf("0x%08x\n", deref(ptr));

1044: be cd ab 89 67 mov esi,0x6789abcd

1049: 48 8d 3d b4 0f 00 00 lea rdi,[rip+0xfb4] # 2004 <_IO_stdin_used+0x4>

1050: 31 c0 xor eax,eax

1052: e8 d9 ff ff ff call 1030 <printf@plt>

return 0;

}

1057: 31 c0 xor eax,eax

1059: 48 83 c4 08 add rsp,0x8

105d: c3 ret

105e: 66 90 xchg ax,ax

OH COME ON.

Bwahahahahahahha inliner goes brrrr.

Don't forget you're doing this to yourself.

Okay fine, fine, this is GCC, I can just tell it not to inline

the function. Then it has to pass the pointer through a register,

uhh, %rdi probably? And it has to do the actual memory read

in the function to return it through %rax.

Okay, whatever you say champ!

uint32_t __attribute__((noinline)) deref(uint32_t *ptr) {

return *ptr;

}

$ gcc -O3 -g main.c -o main && ./main

0x6789abcd

$ objdump --disassemble --source ./main

(cut)

00000000000011b0 <deref>:

return *ptr;

11b0: 8b 07 mov eax,DWORD PTR [rdi]

}

11b2: c3 ret

11b3: 66 2e 0f 1f 84 00 00 nop WORD PTR cs:[rax+rax*1+0x0]

11ba: 00 00 00

11bd: 0f 1f 00 nop DWORD PTR [rax]

Yes.

0000000000001050 <main>:

uint32_t __attribute__((noinline)) deref(uint32_t *ptr) {

return *ptr;

}

int main() {

1050: 48 83 ec 18 sub rsp,0x18

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

1054: 64 48 8b 04 25 28 00 mov rax,QWORD PTR fs:0x28

105b: 00 00

105d: 48 89 44 24 08 mov QWORD PTR [rsp+0x8],rax

1062: 48 b8 ef cd ab 89 67 movabs rax,0x123456789abcdef

1069: 45 23 01

uint32_t *ptr = (uint32_t *)(&arr[1]);

// we are now calling deref:

printf("0x%08x\n", deref(ptr));

106c: 48 8d 7c 24 01 lea rdi,[rsp+0x1]

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

1071: 48 89 04 24 mov QWORD PTR [rsp],rax

printf("0x%08x\n", deref(ptr));

1075: e8 36 01 00 00 call 11b0 <deref>

107a: 48 8d 3d 83 0f 00 00 lea rdi,[rip+0xf83] # 2004 <_IO_stdin_used+0x4>

1081: 89 c6 mov esi,eax

1083: 31 c0 xor eax,eax

1085: e8 b6 ff ff ff call 1040 <printf@plt>

return 0;

}

Yes! Okay, that should do it.

Now let's do the same to our Game Boy Advance, uh, game:

// includes omitted

uint32_t __attribute__((noinline)) deref(uint32_t *ptr) {

return *ptr;

}

int main(void) {

irqInit();

irqEnable(IRQ_VBLANK);

consoleDemoInit();

// clear screen

iprintf("\x1b[2J");

// print at coordinates 10,10

iprintf("\x1b[10;10H");

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

uint32_t *ptr = (uint32_t *)(&arr[1]);

// we are now calling deref:

iprintf("0x%08x", deref(ptr));

while (1) {

VBlankIntrWait();

}

}

And...

$ make && vbam -f 1 ./ansi_console/ansi_console.gba (cut)

YES!

The above code, ran on an actual Game Boy Advance.

Okay, that is pretty cool.

How is it getting to that result, though? Shouldn't the result

be 0x89abcdef?

Well, no - it's not "rounding down the memory address" - it's just that the bottom 2 bits are not part of the address that's being read - it interprets the bottom two bits as the amount by which to circular-shift the result to the right.

It uses which bits to what now?

Okay it'll be simpler to just show you: if we wanted to emulate the behavior of ARMv4t in our 64-bit Linux executable, we would do this:

// performs a circular shift to the right by `n` bits

uint32_t circ_rshift(uint32_t input, int n) {

return (input >> n) | (input << (32 - n));

}

uint32_t __attribute__ ((noinline)) deref_armv4t(uint32_t *input_addr) {

size_t input = (size_t) input_addr;

// everything except the bottom 2 bits

const size_t ADDR_MASK = 0xfffffffffffffffc;

// just the bottom 2 bits

const size_t SHFT_MASK = ~ADDR_MASK;

uint32_t *addr = (uint32_t *) (input & ADDR_MASK);

int shift_bytes_amount = (int) (input & SHFT_MASK);

int shift_bits_amount = shift_bytes_amount * 8;

return circ_rshift(*addr, shift_bits_amount);

}

int main() {

uint8_t arr[8] = {0xef, 0xcd, 0xab, 0x89, 0x67, 0x45, 0x23, 0x01};

uint32_t *ptr = (uint32_t *)(&arr[1]);

printf("0x%08x\n", deref_armv4t(ptr));

return 0;

}

$ gcc -O3 -g main.c -o main && ./main 0xef89abcd

Okay, that'll take a few reads, but thanks.

This was a long-ass example though. Are you sure it was worth it?

Sure! Developing for small devices is fun. Pick up your phone and send "ARM"

to 0xef89abcd if you want more of this.

Alignment still matters

Let's take a minute to regroup.

Historically, it was easier - and cheaper - for processors to only provide good support for aligned reads. So, for historical and architectural reasons, natural data alignment has been very desirable in a language like C.

Which is why C automatically inserts padding when laying out structs, and also why the outcome of "obtaining an unaligned pointer and dereferencing it" is UB (undefined behavior) - it varies from platform to platform!

Because of that history, data alignment is a concept built deep into the C language, and compiler infrastructure like LLVM - even though our modern processors might be fine with it in some cases.

On modern x86-64 processors (post-Nehalem), unaligned memory access typically comes at a much lower performance cost than, say, on a Core 2, especially if the data is already in cache.

So, are there any reasons left to care about alignment on modern processors?

Yes! Even though performance is getting harder and harder to "get a feel for", due to the increasing complexity of processor architectures, aligned memory access performance remains more predictable.

We've also seen the advent of SIMD (Single Instruction, Multiple Data) instruction sets, which as their name indicates, allow operating on large amounts of data (more than 64 bits' worth) with a single instruction.

Those come with even stricter alignment requirements. For example, SSE requires all its operands to be 16-byte (128-bit) aligned.

Some atomic operations also have alignment requirements - for example, Go's

sync/atomic package documentation notes the following:

On ARM, x86-32, and 32-bit MIPS, it is the caller's responsibility to arrange for 64-bit alignment of 64-bit words accessed atomically. The first word in a variable or in an allocated struct, array, or slice can be relied upon to be 64-bit aligned.

And finally, data alignment rules let us do the one trick smartstring relies on.

A look at smartstring's memory layout

Let's clone smartstring locally, and navigate through it using rust-analyzer's

"Go To Definition" functionality.

The type we already know about is SmartString - it is defined as follows:

#[cfg_attr(target_pointer_width = "64", repr(C, align(8)))]

#[cfg_attr(target_pointer_width = "32", repr(C, align(4)))]

pub struct SmartString<Mode: SmartStringMode> {

data: MaybeUninit<InlineString<Mode>>,

}

In other words - it's mostly a wrapper over InlineString, which looks like:

#[repr(C)]

pub(crate) struct InlineString<Mode: SmartStringMode> {

pub(crate) marker: Marker,

pub(crate) data: Mode::InlineArray,

}

Interesting! There's definitely a parallel with our homegrown version, shown here as a refresher:

struct Inline {

len: u8,

data: [u8; 23],

}

And indeed, Marker is "just an u8":

#[derive(Clone, Copy, Debug)] pub(crate) struct Marker(u8);

If we look at Marker's code, though, we notice something interesting:

it seems to contain both the discriminant, and 7 bits of data.

impl Marker {

#[inline(always)]

fn assemble(discriminant: Discriminant, data: u8) -> u8 {

let data = data;

if UPSIDE_DOWN_LAND {

data << 1 | discriminant.bit()

} else {

discriminant.bit() << 7 | data

}

}

}

How? Why?

Well... it's all thanks to data alignment.

Thanks, data alignment!

I lied a little just now - when I said SmartString was "mostly just a

wrapper for InlineString". You know what else SmartString can be?

A String!

When a SmartString is built from a String, it's literally just

assigning that String to itself, via std::ptr::write:

impl<Mode: SmartStringMode> SmartString<Mode> {

fn from_boxed(boxed: Mode::BoxedString) -> Self {

let mut out = Self {

data: MaybeUninit::uninit(),

};

let data_ptr: *mut Mode::BoxedString = out.data.as_mut_ptr().cast();

unsafe { data_ptr.write(boxed) };

out

}

}

Ok so what you're saying is...

Sorry, no, I don't get it. Come again? A SmartString can be just a String?

How does it know if it's a String or an InlineString? Is it... is it somehow

using unused parts of the String struct to store the discriminant?

It is! In the best way!

Is it using padding maybe? Padding is unused.

No, that would be undefined behavior! Padding can get randomly messed up if the compiler thinks it's fast, remember?

Okay so, what else? What else is unused in String, which can be used to

store the discriminant of a SmartString?

Well - let's look at the definition of the String struct.

pub struct String {

vec: Vec<u8>,

}

Mh.

Mh indeed. Let's look at the definition of Vec:

pub struct Vec<T> {

buf: RawVec<T>,

len: usize,

}

It's turtles all the way down. Let's look at the definition of RawVec

pub struct RawVec<T, A: AllocRef = Global> {

ptr: Unique<T>,

cap: usize,

alloc: A,

}

Okay... and finally, let's look at the definition of Unique:

#[repr(transparent)]

pub struct Unique<T: ?Sized> {

pointer: *const T,

// (cut)

}

Ah. A pointer.

So to summarize, String owns a Vec<u8>, which owns a RawVec<u8>, which

contains a Unique<u8>, which is actually just a *const u8.

So, the first member of the String struct is a pointer to an u8. The

second is an usize, to store capacity, and the third one is length, and

it's 8 bytes as well, so we have:

Right - String starts with a pointer.

But it's a pointer to an u8, right? Shouldn't it have an alignment of 1?

Right! But it points to heap-allocated memory.

In other words, it's set to a value returned by our friendly neighborhood system allocator (or a custom allocator, which hopefully behaves).

And the malloc(3) man page says:

The

malloc()andcalloc()functions return a pointer to the allocated memory, which is suitably aligned for any built-in type.

For any built-in type. So it doesn't even rely on the amount of memory requested - even if we allocate a single byte, over and over, it'll still give us 64-bit aligned addresses on 64-bit Linux.

Let's try allocating ONE MILLION BYTES, just for fun.

Why did you use your supervillain voice just now

I don't know what you're talking about

#include <stdio.h>

#include <stdlib.h>

int main() {

int aligned_count = 0;

const int max = 1000 * 1000;

// N.B: this leaks memory on purpose - if we freed, we might

// not get unique addresses.

for (int i = 0; i < max; i++) {

void *ptr = malloc(4);

// is this 64-bit aligned?

if (((size_t) ptr % 8) == 0) {

aligned_count += 1;

}

}

printf("%d/%d addresses malloc returned were 64-bit aligned\n", aligned_count, max);

}

$ gcc -Wall -O3 -g main.c -o main && ./main 1000000/1000000 addresses malloc returned were 64-bit aligned

Alright, so String starts with a pointer that is 8-byte-aligned on 64-bit Linux.

What does that mean?

Well - all multiples of 8 have the bottom 3 bits set to zero:

0is all0b08is0b100016is0b1000024is0b11000- etc.

In fact, we can also test alignment that way:

#include <stdio.h>

#include <stdlib.h>

int main() {

int aligned_count = 0;

const int max = 1000 * 1000;

for (int i = 0; i < max; i++) {

void *ptr = malloc(4);

// N.B: binary literals are a GCC extension

if (((size_t) ptr & 0b111) == 0) {

aligned_count += 1;

}

}

printf("%d/%d addresses malloc returned had their bottom 3 bits zeroed\n", aligned_count, max);

}

$ gcc -Wall -O3 -g main.c -o main && ./main 1000000/1000000 addresses malloc returned had their bottom 3 bits zeroed

Alright - so, on x86_64, pointers that are heap-allocated have their bottom 3 bits zeroed.

How does that help us?

Well... we can make an enum out of that!

Let's try to make our own smartstring again.

Since we want to beat Rust enums, we'll have to use a struct, again:

#[derive(Default)]

struct SmartString {

data: InlineString,

}

Our SmartString will be either a String or an InlineString:

#[derive(Default)]

#[repr(C, align(8))]

struct InlineString {

marker: u8,

len: u8,

contents: [u8; 22],

}

...so we need to make sure that both types have the same size, and the same alignment:

assert_eq_size!(SmartString, String); assert_eq_align!(SmartString, String);

Building a SmartString from a String is easy:

impl SmartString {

fn new_boxed(s: String) -> Self {

let mut res: Self = Default::default();

let ptr: *mut String = (&mut res.data) as *mut _ as *mut String;

unsafe { *ptr = s };

res

}

}

Building the inline variant is slightly more involved - we need to copy over the data, and we only have 22 bytes of storage. Luckily, using slice copying operations, the standard library will panic if things go wrong, instead of resulting in silent memory corruption.

impl SmartString {

fn new_inline(data: &str) -> Self {

let mut inline: InlineString = Default::default();

// set bottom bit so we can distinguish from a `String`

inline.marker = 0x1;

let src = data.as_bytes();

// this will panic if `data` is too large

let dst = &mut inline.contents[..data.len()];

dst.copy_from_slice(src);

inline.len = data.len() as u8;

Self { data: inline }

}

}

Pay extra attention to the marker field - it's at the very beginning

of our InlineString struct:

Mhh, aren't the bottom 3 bits at.. the bottom? In the last byte of the data

pointer?

They are!

But we're on Little-Endian, so that "last" byte is stored first.

So, once we have that, we can easily determine whether our current variant

is Boxed our Inline:

#[derive(Debug)]

enum Discriminant {

Boxed,

Inline,

}

impl SmartString {

fn discriminant(&self) -> Discriminant {

if self.data.marker & 0x1 == 0 {

Discriminant::Boxed

} else {

Discriminant::Inline

}

}